BinarySearchTrees are a fine idea, but they only work well if they are balanced, meaning that moving from a tree to its left or right subtree reduces the size by a constant fraction. Balanced binary trees add some extra mechanism to the basic binary search tree to ensure balance. Finding efficient ways to balance a tree has been studied for decades, and several good mechanisms are known. We'll try to hit the high points of all of them.

1. The basics: tree rotations

The problem is that as we insert new nodes, some paths through the tree may become very long. So we need to be able to shrink the long paths by moving nodes elsewhere in the tree.

But how do we do this? The basic idea is to notice that there may be many binary search trees that contain the same data, and that we can transform one into another by a local modification called a rotation:

        y            x
       / \   <==>   / \
      x   C        A   y
     / \              / \
    A   B            B   C

    Single rotation on x-y edge

If A < x < B < y < C, then both versions of this tree have the binary search tree property. By doing the rotation in one direction, we move A up and C down; in the other direction, we move A down and C up. So rotations can be used to transfer depth from the leftmost grandchild of a node to the rightmost and vice versa.
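As a rough illustration of the rotation described above, here is a minimal Python sketch. The `Node` class, its field names, and the function names `rotate_right` and `rotate_left` are assumptions made for this example; the integers 1 through 5 stand in for A, x, B, y, and C.

```python
class Node:
    """Binary search tree node; the field names are illustrative."""
    def __init__(self, key, left=None, right=None):
        self.key = key
        self.left = left
        self.right = right

def rotate_right(y):
    """Rotate the x-y edge so that y's left child x becomes the subtree root."""
    x = y.left
    y.left = x.right      # subtree B moves from under x to under y
    x.right = y
    return x              # the caller must re-attach x where y used to hang

def rotate_left(x):
    """Inverse of rotate_right: x's right child y becomes the subtree root."""
    y = x.right
    x.right = y.left      # subtree B moves from under y to under x
    y.left = x
    return y

def inorder(t):
    """Return the keys of t in sorted (in-order) sequence."""
    return [] if t is None else inorder(t.left) + [t.key] + inorder(t.right)

# Left-hand tree of the diagram: A=1, x=2, B=3, y=4, C=5.
before = Node(4, Node(2, Node(1), Node(3)), Node(5))
after = rotate_right(before)   # right-hand tree of the diagram, rooted at x
```

Both functions return the new subtree root instead of updating a parent pointer, so the caller decides where the rotated subtree is attached. The in-order sequence of keys is the same before and after, which is exactly the statement that both shapes are valid binary search trees.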

But what if it's the middle grandchild B that's the problem? A single rotation as above doesn't move B up or down. To move B, we have to reposition it so that it's on the end of something. We do this by splitting B into two subtrees B1 and B2 and doing two rotations that separate the two subtrees while moving both up:

        z                 z                 y
       / \     ===>      / \     ===>      / \
      x   C             y   C             x   z
     / \               / \               /|   |\
    A   y             x   B2            A B1 B2 C
       / \           / \
      B1  B2        A   B1

    Double rotation: rotate xy then zy
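The double rotation can be sketched as two single rotations applied in sequence. The names below (`Node`, `rotate_left`, `rotate_right`, `double_rotate_right`) are hypothetical, and the keys 1 through 7 stand in for A, x, B1, y, B2, z, and C.

```python
class Node:
    """Binary search tree node; the field names are illustrative."""
    def __init__(self, key, left=None, right=None):
        self.key, self.left, self.right = key, left, right

def rotate_right(t):
    """Lift t's left child above t and return it."""
    s = t.left
    t.left, s.right = s.right, t
    return s

def rotate_left(t):
    """Lift t's right child above t and return it."""
    s = t.right
    t.right, s.left = s.left, t
    return s

def double_rotate_right(z):
    """Lift the middle grandchild y (right child of z's left child) to the root."""
    z.left = rotate_left(z.left)   # rotate the x-y edge: y moves above x
    return rotate_right(z)         # rotate the z-y edge: y moves above z

# Leftmost tree of the diagram: A=1, x=2, B1=3, y=4, B2=5, z=6, C=7.
z = Node(6, Node(2, Node(1), Node(4, Node(3), Node(5))), Node(7))
root = double_rotate_right(z)      # rightmost tree of the diagram, rooted at y
```

After the call, B1 hangs under x and B2 under z, so both halves of the old middle subtree have moved up one level.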

2. AVL trees

Rotations in principle let us rebalance a tree, but we still need to decide when to do them. If we try to keep the tree in perfect balance (all paths nearly the same length), we'll spend so much time rotating that we won't be able to do anything else.

AVL trees solve this problem by maintaining the invariant that the heights of the two subtrees sitting under each node differ by at most one. This does not guarantee perfect balance, but it does get close. Let S(k) be the size of the smallest AVL tree with height k. This tree will have at least one subtree of height k-1, but its other subtree can be of height k-2 (and should be, to keep it as small as possible). We thus have the recurrence

- S(k) = 1 + S(k-1) + S(k-2)

which is very close to the Fibonacci recurrence.

It is possible to solve this exactly using GeneratingFunctions. But we can get close by guessing that S(k) ≥ a^k for some constant a. This clearly works for S(0) = a^0 = 1, and it works for S(1) = 2 as long as a ≤ 2. For larger k, compute

S(k) = 1 + S(k-1) + S(k-2) ≥ 1 + a^(k-1) + a^(k-2) = 1 + a^k (1/a + 1/a^2) > a^k (1/a + 1/a^2).

This last quantity is at least a^k provided (1/a + 1/a^2) is at least 1. We can solve exactly for the largest a that makes this work, but a very quick calculation shows that a = 3/2 works: 2/3 + 4/9 = 10/9 > 1. It follows that any AVL tree with height k has at least (3/2)^k nodes, or conversely that any AVL tree with at most (3/2)^k nodes has height at most k. So the height of an arbitrary AVL tree with n nodes is no greater than log_{3/2} n = O(log n).
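The bound is easy to check numerically. The sketch below tabulates the recurrence and compares it against (3/2)^k; the function name `smallest_avl_size` is made up for this example, and the base case S(1) = 2 is an assumption that follows from counting a single node as height 0.

```python
from math import log

def smallest_avl_size(k):
    """S(k): fewest nodes in an AVL tree of height k, with S(0) = 1 and S(1) = 2."""
    sizes = [1, 2]
    for _ in range(2, k + 1):
        sizes.append(1 + sizes[-1] + sizes[-2])   # S(k) = 1 + S(k-1) + S(k-2)
    return sizes[k]

# S(0..5) = 1, 2, 4, 7, 12, 20
table = [smallest_avl_size(k) for k in range(6)]

# Check both directions of the bound for a range of heights.
for k in range(40):
    n = smallest_avl_size(k)
    assert n >= 1.5 ** k                 # at least (3/2)^k nodes at height k
    assert k <= log(n) / log(1.5)        # height at most log_{3/2} n
```

The constant 3/2 is not tight (the exact growth rate is the golden ratio, as for the Fibonacci numbers), but it is enough to give the O(log n) height bound.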

How do we maintain this invariant? The first thing to do is add extra information to the tree, so that we can tell when the invariant has been violated. AVL trees store in each node the difference between the heights of its left and right subtrees, which will be one of -1, 0, or 1. In an ideal world this would require lg 3 ≈ 1.58 bits per node, but since fractional bits are difficult to represent on modern computers, a typical implementation uses two bits. Inserting a new node into an AVL tree involves:

- Doing a standard binary search tree insertion.

- Updating the balance fields for every node on the insertion path.

- Performing a single or double rotation to restore balance if needed.

Implementing this correctly is tricky. Intuitively, we can imagine a version of an AVL tree in which we stored the height of each node (using O(log log n) bits). When we insert a new node, only the heights of its ancestors change, so the second step requires updating O(log n) height fields. Similarly, it is only these ancestors that can become unbalanced. It turns out that fixing the lowest unbalanced ancestor fixes all the ones above it (because it shortens their longest paths by one as well). So just one single or double rotation restores balance.

Deletions are also possible, but are uglier: a deletion in an AVL tree may require as many as O(log n) rotations. The basic idea is to use the standard BinarySearchTree deletion trick of either splicing out a node if it has no right child, or replacing it with the minimum value in its right subtree (the node for which is spliced out); we then have to check to see if we need to rebalance at every node above whatever node we removed.

If we are not fanatical about space optimization, we can just keep track of the heights of all nodes explicitly, instead of managing the -1, 0, 1 balance values. An example of an implementation that uses this approach is given on the page C/AvlTree.
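To make the height-tracking approach concrete, here is a minimal Python sketch of AVL insertion. It is not the C/AvlTree implementation mentioned above; all names (`Node`, `height`, `update`, `rebalance`, `insert`, `is_avl`) are assumptions for this example, and a single node is taken to have height 0.

```python
class Node:
    def __init__(self, key):
        self.key = key
        self.left = None
        self.right = None
        self.height = 0                   # a single node has height 0

def height(t):
    return -1 if t is None else t.height

def update(t):
    t.height = 1 + max(height(t.left), height(t.right))

def rotate_right(t):
    s = t.left
    t.left, s.right = s.right, t
    update(t)                             # t is now below s, so fix it first
    update(s)
    return s

def rotate_left(t):
    s = t.right
    t.right, s.left = s.left, t
    update(t)
    update(s)
    return s

def rebalance(t):
    """Recompute t's height and rotate if its subtrees differ by more than one."""
    update(t)
    balance = height(t.left) - height(t.right)
    if balance > 1:                       # left subtree too tall
        if height(t.left.left) < height(t.left.right):
            t.left = rotate_left(t.left)  # middle grandchild: double rotation
        return rotate_right(t)
    if balance < -1:                      # right subtree too tall
        if height(t.right.right) < height(t.right.left):
            t.right = rotate_right(t.right)
        return rotate_left(t)
    return t

def insert(t, key):
    """Standard BST insertion, rebalancing each ancestor on the way back up."""
    if t is None:
        return Node(key)
    if key < t.key:
        t.left = insert(t.left, key)
    elif key > t.key:
        t.right = insert(t.right, key)
    return rebalance(t)

def is_avl(t):
    """Check the AVL invariant at every node, using the stored heights."""
    if t is None:
        return True
    return (abs(height(t.left) - height(t.right)) <= 1
            and is_avl(t.left) and is_avl(t.right))

root = None
for key in range(1, 1001):                # sorted input, the worst case for a plain BST
    root = insert(root, key)
```

The recursion checks every ancestor for simplicity, even though only one of them actually needs a rotation after an insertion. Storing a full height per node costs more space than the two-bit balance field, but it keeps the case analysis short.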

3. 2–3 trees

An early branch in the evolution of balanced trees was the 2–3 tree. Here all paths have the same length, but internal nodes have either 2 or 3 children. So a 2–3 tree with height k has between 2^k and 3^k leaves and a comparable number of internal nodes. The maximum path length in a tree with n nodes is at most ceiling(lg n), as in a perfectly balanced binary tree.

An internal node in a 2–3 tree holds one key if it has two children (including two nil pointers) and two if it has three children. A search that reaches a three-child node must compare the target with both keys to decide which of the three subtrees to recurse into. As in binary trees, these comparisons take constant time, so we can search a 2–3 tree in O(log n) time.
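The search procedure might look something like the following sketch. The `Node23` class, with a sorted list of one or two keys and a list of children that is empty at a leaf, is an assumed representation chosen for brevity.

```python
class Node23:
    """2-3 tree node: one or two sorted keys; children is empty at a leaf
    and otherwise holds len(keys) + 1 subtrees."""
    def __init__(self, keys, children=()):
        self.keys = list(keys)
        self.children = list(children)

def search(t, target):
    """Return True if target is stored somewhere in the tree rooted at t."""
    while t is not None:
        i = 0
        while i < len(t.keys) and target > t.keys[i]:
            i += 1                        # at most two comparisons per node
        if i < len(t.keys) and t.keys[i] == target:
            return True
        if not t.children:
            return False                  # reached a leaf without finding it
        t = t.children[i]                 # descend into the i-th subtree
    return False

# A small hand-built tree: a three-child root over three leaves.
tree = Node23([10, 20], [Node23([5]), Node23([12, 15]), Node23([30])])
```

Using a list for the keys avoids writing separate code paths for two-child and three-child nodes, at the cost of a little indirection.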

Insertion is done by expanding leaf nodes. This may cause a leaf to split when it acquires a third key. When a leaf splits, it becomes two one-key nodes and the middle key moves up into its parent. This may cause further splits up the ancestor chain; the tree grows in height by adding a new root when the old root splits. In practice only a small number of splits are needed for most insertions, but even in the worst case this entire process takes O(log n) time.

It follows that 2–3 trees have the same performance as AVL trees. Conceptually, they are simpler, but having to write separate cases for 2-child and 3-child nodes doubles the size of most code that works on 2–3 trees. The real significance of 2–3 trees is as a precursor to two other kinds of trees, the red-black tree and the B-tree.

4. Red-black trees

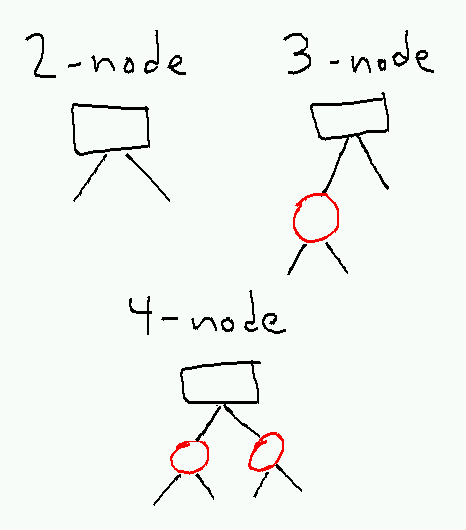

A red-black tree is a 2–3–4 tree (i.e. all nodes have 2, 3, or 4 children and 1, 2, or 3 internal keys) where each node is represented by a little binary tree with a black root and zero, one, or two red extender nodes as follows:

The invariant for a red-black tree is that

- No two red nodes are adjacent.

- Every path contains the same number of black nodes.

For technical reasons, we include the null pointers at the bottom of the tree as black nodes; this has no effect on the invariant, but simplifies the description of the rebalancing procedure.

From the invariant it follows that every path has between k and 2k nodes, where k is the "black-height," the common number of black nodes on each path. From this we can prove that the height of the tree is O(log n).
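Both parts of the invariant can be checked with one recursive pass. In this sketch the `Node` class, the `RED` and `BLACK` constants, and the function name `black_height` are illustrative choices; null pointers are counted as black, as described above.

```python
RED, BLACK = "red", "black"

class Node:
    def __init__(self, key, color, left=None, right=None):
        self.key, self.color = key, color
        self.left, self.right = left, right

def black_height(t):
    """Return the number of black nodes on every path from t down to a null
    pointer (the null counts as black). Raise ValueError if either part of
    the red-black invariant fails somewhere below t."""
    if t is None:
        return 1
    if t.color == RED:
        for child in (t.left, t.right):
            if child is not None and child.color == RED:
                raise ValueError("two adjacent red nodes")
    left, right = black_height(t.left), black_height(t.right)
    if left != right:
        raise ValueError("paths with different numbers of black nodes")
    return left + (1 if t.color == BLACK else 0)

# A black root with two red children satisfies the invariant.
good = Node(2, BLACK, Node(1, RED), Node(3, RED))

# A black child on one side only makes the black counts unequal.
bad = Node(2, BLACK, Node(1, BLACK), None)
```

Here `black_height(good)` counts the root plus the null pointer below each red child, while `black_height(bad)` raises because the left path carries one more black node than the right.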

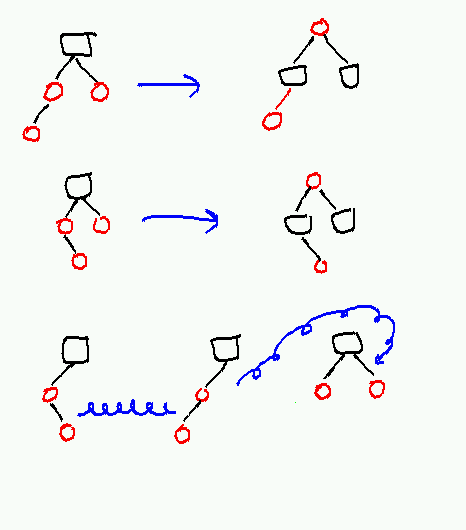

Searching in a red-black tree is identical to searching in any other binary search tree; we simply ignore the color bit on each node. So search takes O(log n) time. For insertions, we use the standard binary search tree insertion algorithm, and insert the new node as a red node. This may violate the first part of the invariant (it doesn't violate the second because it doesn't change the number of black nodes on any path). In this case we need to fix up the constraint by recoloring nodes and possibly performing a single or double rotation.

Which operations we need to do depends on the color of the new node's uncle. If the uncle is red, we can recolor the node's parent, uncle, and grandparent and get rid of the double-red edge between the new node and its parent without changing the number of black nodes on any path. In this case, the grandparent becomes red, which may create a new double-red edge which must be fixed recursively. Thus up to O(log n) such recolorings may occur at a total cost of O(log n).

If the uncle is black (which includes the case where the uncle is a null pointer), a rotation (possibly a double rotation) and a recoloring are necessary. In this case (depicted at the bottom of the picture above), the new grandparent is always black, so there are no more double-red edges. So at most two rotations occur after any insertion.
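The two cases can be sketched in Python as follows. This is one possible arrangement using parent pointers, not a reference implementation; the class and method names (`RedBlackTree`, `_rotate_up`, `_fix_double_red`) are hypothetical, and the sketch additionally keeps the root black after every insertion.

```python
RED, BLACK = "red", "black"

class Node:
    def __init__(self, key):
        self.key = key
        self.color = RED                       # new nodes are inserted red
        self.left = self.right = self.parent = None

class RedBlackTree:
    def __init__(self):
        self.root = None

    def _rotate_up(self, child):
        """Rotate the edge between child and its parent so child moves up."""
        parent, grand = child.parent, child.parent.parent
        if child is parent.left:
            parent.left, child.right = child.right, parent
            if parent.left is not None:
                parent.left.parent = parent
        else:
            parent.right, child.left = child.left, parent
            if parent.right is not None:
                parent.right.parent = parent
        parent.parent, child.parent = child, grand
        if grand is None:
            self.root = child
        elif grand.left is parent:
            grand.left = child
        else:
            grand.right = child

    def insert(self, key):
        parent, cur = None, self.root
        while cur is not None:                 # standard BST descent
            if key == cur.key:
                return                         # ignore duplicates
            parent, cur = cur, (cur.left if key < cur.key else cur.right)
        node = Node(key)
        node.parent = parent
        if parent is None:
            self.root = node
        elif key < parent.key:
            parent.left = node
        else:
            parent.right = node
        self._fix_double_red(node)

    def _fix_double_red(self, node):
        while node.parent is not None and node.parent.color == RED:
            parent = node.parent
            grand = parent.parent              # exists: a red parent is never the root
            uncle = grand.right if parent is grand.left else grand.left
            if uncle is not None and uncle.color == RED:
                parent.color = uncle.color = BLACK   # red uncle: recolor only
                grand.color = RED
                node = grand                   # the double-red edge may reappear higher up
            else:
                if (node is parent.left) != (parent is grand.left):
                    self._rotate_up(node)      # middle grandchild: first half of a double rotation
                    parent = node
                self._rotate_up(parent)        # black uncle: rotate, then recolor
                parent.color, grand.color = BLACK, RED
                break
        self.root.color = BLACK

def height(t):
    """Height in edges; an empty tree has height -1."""
    return -1 if t is None else 1 + max(height(t.left), height(t.right))

tree = RedBlackTree()
for key in [(i * 37) % 101 for i in range(101)]:   # a permutation of 0..100
    tree.insert(key)
```

The loop runs the recoloring case as many times as needed but leaves as soon as it performs a rotation, which matches the bound of at most two rotations per insertion.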

Deletion is more complicated but can also be done in O(log n) recolorings and O(1) (in this case up to 3) rotations. Because deletion is simpler in red-black trees than in AVL trees, and because operations on red-black trees tend to have slightly smaller constants than the corresponding operations on AVL trees, red-black trees are more often used than AVL trees in practice.

5. B-trees

Neither is used as much as a B-tree, a specialized data structure optimized for storage systems where the cost of reading or writing a large block (of typically 4096 or 8192 bytes) is no greater than the cost of reading or writing a single bit. Such systems include typical disk drives, where the disk drive has to spend so long finding data on disk that it tries to amortize the huge (tens of millions of CPU clock cycles) seek cost over many returned bytes.

A B-tree is a generalization of a 2–3 tree where each node has between M/2 and M-1 children, where M is some large constant chosen so that a node (including up to M-1 pointers and up to M-2 keys) will just fit inside a single block. When a node would otherwise end up with M children, it splits into two nodes with M/2 children each, and moves its middle key up into its parent. As in 2–3 trees this may eventually require the root to split and a new root to be created; in practice, M is often large enough that a small fixed height is enough to span as much data as the storage system is capable of holding.
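To see why a small fixed height is usually enough, we can count how many keys fit in a B-tree with a given number of levels. The sketch below uses the node limits stated above (at most M-1 children and M-2 keys per node); the function names and the value M = 256 are illustrative assumptions, not figures from any particular storage system.

```python
def max_keys(M, levels):
    """Largest number of keys in a B-tree with the given number of levels,
    when every node has M-1 children and M-2 keys."""
    nodes = sum((M - 1) ** i for i in range(levels))   # 1 + (M-1) + (M-1)^2 + ...
    return nodes * (M - 2)

def levels_needed(M, n):
    """Smallest number of levels whose maximum capacity is at least n keys."""
    levels = 1
    while max_keys(M, levels) < n:
        levels += 1
    return levels

# With the hypothetical M = 256, four levels hold 255**4 - 1 keys,
# which is a little over four billion.
capacity = max_keys(256, 4)
```

The sum telescopes to (M-1)^levels - 1, so capacity grows by a factor of roughly M with each extra level. Setting M = 4 recovers the 2–3 tree, where nodes have 2 or 3 children.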

Searches in B-trees require looking through log_M n nodes, at a cost of O(M) time per node. If M is a constant the total time is asymptotically O(log n). But the reason for using B-trees is that the O(M) cost of reading a block is trivial compared to the much larger constant time to find the block on the disk; and so it is better to minimize the number of disk accesses (by making M large) than reduce the CPU time.
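A search that reports how many nodes it touched makes the trade-off visible. In this sketch the `BTreeNode` class and the `search` function are assumed names, in-memory objects stand in for disk blocks, and the node-visit count is used as a stand-in for the number of block reads.

```python
class BTreeNode:
    """B-tree node: sorted keys; children is empty at a leaf and otherwise
    holds len(keys) + 1 subtrees."""
    def __init__(self, keys, children=()):
        self.keys = list(keys)
        self.children = list(children)

def search(node, target):
    """Return (found, nodes_visited). Each visited node models one block read."""
    visited = 0
    while node is not None:
        visited += 1
        i = 0
        while i < len(node.keys) and target > node.keys[i]:
            i += 1                        # linear scan: O(M) work inside the block
        if i < len(node.keys) and node.keys[i] == target:
            return True, visited
        if not node.children:
            return False, visited
        node = node.children[i]
    return False, visited

# A small hand-built tree with a two-key root over three leaves.
root = BTreeNode([40, 80], [
    BTreeNode([10, 20, 30]),
    BTreeNode([50, 60, 70]),
    BTreeNode([90, 100]),
])
found, reads = search(root, 60)
```

The inner scan could be replaced by binary search to cut the CPU cost per node, but as the text notes, the number of nodes visited is what dominates when each visit is a disk access.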

6. Splay trees

Yet another approach to balancing is to do it dynamically. Splay trees are binary search trees in which every search operation proceeds by rotating the target to the root. If this is done correctly, the amortized cost (see AmortizedAnalysis) of each tree operation is O(log n), although particular rare operations might take as much as O(n) time. Splay trees require no extra space because they store no balancing information; however, the constant factors on searches can be larger because every search requires restructuring the tree. For some applications this additional cost is balanced by the splay tree's ability to adapt to data access patterns; if some elements of the tree are hit more often than others, these elements will tend to migrate to the top, and the cost of a typical search will drop to O(log m), where m is the size of the "working set" of frequently-accessed elements.

For more details on splay trees, see SedgewickSeries, the original paper, or any number of demos, animations, and other descriptions that can be found via Google.

7. Skip lists

Skip lists are yet another balanced tree data structure, where the tree is disguised as a tower of linked lists. They are described on their own page (SkipLists).

8. Implementations

AVL trees and red-black trees have been implemented for every reasonable programming language you've ever heard of. For C implementations, a good place to start is at http://adtinfo.org/.