Lagrange multipliers are a technique for finding a maximum or minimum of some function f(x) (where x may be a vector) subject to a constraint of the form g(x) = 0. A typical application might be optimally allocating resources to maximize some function f(x,y,z) with a resource constraint g(x,y,z) = x+y+z - c = 0.

The technique generalizes the standard high-school calculus technique of finding extrema by looking for values of x where the first derivative of f is zero; the difference is that now instead of having just a single-dimensional x, we have a multi-dimensional x that may encode many different variables. But the basic intuition is still the same: in order to be at a maximum or minimum, we have to be at a point where a small step will not change the value of f.

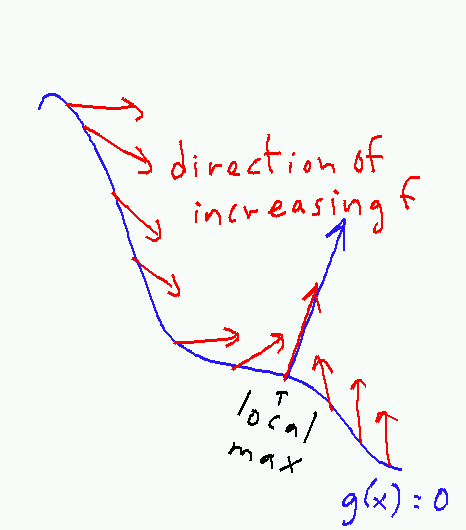

How do we express this in high dimension? It may help to think of the g(x)=0 surface as a hill, where gravity is always perpendicular to the local landscape. At each point we can compute (by taking partial derivatives with respect to each coordinate in x) the direction in which f(x) increases the most. If this direction is not straight up or straight down, we can increase f(x) by walking in some direction (and decrease it by walking in the opposite direction). So we are looking for a point where the direction of increase of f is exactly aligned with the perpendicular to the g(x)=0 surface, which happens to point precisely in the direction of increase of g.

To find this point, we compute partial derivatives. If you're not familiar with partial derivatives, the way to think about them is being essentially the same as regular derivatives where one assumes that every variable except the one we are differentiating against is treated as a constant. So the partial derivative of xy2 with respect to x, written ∂xy2/∂x, is just y2, and the partial derivative of y2 with respect to y, written ∂xy2/∂y, is 2xy. The symbol in the expression is a variant form of the Greek lowercase delta (which you may not be able to see if your browser doesn't like Unicode).

Given a real-valued function f(x,y) (or more generally f(x1,x2,...,xn)), its gradient (written ∇f) or direction of increase is the vector-valued function ∇f = (∂f(x,y)/∂x,∂f(x,y)/∂y) (in general (∂f(x)/∂x1,∂f(x)/∂x2,...f(x)/∂xn)). For example, the function xy2 above has gradient (y2,2xy). This represents an arrow that points in some direction for each value of x and y; it points in the same direction as some other arrow if each coordinate is a nonzero constant multiple of the corresponding coordinate of the other arrow. In the context of function maximization, this nonzero constant multiple (which is usually written as a lowercase lambda but which will be written here as L because I am tired of looking up numerical codes for Greek letters) is called the Lagrange multiplier, and to find a place where ∇f and ∇g point in the same direction, we are looking for a place where ∂f(x,y)/∂x = L ∂g(x,y)/∂x and ∂f(x,y)/∂y = L ∂g(x,y)/∂y for the same value of L.

Let's take a simple example. Suppose that f = xy2 as above and that g(x,y) = x+y-1. Here we want to find (x,y) with x+y=1 that maximizes f. Since we have only two variables, we could rewrite f(x,y) as f(x,1-x), but for larger problems or more complicated g this may not work. So let's try Lagrange multipliers. We have already computed the gradient of f. It is easy to see that the partial derivative of g with respect to either argument is 1, so we want to find x,y, and L such that:

y2 = L

and

- 2xy = L.

Here we can simply equate

y2 = 2xy

and observe that this has solutions y = 2x and y=0. The y=0 solution gives L=0, which doesn't work (since you can always make the gradients equal by setting L=0), so that leaves us with y=2x. Since we also know that x+y=1, we have

- x = 1/3, y = 2/3, f(x,y) = 4/27.

And at this point we can reasonably argue that since (1/3, 2/3) is the only extremum of this function, and since f(x,y) takes on smaller values elsewhere (e.g. f(1,0) = 0), then (1/3,2/3) must in fact be the global maximum.

1. Some more complicated examples

1.1. A product

Suppose we want to maximize P = ∏ xi, where i=1..n, subject to S = ∑ xi = c, for some constant c and xi≥0 for all i.

Compute ∂P/∂xi = ∏i≠j xj = P/xi (provided xi≠0) and ∂S/∂xi = 1. So we have P/xi = λ for all i. Even without knowing what λ is, we can equate P/xi = P/xj which implies xi = xj for all i, j. But then S = ∑ xi = nxi = c gives xi = n/c for all i. This gives P = (n/c)^n^. We can observe that this is likely to be a maximum because for other values of xi (e.g. when some xi,, = 0) we get smaller values of P.

1.2. A scheduling problem

Suppose that we have n jobs that we want to schedule on m parallel machines (say, cores in a multicore CPU). The machines have different speeds, so if we assign ni jobs to machine i, it will take ni/si time units to finish all of them. Our goal is to minimize the sum over all jobs of the time until it is finished. To make things easier, we'll assume that each machine refuses to return its jobs until they are all done, so that the total cost for all jobs assigned to machine i is ni2/si.

In Lagrange multiplier terms, we want to minimize f = ∑ ni^2/si subject to g = ∑ ni = n. We can easily compute ∂f/∂ni = 2ni/si and ∂g/∂ni = 1. So we want 2ni/si = λ for all i, or ni = λsi/2. Here it may help to solve for λ: since ∑ ni = ∑ λsi/2 = n, we get λ = 2n/(∑si) and each ni = 2sin/2∑si = n(si/∑si). So each machine is assigned jobs in proportion to its speed.