For more up-to-date notes see http://www.cs.yale.edu/homes/aspnes/classes/465/notes.pdf.

Contents

1. Synchronous leader election in rings

See AttiyaWelch chapter 3 or LynchBook Chapter 3 for details.

Basic ideas:

- Want a single process to declare itself leader.

- No leader election without breaking symmetry [Angluin 1980]. Proof is that if everybody is in the same state at every step, they all put on the crown at the same time.

With ordered identities, a simple algorithm due to Le Lann, Chang, and Roberts solves the problem in O(N) time with O(N2) messages: I send out my own id clockwise and forward any id bigger than mine. If I get my id back I win. Works with a unidirectional ring, doesn't require synchrony, never produces multiple leaders.

On a bidirectional ring we can get O(N log N) messages and O(N) time with power-of-2 probing [Hirschbirg and Sinclair 1980]. Complexity analysis is mildly painful but basically comes down to the fact that any node that sends a message 2k hops had to be a winner at phase 2k-1, which means that it is the largest of some group of 2k-1 ids. Thus the 2k-hop senders are spaced at least 2k-1 away from each other and there are at most N/2k-1 of them. Summing up over all O(log N) phases we get ∑k 2k N / 2k-1 = O(N log N) messages and ∑k 2k = O(N) time.

- Evil trick: if we have synchronized starting, known N, and known id space, we can have process with id i wait until round i*N to start sending its id around, and have everybody else drop out when they receive it; this way only one process (the one with smallest id) ever starts a message and only N messages are sent. But the running time can be pretty bad.

1.1. Details of LCR

Formally, we'll let the state space for each process i consist of two variables: leader, initially 0, which is set to 1 if i decides it's a leader; and maxId, the largest id seen so far. We assume that i denotes i's position rather than its id, which we'll write as idi. We will also treat all positions as values mod N, to simplify the arithmetic.

Code for LCR is:

initially, leader ← 0, maxId ← idi, send i to clockwise neighbor.

- upon receiving j:

if j = idi, set leader ← 1.

if j > maxId, set maxId ← j and send j to right neighbor.

1.1.1. Proof of correctness

By induction on the round number k. The induction hypothesis is that at the in round k, each process i's leader bit is 0, its maxId value is equal to the largest id in the range (i-k)..i, and that it sends idi-k if and only if idi-k is the largest id in the range (i-k)..i. The base case is that when k = 0, maxId = idi is the largest id in i..i, and i sends idi. For the induction step, observe that in round k-1, i-1 sends id(i-1)-(k-1) = idi-k iff it is the largest in the range (i-k)..(i-1), and that i adopts it as the new value of maxId and sends it just in case it is larger than the previous largest value in (i-k+1)..(i-1), i.e. if it is the largest value in (i-k)..i.

Finally, in round N-1, i-1 sends idi-N = idi if and only if i is the largest id in (i-N)..i-1, the whole state space. So i receives idi and sets leaderi = 1 if and only if it has the maximum id.

1.1.2. Performance

It's immediate from the correctness proof that the protocols terminates after exactly N+1 rounds.

To count message traffic, observe that each process sends at most 1 message per round, for a total of O(N2) messages. This is a tight bound since if the ids are in decreasing order N N-1 N-2 ... 1, then no messages get eaten until they hit N.

1.2. Details of Hirschbirg and Sinclair

Basically the same as for LCR but both the protocol and the invariant get much messier. To specify the protocol, it may help to think of messages as mobile agents and the state of each process as being of the form (local-state, { agents I'm carrying }). Then the sending rule for a process becomes ship any agents in whatever direction they want to go and the transition rule is accept any incoming agents and update their state in terms of their own internal transition rules. An agent state for LCR will be something like (original-sender, direction, hop-count, max-seen) where direction is R or L depending on which way the agent is going, hop-count is initially 2k when the agent is sent and drops by 1 each time the agent moves, and max-seen is the biggest id of any node the agent has visited. An agent turns around (switches direction) when hop-count reaches 0.

To prove this works, we can mostly ignore the early phases (though we have to show that the max-id node doesn't drop out early, which is not too hard). The last phase involves any surviving node probing all the way around the ring, so it will declare itself leader only when it receives its own agent from the left. That exactly one node does so is immediate from the same argument for LCR.

To prove the message bound, compute the sum of all active agents per phase times messages sent as above.

1.3. Lower bounds

We'll do the easiest case: an Omega(N log N) lower bound on messages for synchronous-start comparison-based algorithms in bidirectional synchronous rings. For full details see LynchBook Section 3.6, AttiyaWelch Section 3.4.2, or the original paper in JACM by Frederickson and Lynch.

Basic ideas:

Two fragments i..i+k and j..j+k of a ring are order-equivalent if idi+a > idi+b iff idj+a > idj+b for b = 0..k.

An algorithm is comparison-based if it can't do anything to IDs but copy them and test for <. The state of such an algorithm is modeled by some non-ID state together with a big bag of IDs, messages have a pile of IDs attached to them, etc. Two states/messages are equivalent under some mapping of IDs if you can translate the first to the second by running all IDs through the mapping. Alternate version: Executions of p1 and p2 are similar if they send messages in the same direction(s) in the same rounds, declare themselves leader at the same round; an algorithm is comparison-based based if order-equivalent rings yield similar executions for corresponding processes. This can be turned into the explicit-copying-IDs model by replacing the original protocol with a full-information protocol in which each message is replaced by the ID and a complete history of the sending process (including all messages it has every received).

Define an active round as a round in which at least 1 message is sent. Claim: actions of i after k active rounds depends up to an order-equivalent mapping of ids only on the order-equivalence class of ids in i-k..i+k (the k-neighborhood of i). Proof: by induction on k. Suppose i and j have order-equivalent (k-1)-neighborhoods; then after k-1 active rounds they have equivalent states by ind. hyp. In inactive rounds i and j both receive no messages and update their states in the same way. In active rounds, i and j receive order-equivalent messages and update their states in an order-equivalent way.

- If we have an order of ids with a lot of order-equivalent k-neighborhoods, then after k active rounds if one process sends a message, so do a lot of other ones.

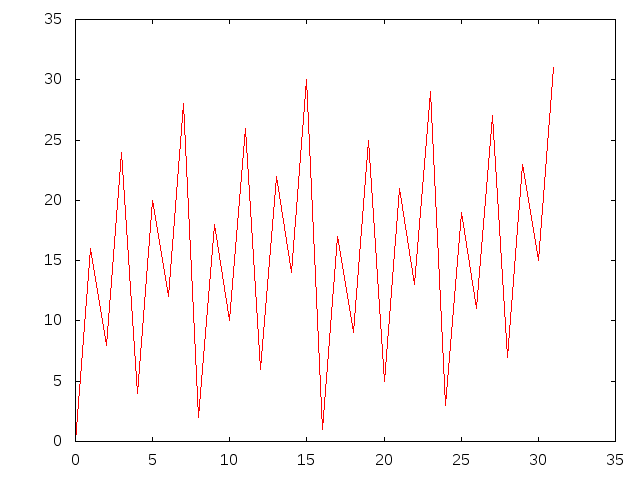

Now we just need to build a ring with a lot of order-equivalent neighborhoods. For N a power of 2 we can use the bit-reversal ring e.g. 000 100 010 110 001 101 011 111. Here's a picture of what this looks like for N=32:

For N not a power of 2 we look up Frederickson and Lynch or Attiya et al. In either case we get Omega(N/k) order-equivalent members of each equivalence class after k active rounds, giving Omega(N/k) messages per active round, which sums to Omega(N log N).

For non-comparison-based algorithms we can still prove Omega(N log N) messages for time-bounded algorithms, but it requires Ramsey_theory. See AttiyaWelch Section 3.4.2 or LynchBook Section 3.7. Here "time-bounded" means that the running time can't depend on the size of the ID space. The intuition is that for any fixed protocol, if the ID space is larger enough, then (using Ramsey theory) there exists a subset of the ID space where the protocol acts like a comparison-based protocol. So the existence of a O(f(N))-message time-bounded protocol implies the existence of an O(f(N))-message comparison-based protocol, and from the previous lower bound we know f(N) is Omega(N lg N). Note that time-boundedness is necessary: we can't prove the lower bound for non-time-bounded algorithms because of the i*N trick.

2. Synchronous leader election in general networks

Basic assumptions:

- Strongly-connected network, i.e. there is a path from every node to every other node.

Upper bound on the diameter (max length of shortest path from one node to another) is known.

Mechanism is the same as in the ring: biggest ID wins. Assuming the network is strongly-connected is essential: otherwise biggest-ID node might not be able to talk to anybody. Diameter bound can be dropped with effort but allows for a simple flooding algorithm as in the ring.

Basic flooding algorithm: state is (maxID, count), message function is send(q, i) = q.maxID if count < diameter and send(q, i) = null otherwise, update function is update(q, msgs) = (max(q.maxID, max(msgs)), count + 1.

- Terminates after exactly diameter rounds.

- Message complexity is exactly |E| * diameter.

Proof of correctness: by induction on round number, show that qi.count = round number and that qi.maxID = max[all u such that d(u,i) ≤ round number] u.ID.

- Optimized flooding algorithm: as in basic algorithm, but don't send messages unless q.maxID has changed. This requires adding sendMsg field to state.

Proof of correctness: show that state of each node at round r except sendMsg is the same in both algorithms. Requires additional invariant that when uv is in E and u.maxID > v.maxID, then u.sendMsg is true.

What if we don't know the diameter bound? Then we need much more machinery. Simplest algorithm is to have each node initiate a DistributedBreadthFirstSearch algorithm but give up if it sees a larger ID than its own.

3. Asynchronous leader election in rings

See also LynchBook §15.1. We'll use AsynchronousMessagePassing model based on IOAutomata.

Assumptions:

Reliable FIFO channels, modeled as universal reliable FIFO channel automaton from AsynchronousMessagePassing.

Processes have identical code (modulo names of actions—IOAutomata model requires that distinct automata have distinct output actions, so we have to embed some sort of position identifier into the action names) but start with distinct initial states that include a unique ID from some totally-ordered set.

- A process waits at most l time before issuing a send and a channel waits at most d time before issuing a recv.

Requirements: exactly one process eventually executes a leader output action. (With some tinkering we can get non-leaders to announce non-leader, but we'll keep things simple.)

Actions for the leader-election-in-a-unidirectional-ring system:

send(i,i+1,m) output of pi; note i+1 wraps around mod N.

recv(i,i+1,m) input of pi+1.

leader(i) output of pi.

3.1. A simple algorithm for a unidirectional ring

Here's the code for a simplified version of LCR for an asynchronous system. Note that this is even further stripped down than the AsynchLCR algorithm of LynchBook §15.1.1, since we don't bother building a queue of outgoing IDs, but instead send the largest ID seen so far.

Here is the code for pi:

- states

- (myid, maxid, unsent, status) initially (myid, myid, true, waiting)

- transitions

- send(i,i+1,m)

- precondition

- m = maxid and unsent = true

- effect

- unsent := false

- recv(i,i+1,m)

- effect

if m > maxid: maxid := m, unsent = true

- if m = myid and status = waiting: status := winning

if m < maxid: no effect

- leader(i)

- precondition

- status = winning effect: status = won

- tasks

- all output actions in one task

The system is the composition of N of these pi together with N universal reliable FIFO channels to connect them; we will refer to the channel from i to i+1 as channel[i]. We now wish to show that it solves leader election assuming all initial IDs are distinct. The intuition is the same as for the original synchronous version of LCR: the max ID happily cruises around the ring until it returns to its source—which then declares itself leader—while all other ID's are eaten.

3.1.1. Correctness proof

The simplest way to express this intuition is by writing some invariants. Below, we let max be the maximum ID and imax be such that myidimax = max.

- Max-spread invariant

There is a process i such that maxidj = max for all j in [imax,i], maxidj < max for all j not in [imax,i], and maxid does not appear in channel[j].queue for j not in [imax,i]. Furthermore, at least one of the following holds:

unsenti = true.

- channel[i].queue contains max.

statusimax <> waiting.

- Eaten-message invariant

If j is in the range [imax,i) then maxidj <> i and channel[j].queue does not contain i.

It is not hard to see that both invariants hold in the initial state (for the max-spread invariant we are looking at case (1) with i=imax). To show that both invariants continue to hold requires a painful and exhaustive case analysis that we will omit here.

That nobody but imax can declare itself leader follows from the eaten-message invariant together with the further observation that statusi = waiting until recv(i-1,i,idi) occurs, which is forbidden by the invariant when i <> imax.

3.1.2. Termination

To show termination, observe that if case (1) of the max-spread invariant holds for some i, eventually i must issue send(i,i+1,max). At this point case (2) holds, and eventually channel[i] must issue recv(i,i+1,max) (possibly after delivering some finite number of messages that were clogging the queue when send(i,i+1,max) occurred). This leads either back to case (1) with i increased by 1, or to case (3), depending on whether max was delivered to some i+1 <> imax or to imax. If case (3) occurs, then we must start with statusimax = winning and it's simply a matter of time before leader(imax) is issued.

3.1.3. Time analysis

For a time analysis, we can repeat the termination argument with explicit delay bounds. The time between case (1) for i and case (2) for i is at most l, where l is the bound on the time between enabling a send action and executing it. The time between case (2) for i and case (1) for i+1 is at most nd, where d is the maximum channel delay, since there are at most N messages clogging up the channel[i] queue (proving this rigorously requires yet another invariant that each id appears either in a single message or as maxid of a single process with unsent = true). Altogether we must wait at most nl + N2d time in the worst case.

However, this analysis gives up too much in assuming that every channel will be full of junk. In fact, we can argue that send(i+r,i+r+1,idi) occurs no later than time r(l+d)+l (if it occurs at all) and recv(i+r,i+r+1,idi) occurs no later than time (r+1)(l+d). The proof is by induction on r: for r = 0, send(i,i,idi) occurs no later than time l, since l is the upper bound on i's task, and if send(i,i,idi) occurs it must be the first message sent by i, so recv(i,i+1,idi) occurs no later than time l+d. For larger r, we have that recv(i+r-1,i+r,idi) occurs no later than time r(l+d), so the next send from i+r either discards idi or sends idi at time no later than r(l+d)+l. Similarly once send(i+r,i+r+1,idi) occurs, we have that any earlier value is delivered by time r(l+d), so idi if still present at this time must be the first in the queue, and so is delivered no later than r(l+d)+l+d = (r+1)(l+d).

3.1.4. Message complexity

Message complexity is similar to LCR, since we can construct an execution that is effectively synchronous and thus works identically to LCR. This gives a worst-case message complexity of O(N2). We can do better.

3.2. Peterson's algorithm for the unidirectional ring

See LynchBook §15.1.3. This gets O(N lg N) message complexity in all executions.

The basic idea (2-way communication version): Start with N candidate leaders. In each of at most lg N asynchronous phases, each candidate probes its nearest neighbors to the left and right; if its ID is larger than the IDs of both neighbors, it survives to the next phase. Non-candidates act as relays passing messages between candidates. As in Hirschberg and Sinclair, the probing operations in each phase take O(N) messages, and at least half of the candidates drop out in each phase. The last surviving candidate wins when it finds that it's its own neighbor.

To make this work in a 1-way ring, we have to simulate 2-way communication by moving the candidates clockwise around the ring to catch up with their unsendable counterclockwise messages. Peterson's algorithm does this with a two-hop approach that is inspired by the 2-way case above; in each phase k, a candidate effectively moves two positions to the right, allowing it to look at the ids of three phase-k candidates before deciding to continue in phase k+1 or not. Here is a very high-level description; it assumes that we can buffer and ignore incoming messages from the later phases until we get to the right phase, and that we can execute sends immediately upon receiving messages. Doing this formally in terms of I/O automata means that we have to build explicit internal buffers into our processes, which we can easily do but won't do here (see LynchBook pp 483-484 to see how to do this the right way.)

Candidate algorithm:

phase := 0

current := myid

while true do

send probe(phase, current)

wait for probe(phase, someid)

uid2 := someid

send probe(phase, someid)

wait for probe(phase, someid)

uid3 := someid

if uid2 = current:

I am the leader!

else if uid2 > current and uid2 > uid3:

current := uid2

phase := phase + 1

else

switch to relay algorithm

Relay algorithm:

upon receiving probe(somephase, someid):

send probe(somephase, someid)Note: the phase arguments in the probe messages are useless if one has FIFO channels, which is why LynchBook doesn't use them. Note also that the algorithm does not elect the process with the highest ID, but the process that is incubating the sole surviving candidate in the last phase.

Proof of correctness is essentially the same as for the 2-way algorithm. For any pair of adjacent candidates, at most one of their current IDs survives to the next phase. So we get a sole survivor after lg N phases. Each process sends or relays at most 2 messages per phases, so we get at most 2 N lg N total messages.

3.3. A simple randomized O(N lg N)-message algorithm

Run LCR where each id is constructed by prepending a long random bit-string to the real id. This gives uniqueness (since the real id's act as tie-breakers) and something very close to a random permutation on the constructed id's. When we have unique random id's, a simple argument shows that the i-th largest id only propagates an expected N/i hops, giving a total of O(N HN) = O(N log N) hops. Unique random id's occur with high probability provided the range of the random sequence is >> N2.

The downside of this algorithm compared to e.g. Peterson's is that knowledge of N is required to pick random id's from a large enough range. It also has higher bit complexity since Peterson's algorithm is sending only IDs (in the official version) without any random padding.

3.4. Lower bound on message complexity

Short version: reduce to the synchronous case and get Omega(N log N). Longer version: avoid Ramsey theory by exploiting asynchrony, which gives the adversary substantially more power. See LynchBook §15.1.4 if you are interested—I don't think we will do this one in class.