
1. Relations, digraphs, and matrices

A binary relation from a set A to a set B is a subset of A×B. In general, an n-ary relation on sets A1, A2, ..., An is a subset of A1×A2×...×An. We will mostly be interested in binary relations, although n-ary relations are important in databases; unless otherwise specified, a relation will be a binary relation. A relation from A to A is called a relation on A; many of the interesting classes of relations we will consider are of this form. Some simple examples are the relations =, <, and ≤ on the integers.

You may recall that functions are a special case of relations (see Functions), but most of the relations we will consider now will not be functions.

Binary relations are often written in infix notation: instead of writing (x,y)∈R, we write xRy. This should be pretty familiar for standard relations like < but might look a little odd at first for relations named with capital letters.

1.1. Directed graphs

Relations are one of several structures over pairs of objects. Another such structure is a directed graph, consisting of a set of vertices V and a set of edges E, where each edge e has an initial vertex init(e) and a terminal vertex term(e). A simple directed graph has no "parallel edges": there are no distinct edges e1 and e2 with init(e1) = init(e2) and term(e1) = term(e2).

If we don't care about the labels of the edges, a simple directed graph can be described by giving E as a subset of V×V; this gives a one-to-one correspondence between relations on a set V and (simple) directed graphs. For relations from A to B, we get a bipartite directed graph, where all edges go from vertices in A to vertices in B.

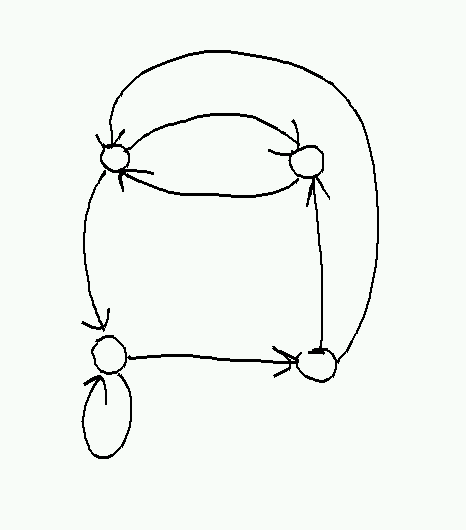

Directed graphs are drawn using a dot for each vertex and an arrow for each edge, like so:

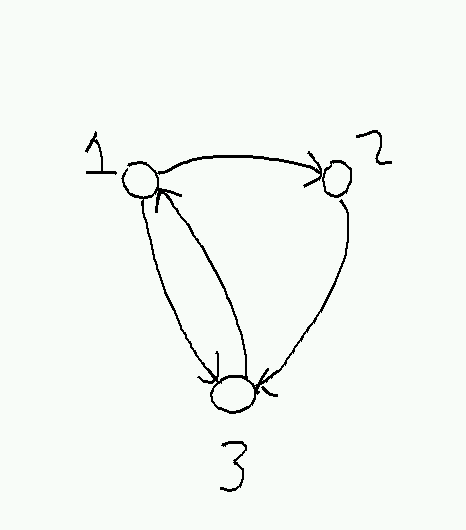

This also gives a way to draw relations. For example, the relation on { 1, 2, 3 } given by { (1,2), (1,3), (2,3), (3,1) } would look like this:

1.2. Matrices

A matrix is a two-dimensional analog of a sequence: in full generality, it is a function A:S×T→U, where S and T are the index sets of the matrix (typically { 1..n } and {1..m} for some n and m). As with sequences, we write Aij for A(i,j). Matrices are typically drawn inside square brackets like this:

\[
A =
\left[
\begin{array}{rrrr}
0 & 1 & 1 & 0 \\
2 & 1 & 0 & 0 \\
1 & 0 & 0 & -1
\end{array}
\right].
\]

The first index of an entry gives the row it appears in and the second one the column, so in this example A₂₁ = 2 and A₃₄ = -1. The dimensions of a matrix are the numbers of rows and columns; in the example, A is a 3×4 (pronounced "3 by 4") matrix.

Matrices are used heavily in LinearAlgebra, but for the moment we will use them to represent relations from { 1..n } to { 1..m }, by setting Aij = 0 if (i,j) is not in the relation and Aij = 1 if (i,j) is. So for example, the relation on { 1..3 } given by { (i,j) | i < j } would appear in matrix form as

\[
\left[
\begin{array}{rrr}
0 & 1 & 1 \\
0 & 0 & 1 \\
0 & 0 & 0
\end{array}
\right].
\]
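The matrix encoding described above can be sketched in a few lines of Python. This is only an illustration: the function name `relation_to_matrix` and the choice of nested lists are assumptions made here, not part of the notes.

```python
def relation_to_matrix(pairs, n, m):
    """Return the n-by-m 0/1 matrix of a relation from {1..n} to {1..m}."""
    # Row i-1, column j-1 holds 1 exactly when (i, j) is in the relation
    # (Python lists are 0-indexed, the notes index from 1).
    return [[1 if (i, j) in pairs else 0 for j in range(1, m + 1)]
            for i in range(1, n + 1)]

# The relation { (i,j) | i < j } on { 1..3 }.
less_than = {(i, j) for i in range(1, 4) for j in range(1, 4) if i < j}
matrix = relation_to_matrix(less_than, 3, 3)
for row in matrix:
    print(row)
```

The printed rows should match the matrix shown above, with ones strictly above the diagonal.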

2. Operations on relations

2.1. Composition

Just like functions, relations can be composed: given R:A→B and S:B→C we define (S∘R):A→C by the rule (x,z)∈(S∘R) if and only if there exists some y∈B such that (x,y)∈R and (y,z)∈S. (In infix notation: x(S∘R)z ⇔ ∃y xRy ∧ ySz.) It's not hard to see that ordinary function composition is just a special case of relation composition.

In matrix terms, composition acts like matrix multiplication, where we replace scalar multiplication with AND and scalar addition with OR: (S∘R)ᵢⱼ = ∨ₖ (Rᵢₖ∧Sₖⱼ). Note that if we use the convention that Rᵢⱼ = 1 if iRj, the order of the product is reversed from the order of composition: the matrix of S∘R is the matrix of R times the matrix of S.

For relations on a single set, we can iterate composition: Rⁿ is defined by R⁰ = (=) and Rⁿ⁺¹ = R∘Rⁿ. (This also works for functions.) In directed graph terms, x Rⁿ y iff there is a path of exactly n edges from x to y (possibly using the same edge more than once).
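As a rough sketch of composition and iterated composition, relations can be modeled as Python sets of pairs. The helper names `compose` and `power` are hypothetical, and the example relation is the one on { 1, 2, 3 } used earlier for drawing digraphs.

```python
def compose(s, r):
    """Return S∘R: all (x, z) such that xRy and ySz for some y."""
    return {(x, z) for (x, y) in r for (y2, z) in s if y == y2}

def power(r, n, universe):
    """Return R^n, with R^0 the equality relation on `universe`."""
    result = {(x, x) for x in universe}
    for _ in range(n):
        # R^(k+1) = R∘R^k
        result = compose(r, result)
    return result

edges = {(1, 2), (1, 3), (2, 3), (3, 1)}
universe = {1, 2, 3}
two_step = power(edges, 2, universe)  # pairs joined by a path of exactly 2 edges
print(sorted(two_step))
```

Here `two_step` contains (1,1) because of the path 1→3→1, which matches the reading of R² as "paths of exactly two edges".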

2.2. Inverses

Relations also have inverses: x R⁻¹ y ⇔ yRx. Unlike functions, every relation has an inverse.

3. Classifying relations

Certain properties of relations on a set are important enough to be given names that you should remember.

- Reflexive

A relation R on a set A is reflexive if (a,a) is in R for all a in A. The relations = and ≤ are both reflexive. The equality relation is in a sense particularly reflexive: a relation R is reflexive if and only if it is a superset of =.

- Symmetric

A relation R is symmetric if (a,b) is in R whenever (b,a) is. Equality is symmetric, but ≤ is not. Another way to state symmetry is that R = R⁻¹.

- Antisymmetric

A relation R is antisymmetric if the only way that both (a,b) and (b,a) can be in R is if a=b. (More formally: aRb ∧ bRa ⇒ a=b.) The "less than" relation < is antisymmetric: if a is less than b, b is not less than a, so the premise of the definition is never satisfied. The "less than or equal to" relation ≤ is also antisymmetric; here it is possible for a≤b and b≤a to both hold, but only if a=b. The set-theoretic statement is that R is antisymmetric iff R∩R⁻¹⊆(=). This is probably not as useful as the simple definition.

- Transitive

A relation R is transitive if (a,b) in R and (b,c) in R implies (a,c) in R. The relations =, <, and ≤ are all transitive. The relation { (x,x+1) | x in ℕ } is not. The set-theoretic form is that R is transitive if R²⊆R, or in general if Rⁿ⊆R for all n > 0.
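For finite relations, each of these four properties can be checked by brute force. The following is a minimal sketch, assuming a relation is stored as a Python set of pairs; the function names are made up for this illustration.

```python
def is_reflexive(r, universe):
    return all((a, a) in r for a in universe)

def is_symmetric(r):
    return all((b, a) in r for (a, b) in r)

def is_antisymmetric(r):
    # Whenever both (a, b) and (b, a) are present, a must equal b.
    return all(a == b for (a, b) in r if (b, a) in r)

def is_transitive(r):
    return all((a, d) in r for (a, b) in r for (c, d) in r if b == c)

universe = range(4)
leq = {(a, b) for a in universe for b in universe if a <= b}
lt = {(a, b) for a in universe for b in universe if a < b}
succ = {(x, x + 1) for x in range(3)}  # finite piece of { (x, x+1) }

print(is_reflexive(leq, universe), is_antisymmetric(leq), is_transitive(leq))
print(is_reflexive(lt, universe), is_antisymmetric(lt), is_transitive(lt))
print(is_transitive(succ))
```

On this small universe the checks agree with the claims in the text: ≤ has all three of reflexivity, antisymmetry, and transitivity, < is antisymmetric and transitive but not reflexive, and the successor relation is not transitive.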

4. Equivalence relations

An equivalence relation is a relation that is reflexive, symmetric, and transitive. Equality is the model of equivalence relations, but some other examples are:

- Equality mod m: The relation x = y (mod m) that holds when x and y have the same remainder when divided by m is an equivalence relation.

- Equality after applying a function: Let f:A→B be any function, and define x ~f y if f(x) = f(y). Then ~f is an equivalence relation.

- Membership in the same block of a partition: Let A be the union of a collection of sets Aᵢ where the Aᵢ are pairwise disjoint. The set { Aᵢ } is called a partition of A and each individual set Aᵢ is called a block of the partition. Let x ~ y if x and y appear in the same block Aᵢ for some i. Then ~ is an equivalence relation.

- Directed graph isomorphism: Suppose that G=(V,E) and G'=(V',E') are directed graphs, and there exists a bijection f:V→V' such that (u,v) is in E if and only if (f(u), f(v)) is in E'. Then G and G' are said to be isomorphic (from Greek "same shape"). The relation G ≅ G' that holds when G and G' are isomorphic is easily seen to be reflexive (let f be the identity function), symmetric (replace f by f⁻¹), and transitive (compose f:G→G' and g:G'→G''); thus it is an equivalence relation.

- Partitioning a plane: draw a curve in a plane (i.e., pick a continuous function f:[0,1]→ℝ²). Let x~y if there is a curve from x to y (i.e., a curve g with g(0) = x and g(1) = y) that doesn't intersect the first curve. Then ~ is an equivalence relation on points in the plane excluding the curve itself. Proof: To show x~x, let g be the constant function g(t) = x. To show x~y ⇔ y~x, consider some function g with g(0) = x and g(1) = y and let g'(t) = g(1-t). To show x~y and y~z ⇒ x~z, let g be a curve from x to y and g' a curve from y to z, and define a new curve (g+g') by (g+g')(t) = g(2t) when t ≤ 1/2 and (g+g')(t) = g'(2t-1) when t ≥ 1/2.

Any equivalence relation ~ on a set A gives rise to a set of equivalence classes, where the equivalence class of an element a is the set of all b such that a ~ b. Because ~ is reflexive, symmetric, and transitive, the equivalence classes form a partition of the set A, usually written A/~ (pronounced "A slash ~" or sometimes "A modulo ~"). A member of a particular equivalence class is said to be a representative of that class. For example, the equivalence classes of equality mod m are the sets Aᵢ = { i + km | k ∈ ℤ } with representatives 0, 1, 2, 3, ..., m - 1. A more complicated case is the equivalence classes of the plane partitioning example; here the equivalence classes are essentially the pieces we get after cutting out the curve f, and any point on a piece can act as a representative for that piece.
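To make the passage from an equivalence relation to its classes concrete, here is a small sketch that groups a finite set into classes. The function `equivalence_classes` is a hypothetical helper, and it assumes the predicate passed in really is an equivalence relation (otherwise comparing against a single representative would not be justified).

```python
def equivalence_classes(elements, equivalent):
    """Partition `elements` into classes of the equivalence `equivalent`."""
    classes = []
    for x in elements:
        for block in classes:
            # For an equivalence relation, testing one representative suffices.
            if equivalent(block[0], x):
                block.append(x)
                break
        else:
            # x is not equivalent to any existing representative: new class.
            classes.append([x])
    return classes

m = 3
classes = equivalence_classes(range(10), lambda x, y: x % m == y % m)
print(classes)
```

With m = 3 this yields three blocks whose first elements 0, 1, 2 are the usual representatives.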

4.1. Why we like equivalence relations

Equivalence relations are the way that mathematicians say "I don't care." If you don't care about which integer you've got except for its remainder when divided by m, then you define two integers that don't differ in any way that you care about to be equivalent and work in ℤ/~ (ℤ/(= mod m) in this case). This turns out to be incredibly useful for defining new kinds of things: for example, we can define multisets (sets where elements can appear more than once) by starting with sequences, declaring x~y if there is a permutation of x that reorders it into y, and then defining a multiset as an equivalence class with respect to this relation.

This can also be used informally: "I've always thought that broccoli, spinach, and kale are in the same equivalence class." (1)

5. Partial orders

A partial order is a relation ≤ that is reflexive, transitive, and antisymmetric: the last means that if x ≤ y and y ≤ x, then x = y. A set S together with a partial order ≤ is called a partially ordered set or poset. A strict partial order is a relation < that is irreflexive and transitive (which implies antisymmetry as well). Any partial order ≤ can be converted into a strict partial order and vice versa by deleting/including the pairs (x,x) for all x.

Examples:

- (ℕ, ≤) is a poset.

- (ℕ, ≥) is also a poset. In general, if R is a partial order, then R⁻¹ is also a partial order.

- The divisibility relation a|b on natural numbers, where a|b if and only if there is some k in ℕ such that b = ak, is reflexive (let k = 1), antisymmetric (if a|b and b≠0 then a≤b, so if a|b and b|a with both nonzero then a≤b and b≤a, implying a=b; and if either is 0 then so is the other, since 0|b only when b=0) and transitive (if b = ak and c = bk' then c = akk'). Thus it is a partial order.

- Let (A,≤A) and (B,≤B) be posets. Then the relation ≤ on A×B defined by (a,b) ≤ (a',b') iff a ≤ a' and b ≤ b' is a partial order. The poset (A×B,≤) defined in this way is called the product poset of A and B.

- Again let (A,≤A) and (B,≤B) be posets. The relation ≤ on A×B defined by (a,b) ≤ (a',b') iff a < a' or (a = a' and b ≤ b') is called lexicographic order on A×B and is a partial order. The useful property of lexicographic order (lex order for short) is that if the original partial orders are total, so is the lex order: this is why dictionary-makers use it. This also gives a source of very difficult-to-visualize total orders, like lex order on ℝ×ℝ, which looks like the classic real number line where every point is replaced by an entire copy of the reals.

- Let Σ be some alphabet and consider the set Σ* = Σ⁰∪Σ¹∪Σ²∪... of all finite words drawn from Σ. Given two words x and y, let x≤y if x is a prefix of y, i.e. if there is some word z such that xz=y. Then (Σ*,≤) is a poset.

- Using the same set Σ*, let x⊑y if x is a subsequence of y, i.e., if there is a sequence of increasing positions i₁ < i₂ < ... < iₖ such that xⱼ is the letter of y at position iⱼ for each j. (For example, bd⊑abcde.) Then (Σ*,⊑) is a poset.
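The difference between the product order and lexicographic order from the examples above can be seen on pairs of integers. This is a sketch under the assumption that both component orders are the usual ≤ on integers; `product_leq` and `lex_leq` are names invented for the illustration.

```python
def product_leq(p, q):
    """Product order: both coordinates must be <=."""
    return p[0] <= q[0] and p[1] <= q[1]

def lex_leq(p, q):
    """Lexicographic order: compare first coordinates, break ties on the second."""
    return p[0] < q[0] or (p[0] == q[0] and p[1] <= q[1])

p, q = (1, 5), (2, 3)
# Incomparable in the product order...
print(product_leq(p, q), product_leq(q, p))
# ...but comparable in lex order, which is total when the components are.
print(lex_leq(p, q), lex_leq(q, p))
```

Python's built-in comparison on tuples is itself lexicographic, so `lex_leq(p, q)` should agree with `p <= q` for tuples of integers.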

There are also some common relations that are not partial orders or strict partial orders but come close. For example, the element-of relation (∈) is irreflexive and antisymmetric (this ultimately follows from the Axiom of Foundation) but not transitive; if x∈y and y∈z we do not generally expect x∈z. The "is at least as rich as" relation is reflexive and transitive but not antisymmetric: if you and I have a net worth of 0, we are each as rich as the other, and yet we are not the same person. Relations that are reflexive and transitive (but not necessarily antisymmetric) are called quasiorders or preorders and can be turned into partial orders by replacing each set of elements for which x≤y and y≤x for all elements in the set by a single element. (As far as I know there is no standard term for relations that are irreflexive and antisymmetric but not necessarily transitive.)

5.1. Drawing partial orders

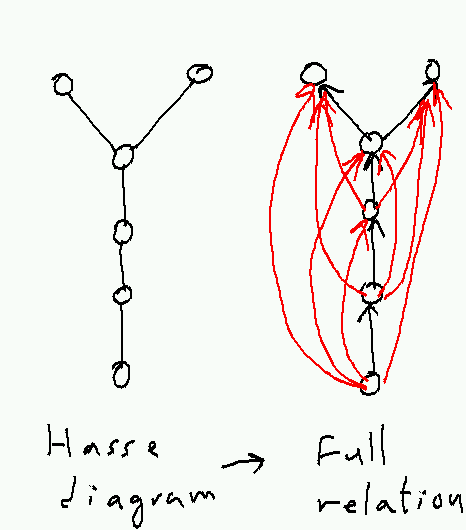

Since partial orders are relations, we can draw them as directed graphs. But for many partial orders, this produces a graph with a lot of edges whose existence is implied by transitivity, and it can be easier to see what is going on if we leave the extra edges out. If we go further and line the elements up so that x is lower than y when x < y, we get a Hasse diagram: a representation of a partially ordered set as a graph where there is an edge from x to y if x < y and there is no z such that x < z < y. (There is special terminology for this situation: such an x is called a predecessor or sometimes immediate predecessor of y; y in turn is the successor or sometimes immediate successor of x.)

Here is an example of a Hasse diagram on a partially-ordered set with 5 elements. Observe that the full relation (depicted on the right) is much more tangled than the Hasse diagram. The red edges are those whose existence is implied by transitivity.
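As a separate small illustration (not the five-element order in the figure), the edges of a Hasse diagram for a finite strict partial order can be computed directly from the definition. The helper `hasse_edges` and the choice of the divisors of 12 as an example are assumptions made for this sketch.

```python
def hasse_edges(elements, less_than):
    """Return pairs (x, y) with x < y and no z such that x < z < y."""
    elements = list(elements)
    return {(x, y)
            for x in elements for y in elements
            if less_than(x, y)
            and not any(less_than(x, z) and less_than(z, y) for z in elements)}

def strictly_divides(a, b):
    return a != b and b % a == 0

divisors = [1, 2, 3, 4, 6, 12]
edges = hasse_edges(divisors, strictly_divides)
full = {(a, b) for a in divisors for b in divisors if strictly_divides(a, b)}
print(sorted(edges))
print(len(edges), "Hasse edges versus", len(full), "pairs in the full strict order")
```

The gap between the two counts is the set of edges implied by transitivity, which is what the Hasse diagram leaves out.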

5.2. Comparability

In a partial order, two elements x and y are comparable if x≤y or y≤x. Elements that are not comparable are called incomparable. In a Hasse diagram, comparable elements are connected by a path that only goes up. For example, in the partial order whose Hasse diagram was given earlier, all elements are comparable to each other except the two elements at the top.

5.3. Minimal and maximal elements

If for some x there is no y < x, x is minimal. A partial order may have any number of minimal elements: e.g. the integers have no minimal element, the naturals have one minimal element, a set with k elements none of which are comparable to each other has k minimal elements. If an element x is not only minimal but also satisfies x < y for all y not equal to x, then x is a minimum. A partial order may have at most one minimum (e.g. 0 in the naturals), but may have no minimum, either because it has no minimal elements to begin with or because it has more than one.

The corresponding terms for elements that are not less than any other element or greater than all other elements are maximal and maximum, respectively.

Here is an example of the difference between a maximal and a maximum element: consider the family of all subsets of ℕ with at most three elements, ordered by ⊆. Then {0,1,2} (or any other three-element set) is a maximal element of this family (it's not a subset of any larger set), but it's not a maximum because it's not a superset of e.g. {3}.
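A finite analogue of this example can be checked by brute force. The sketch below replaces ℕ with { 0, 1, 2, 3 } (an assumption made so the family is finite) and uses hypothetical helper names.

```python
from itertools import combinations

def minimal_elements(elements, less_than):
    """Elements x for which there is no y < x."""
    elements = list(elements)
    return [x for x in elements if not any(less_than(y, x) for y in elements)]

def maximal_elements(elements, less_than):
    """Elements x for which there is no y > x."""
    elements = list(elements)
    return [x for x in elements if not any(less_than(x, y) for y in elements)]

def maximum(elements, less_than):
    """Return the element greater than all others, or None if none exists."""
    elements = list(elements)
    for x in elements:
        if all(y == x or less_than(y, x) for y in elements):
            return x
    return None

def proper_subset(a, b):
    return a < b  # on frozensets, < means proper subset

# All subsets of {0, 1, 2, 3} with at most three elements.
family = [frozenset(c) for size in range(4) for c in combinations(range(4), size)]
top_elements = maximal_elements(family, proper_subset)
print(top_elements)
print(maximum(family, proper_subset))
```

Every three-element subset is maximal, yet `maximum` finds nothing, because no single set in the family contains all the others.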

5.4. Total orders

If any two elements of a partial order are comparable (i.e., if at least one of x≤y or y≤x holds for all x,y), then the partial order is a total order. Total orders include many of the familiar orders on the naturals, the reals, etc.

Any partial order (S,<) can be extended to a total order (generally more than one, if the partial order is not total itself). The essential idea is to break ties between incomparable elements in a way that is consistent with maintaining transitivity. For finite partial orders this can be done by starting with some minimal element and declaring it to be the minimum, and then repeating with the elements that are left. (This process is called a TopologicalSort, and there are fast algorithms for it.)
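The finite procedure just described (pick a minimal element, make it the minimum, repeat) can be written out literally. This sketch follows the description rather than any of the fast algorithms, so it is deliberately naive; `linear_extension` is a hypothetical name.

```python
def linear_extension(elements, less_than):
    """List the elements so that x appears before y whenever x < y."""
    remaining = list(elements)
    order = []
    while remaining:
        # A finite nonempty partial order always has a minimal element.
        x = next(x for x in remaining
                 if not any(less_than(y, x) for y in remaining))
        order.append(x)
        remaining.remove(x)
    return order

def strictly_divides(a, b):
    return a != b and b % a == 0

order = linear_extension([12, 6, 4, 3, 2, 1], strictly_divides)
print(order)
```

Which total order comes out depends on which minimal element is picked at each step, reflecting the remark that the extension is generally not unique.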

For infinite partial orders the situation is more complicated. The intuition is that we can always pick some pair of incomparable elements and declare one less than the other, fill in any other relations implied by transitivity, and repeat. Unfortunately this process may take infinitely long, so we have to argue that it converges in the limit to a genuine total order using a tool called Zorn's lemma, which itself is a theorem about partial orders. (2)

5.5. Well orders

A well order is a particularly restricted kind of total order. A partial order is a well order if it is a total order and, for every nonempty subset S, S contains some minimum element x: one that is less than or equal to all elements of S. An example of a well order is the usual order on ℕ. (3)

An equivalent definition is that a total order is a well order if it contains no infinite descending chains, which are infinite sequences x₁, x₂, ... with x₁ > x₂ > x₃ > ... . To show that this is implied by every set having a least element, suppose that a given total order has the least-element property. Then given a would-be infinite descending chain x₁, x₂, ..., let xᵢ be its least element. But then xᵢ is not greater than xᵢ₊₁, so the chain is not descending. For the converse, suppose that some nonempty set S does not have a least element. Then we can construct an infinite descending chain by choosing any x₁ in S, then for each xᵢ₊₁ choosing some element of S less than xᵢ (one exists because xᵢ is not the least element of S). (Note: iterating this process forever requires using the Axiom of Choice. Bizarre things happen in order theory without the Axiom of Choice.)

The useful property of well-orders is that we can do induction on them. If it is the case that (a) P(m) holds, where m is the smallest element in some set S, and (b) P(x') for all x' < x implies P(x); then P(x) holds for all x in S. The proof is that if P(x) doesn't hold, there is a least element y in S for which it doesn't hold. But this contradicts (a) if y=m and (b) otherwise. This assumes, of course, that the < relation is a well-order, because otherwise m may not exist. (For example, we can't do induction on the integers because there is no number negative enough to be the base case.)

It is possible in an infinite set to have a well-ordering in which some elements do not have predecessors. For example, the order on S = ℕ ∪ { ω } defined by x ≤ y if either (a) x and y are both in ℕ and x ≤ y by the usual ordering on ℕ, or (b) y = ω, is a total order that is also a well order, but ω has no immediate predecessor. In this case we can still do induction proofs, but since ω is not n+1 for any n we need a special case in the proof to handle it. For a more complicated example, the set ω+ω = { 0, 1, 2, ...; ω, ω+1, ω+2, ... } is also well-ordered, so we can do induction on it if we can show P(0), P(x) ⇒ P(x+1), and P(ω) (possibly using P(x) for all x < ω in the last case).

5.6. Lattices

A lattice is a partial order in which (a) each pair of elements x and y has a unique greatest lower bound or meet, written x∧y, with the property that (x∧y)≤x, (x∧y)≤y, and z≤(x∧y) for any z with z≤x and z≤y; and (b) each pair of elements x and y has a unique least upper bound or join, written x∨y, with the property that (x∨y)≥x, (x∨y)≥y, and z≥(x∨y) for any z with z≥x and z≥y.

Examples of lattices are any total order (x∧y is min(x,y), x∨y is max(x,y)), the subsets of a fixed set ordered by inclusion (x∧y is x∩y, x∨y is x∪y), and the divisibility relation on the positive integers (x∧y is the greatest common divisor, x∨y is the least common multiple; see NumberTheory). Products of lattices with the product order (4) are also lattices: (x₁,x₂)∧(y₁,y₂) = (x₁∧₁y₁, x₂∧₂y₂) and (x₁,x₂)∨(y₁,y₂) = (x₁∨₁y₁, x₂∨₂y₂).
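For the divisibility lattice, the meet and join can be computed with `math.gcd`, and the defining property of a greatest lower bound can be checked by brute force over a finite range. The helper names below, and the cutoff of 40 for the candidates, are arbitrary choices for this sketch.

```python
from math import gcd

def divides(a, b):
    return b % a == 0

def meet(x, y):
    """Greatest lower bound under divisibility: the greatest common divisor."""
    return gcd(x, y)

def join(x, y):
    """Least upper bound under divisibility: the least common multiple."""
    return x * y // gcd(x, y)

def is_greatest_lower_bound(g, x, y, candidates):
    """Check g|x, g|y, and z|g for every common lower bound z among candidates."""
    return (divides(g, x) and divides(g, y)
            and all(divides(z, g) for z in candidates
                    if divides(z, x) and divides(z, y)))

x, y = 12, 18
print(meet(x, y), join(x, y))
print(is_greatest_lower_bound(meet(x, y), x, y, range(1, 40)))
```

Note that "greatest" here is with respect to divisibility rather than numerical size: every common divisor of 12 and 18 divides 6.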

6. Closure

In general, the closure of some mathematical object with respect to a given property is the smallest larger object that has the property. Usually "smaller" and "larger" are taken to mean subset or superset, so we are really looking at the intersection of all larger objects with the property. Such a closure always exists if the property is preserved by intersection (i.e., if (∀i P(Si)) ⇒ P(∩Si)) and every object has at least one larger object with the property.

This rather abstract definition can be made more explicit for certain kinds of closures of relations. The reflexive closure of a relation R (whose domain and codomain are equal) is the smallest super-relation of R that is reflexive; it is obtained by adding (x,x) to R for all x in R's domain. The symmetric closure is the smallest symmetric super-relation of R; it is obtained by adding (y,x) to R whenever (x,y) is in R, or equivalently by taking R∪R⁻¹. The transitive closure is obtained by adding (x,z) to R whenever (x,y) and (y,z) are both in R for some y, and continuing to do so until no new pairs of this form remain. The transitive closure can also be computed as R¹∪R²∪R³...; for reflexive R, this is equal to R⁰∪R¹∪R²..., which is often written as R*.

In digraph terms, the reflexive closure adds self-loops to all nodes, the symmetric closure adds a reverse edge for each edge, and the transitive closure adds an edge for each directed path through the graph. One can also take the closure with respect to multiple properties, such as the reflexive symmetric transitive closure of R which will be the smallest equivalence relation in which any elements that are related by R are equivalent.
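For a finite relation stored as a set of pairs, the three closures can be sketched as follows. The transitive closure here simply repeats the "add (x,z)" step until nothing changes, which mirrors the definition but is not meant to be efficient; all function names are hypothetical.

```python
def reflexive_closure(r, universe):
    return set(r) | {(x, x) for x in universe}

def symmetric_closure(r):
    return set(r) | {(y, x) for (x, y) in r}

def transitive_closure(r):
    closure = set(r)
    while True:
        # Add (x, z) whenever (x, y) and (y, z) are both present.
        new_pairs = {(x, z) for (x, y) in closure
                     for (y2, z) in closure if y == y2}
        if new_pairs <= closure:
            return closure
        closure |= new_pairs

universe = {1, 2, 3, 4}
r = {(1, 2), (2, 3)}
print(sorted(transitive_closure(r)))

# Reflexive symmetric transitive closure: the smallest equivalence
# relation containing r.
equiv = transitive_closure(symmetric_closure(reflexive_closure(r, universe)))
print(sorted(equiv))
```

In this example the resulting equivalence relation has the two classes { 1, 2, 3 } and { 4 }.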

Closures provide a way of turning things that aren't equivalence relations or partial orders into equivalence relations and partial orders. For equivalence relations this is easy: take the reflexive symmetric transitive closure, and you get a reflexive symmetric transitive relation. For partial orders it's trickier: antisymmetry isn't a closure property (even though it's preserved by intersection, a non-antisymmetric R can't be made antisymmetric by adding more pairs). Given a relation R on some set S, the best we can do is take the reflexive transitive closure R* and hope that it's antisymmetric. If it is, we are done. If it isn't, we can observe that the relation ~ defined by x~y if xR*y and yR*x is an equivalence relation (proof: x~x because R* is reflexive, x~y ⇒ y~x from the symmetry of the definition, and x~y ∧ y~z ⇒ x~z because transitivity of R* gives xR*y ∧ yR*z ⇒ xR*z and zR*y ∧ yR*x ⇒ zR*x). So we can take the quotient S/~, which smashes all the equivalence classes of ~ into single points, define a quotient relation R*/~ in the obvious way, and this quotient relation will be a partial order.

6.1. Examples

Let R be the relation on finite subsets of ℕ given by xRy if there exists some n∉x such that y = x∪{n}. (We restrict to finite subsets so that any set can be reached from any of its subsets in finitely many steps.) The transitive closure of R is the proper subset relation ⊂, where x⊂y if x⊆y but x≠y; the reflexive transitive closure R* of R is just the ordinary subset relation ⊆. The reflexive symmetric transitive closure of R is the complete relation; given any two sets x and y, we can get from x to ∅ via (R*)⁻¹ and then to y via R*. So in this case the reflexive symmetric transitive closure is not very interesting.

Let R be the relation on ℕ given by xRy if x=2y. Then the reflexive transitive closure R* is the relation given by xR*y if x=2ⁿy for some n∈ℕ, and the reflexive symmetric transitive closure is the relation given by x~y if x=2ⁿy or y=2ⁿx for some n∈ℕ. For this R not all elements of the underlying set are equivalent in the reflexive symmetric transitive closure; instead, we get a separate equivalence class { k, 2k, 4k, 8k, ... } for each odd number k, together with the singleton class { 0 }.
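One way to see the classes in this second example is to note that, for positive integers, x~y exactly when x and y have the same odd part, so the closure is an instance of "equality after applying a function". The sketch below groups 1..12 accordingly; `odd_part` is a name chosen for this illustration and is only defined for positive inputs.

```python
def odd_part(x):
    """Strip all factors of 2 from a positive integer."""
    if x <= 0:
        raise ValueError("odd_part is only defined here for positive integers")
    while x % 2 == 0:
        x //= 2
    return x

classes = {}
for x in range(1, 13):
    # x and y are equivalent exactly when odd_part(x) == odd_part(y).
    classes.setdefault(odd_part(x), []).append(x)

for k, members in sorted(classes.items()):
    print(k, members)
```

Each key is an odd number k, and its list is the part of { k, 2k, 4k, 8k, ... } that falls in the range 1..12.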

(1) Curious fact: two of these unpopular vegetables are in fact cultivars of the same species Brassica oleracea of cabbage.

(2) You don't really need to know about Zorn's Lemma, but if you are curious, Zorn's Lemma says that if (S,≤) is any poset, and every totally-ordered subset S' of S has an upper bound x (i.e. there exists some x such that y ≤ x for all y in S'), then S has a maximal element. Applying this to partial orders, let R be some partial order on a set A, and let S = { R' | R' is a partial order on A with R ⊆ R' }, ordered by subset. Now given any chain of partial orders R₁, R₂, ..., where each partial order extends R and is a subset of the next, their union is also a partial order (this requires proof) and any Rᵢ is ≤ the union. So S has a maximal element R*. If R* is not a total order, we can find a bigger element R** by breaking a tie between some pair of incomparable elements, which would contradict maximality. So R* is the total order we are looking for.

(3) Proof: We can prove that any nonempty S⊆ℕ has a minimum in a slightly roundabout way by induction. The induction hypothesis is that if S contains some element y less than or equal to x, then S has a minimum element. The base case is when x=0; here y must equal 0, which is the minimum. Suppose now that the claim holds for x. Suppose also that S contains some element y ≤ x+1. If there is some y in S with y ≤ x, then S has a minimum element by the induction hypothesis. The alternative is that there is no y in S such that y ≤ x, but there is a y in S with y ≤ x+1. This y must be equal to x+1, and it itself is the minimum element.

(4) The product of two lattices with lexicographic order is not always a lattice. For example, consider the lex-ordered product of the subsets of {0,1} ordered by ⊆ with (ℕ, ≤). For the elements x=({0}, 0) and y=({1}, 0) we have that z is a lower bound on x and y iff z is of the form (∅, k) for some k∈ℕ. But there is no greatest lower bound for x and y because we can always choose a bigger k.