The shortest path problem is to find a path in a graph with given edge weights that has the minimum total weight. Typically the graph is directed, so that the weight wuv of an edge uv may differ from the weight wvu of vu; in the case of an undirected graph, we can always turn it into a directed graph by replacing each undirected edge with two directed edges with the same weight that go in opposite directions. We will use the terms weight and length interchangeably, and use distance for the minimum total path weight between two nodes, even when the weights don't make sense as lengths (for example, when some are negative).

There are two main variants to the problem:

The single-source shortest path problem is to compute the distance from some source node s to every other node in the graph. This variant includes the case where what we really want is just the distance from s to some target node t.

The all-pairs shortest path problem is to compute the distance between every pair of nodes in the graph. This can be solved by running a single-source algorithm once for each starting vertex, but it can be solved more efficiently by combining the work for different starting vertices.

There are also two different assumptions about the edge weights that can radically change the nature of the problem:

All edge weights are non-negative. This is the natural case where edge weights represent distances, and allows a fast greedy solution for the single-source case.

Edge weights are arbitrary. This case typically arises when the edge weights represent the net cost of traversing some edge, which may be negative for profitable edges. Now greedy solutions fail; even though it may be very expensive to get to some distant node u, if there is a good enough edge leaving u you can make up all the costs by taking it. Shortest paths with negative edge weights are typically found by algorithms using techniques related to DynamicProgramming.

1. Single-source shortest paths

In the single-source shortest path problem, we want to compute the distance δ(s,t) from a single source node s to every target node t. (As a side effect, we might like to find the actual shortest path, but usually this can be done easily while we are computing the distances.) There are many algorithms for solving this problem, but most are based on the same technique, known as relaxation.

1.1. Relaxation

In general, a relaxation of an optimization problem is a new problem that replaces equality constraints in the original problem, like

δ(s,t) = minu δ(s,u) + wut

with an inequality constraint, like

d(s,t) ≥ minu d(s,u) + wut.

When we do this kind of replacement, we are also replacing the exact distances δ(s,t) with upper bounds d(s,t), and guaranteeing that d(s,t) is always greater than the correct answer δ(s,t).

The reason for relaxing a problem is that we can start off with very high upper bounds and lower them incrementally until they settle on the correct answer. For shortest paths this is done by setting d(s,t) initially to zero when t=s and +∞ when t≠s (this choice doesn't require looking at the graph). We then proceed to lower the d(s,t) bounds by a sequence of edge relaxations (a different use of the same term), where relaxing an edge uv sets the upper bound on the distance to v to the minimum of the old upper bound and the upper bound that we get by looking at a path through u, i.e.

d'(s,v) := min(d(s,v), d(s,u) + wuv).

It is easy to see that if d(s,v) ≥ δ(s,v) and d(s,u) ≥ δ(s,u), then it will also be the case that d'(s,v) ≥ δ(s,v).

What is less obvious is that performing an edge relaxation on every edge in some shortest s-t path in order starting from the initial state of the d array will set d(s,t) to exactly δ(s,t), even if other relaxation operations occur in between. The proof is by induction on the number of edges in the path. With zero edges, d(s,t) = δ(s,t) = 0. With k+1 edges, the induction hypothesis says that d(s,u) = δ(s,u) after the first k relaxations, where u is the second-to-last vertex in the path. But then the last relaxation sets d(s,t) ≤ δ(s,u) + wut, which is the length of the shortest path and thus equals δ(s,t).

We mentioned earlier that it is possible to compute the actual shortest paths as a side-effect of computing the distances. This is done using relaxation by keeping track of a previous-vertex pointer p[v] for each vertex, so that the shortest path is found by following all the previous-vertex pointers and reversing the sequence. Initially, p[v] = NULL for all v; when relaxing an edge uv, it is set to u just in case d(s,u) + wuv is less than the previously computed distance d(s,v). So in addition to getting the correct distances by relaxing all edges on the shortest path in order, we also find the shortest path.

This raises the issue of how to relax all the edges on the shortest path in order if we don't know what the shortest path is. There are two ways to do this, depending on whether the graph contains negative-weight edges.

1.2. Dijkstra's algorithm

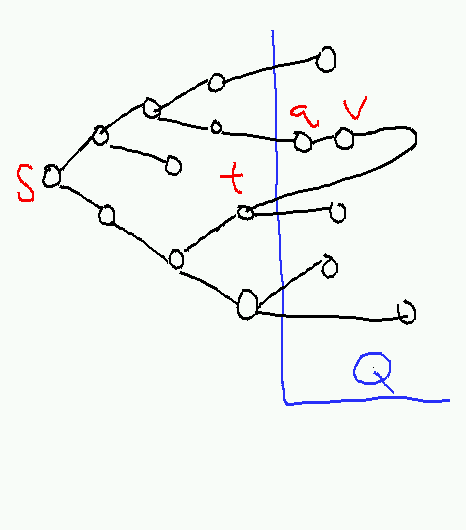

If the graph contains no negative-weight edges, we can apply the GreedyMethod, relaxing at each step all the outgoing edges from the apparently closest vertex v that hasn't been processed yet; if this is in fact the closest vertex, we process all vertices in order of increasing distance and thus relax the edges of each shortest path in order. This method gives Dijkstra's algorithm for single-source shortest paths, one of the best and simplest algorithms for the problem. It requires a priority queue Q that provides an EXTRACT-MIN operation that deletes and returns the element v with smallest key, in this case the upper bound d(s,v) on the distance.

Dijkstra(G,w,s):

Set d[s] = 0 and set d[v] = +infinity for all v != s.

Add all the vertices to Q.

while Q is not empty:

u = EXTRACT-MIN(Q)

for each each uv:

d[v] = min(d[v], d[u] + w(u,v))

return dThe running time of Dijkstra's algorithm depends on the implementation of Q. The simplest implementation is just to keep around an array of all unprocessed vertices, and to carry out EXTRACT-MIN by performing a linear-time scan for one with the smallest d[u]. This gives a cost to EXTRACT-MIN of O(V), which is paid V times (once per vertex), for a total of O(V2) time. The additional overhead of the algorithm takes O(V) time, except for the loop over outgoing edges from u, all of whose iterations together take O(E) time. So the total cost is O(V2 + E) = O(V2). This can be improved for sparse graphs to O((V+E) log V) using a heap to represent Q (the extra log V on the E comes from the cost of moving elements within the heap when their distances drop), and it can be improved further to O(V log V + E) time using a FibonacciHeap.

Why does Dijkstra's algorithm work? Assuming there are no negative edge weights, there is a simple proof that d[u] = δ(s,u) for any v that leaves the priority queue. The proof is by induction on the number of vertices that have left the queue, and requires a rather complicated induction hypothesis, which is that after each pass through the outer loop:

If u is any vertex not in the queue, then d[u] = δ(s,u).

- If u is any vertex not in the queue, and v is any vertex in the queue, then δ(s,u) ≤ δ(s,v)

If u is any vertex not in the queue, and v is any vertex in the queue, then d[v] ≤ δ(s,u) + wuv (where wuv is taken to be +∞ if uv is not an edge in the graph).

This invariant looks ugly but what it says is actually pretty simple: after i steps, we have extracted the i closest vertices and correctly computed there distance, and for any other vertex v, d[v] is at most the length of the shortest path that consists only of non-queue vertices except for v. If the first two parts of the invariant hold, the third is immediate from the relaxation step in the algorithm. So we concentrate on proving the first two parts.

The base case is obtained by consider the state where s is the only vertex not in the queue; we easily have d[s] = 0 = δ(s,s) ≤ δ(s,v) for any vertex v in the queue.

For later u, we'll assume that the invariant holds at the beginning of the loop, and show that it also holds at the end. For each v in the queue, d[v] is at most the length of the shortest s-v path that uses only vertices already processed. We'll show that the smallest d[v] is in fact equal to δ(s,v) and is no greater than δ(s,v') for any v' in the queue. Consider any vertex v that hasn't been processed yet. Let t be the last vertex before v on some shortest s-v path that uses only previously processed vertices. From the invariant we have that d[v] ≤ δ(s,t) + wtv. Now consider two cases:

- The s-t-v path is a shortest path. Then d[v] = δ(s,v)

The s-t-v path is not a shortest path. Then d[v] > δ(s,v) and there is some shorter s-v path whose last vertex before v is t'. But this shorter s-t'-v path can only exist if t' is still in the queue. If we let q be the first vertex in some shortest s-t'-v path that is still in the queue, the s-q part of the path is a shortest path that uses only non-queue vertices. So d[q] = δ(s,q) ≤ δ(s,v) < d[v].

Let u be returned by EXTRACT-MIN. If case 1 applies to u, part 1 of the invariant follows immediately. Case 2 cannot occur because in this case there would be some q with d[q] < d[u] and EXTRACT-MIN would have returned q instead. We have thus proved that part 1 continues to hold.

For part 2, consider the two cases for some v≠u. In case 1, δ(s,v) = d[v] ≥ d[u] = δ(s,u). In case 2, δ(s,v) ≥ δ(s,q) = d[q] ≥ d[u] = δ(s,u). Thus in either case δ(s,v) ≥ δ(s,u) and part 2 of the invariant holds.

Part 3 of the invariant is immediate from the code.

To complete the proof of correctness, observe that the first part of the induction hypothesis implies that all distances are correct when the queue is empty.

1.3. Bellman-Ford

What if the graph contains negative edges? Then Dijkstra's algorithm may fail in the usual pattern of misled GreedyAlgorithms: the very last vertex v processed may have spectacularly negative edges leading to other vertices that would greatly reduce their distances, but they have already been processed and it's too late to take advantage of this amazing fact (more specifically, it's not too late for the immediate successors of v, but it's too late for any other vertex reachable from such a successor that is not itself a successor of v).

But we can still find shortest paths using the technique of relaxing every edge on a shortest path in sequence. The Bellman-Ford algorithm does so under the assumption that there are no negative-weight cycles in the graph, in which case all shortest paths are simple---they contain no duplicate vertices---and thus have at most V-1 edges in them. If we relax every edge, we are guaranteed to get the first edge of every shortest path; relaxing every edge again gets the second edge; and repeating this operation V-1 gets all edges in order.

BellmanFord(G,s,w):

Set d[s] = 0 and set d[v] = +infinity for all v != s.

for i = 1 to V-1

for each each edge uv in G:

d[v] = min(d[v], d[u] + w(u,v))

return dThe running time of Bellman-Ford is O(VE), which is generally slower than even the simple O(V2) implementation of Dijkstra's algorithm; but it handles any edge weights, even negative ones.

What if a negative cycle exists? In this case, there may be no shortest paths; any short path that reaches a vertex on the cycle can be shortened further by taking a few extra loops around it. The Bellman-Ford algorithm can be used to detect such cycles by running the outer loop one more time---if d[v] drops for any v, then a negative cycle reachable from s exists. The converse is also true; intuitively, this is because further reductions in distance can only propagate around the negative cycle if there is some edge that can be relaxed further in each state. CormenEtAl Section 24.1 contains a real proof.

2. All-pairs shortest paths

There is a very simple DynamicProgramming algorithm known as Floyd-Warshall that computes the distance between all V2 pairs of vertices in Θ(V3) time. This is no faster than running Dijkstra's algorithm V times, but it works even if some of the edge weights are negative.

Like any other dynamic programming algorithm, Floyd-Warshall starts with a recursive decomposition of the shortest-path problem. The basic idea is to cut the path in half, by expanding d(i,j) as mink (d(i,k) + d(k,j)), but this requires considering n-2 intermediate vertices k and doesn't produce a smaller problem. There are a couple of ways to make the d(i,k) on the right-hand side "smaller" than the d(i,j) on the left-hand side---for example, we could add a third parameter that is the length of the path and insist that the subpaths on the right-hand side be half the length of the path on the left-hand side---but most of these approaches still require looking at Θ(n) intermediate vertices. The trick used by Floyd-Warshall is to make the third parameter be the largest vertex that can be used in the path. This allows us to consider only one new intermediate vertex each time we increase this limit.

Define d(i,j,k) as the length of the shortest i-j path that uses only vertices with indices less than or equal to k. Then

d(i,j,0) = wij, d(i,j,k) = min(d(i,j,k-1), d(i,k,k-1) + d(k,j,k-1)).

The reason this decomposition works (for any graph that does not contain a negative-weight cycle) is that every shortest i-j path with no vertex greater than k either includes k exactly once (the second case) or not at all. The nice thing about this decomposition is that we only have to consider two values in the minimum, so we can evaluate d(i,j,k) in O(1) time if we already have d(i,k,k-1) and d(k,j,k-1) in our table. The natural way to guarantee this is to build the table in order of increasing k. We assume that the input is given as an array of edge weights with +∞ for missing edges; the algorithm's speed is not improved by using an adjacency-list representation of the graph.

FloydWarshall(w):

// initialize first plane of table

for i = 1 to V do

for j = 1 to V do

d[i,j,0] = w[i,j]

// fill in the rest

for k = 1 to V do

for i = 1 to V do

for j = 1 to V do

d[i,j,k] = min(d[i,j,k-1], d[i,k,k-1] + d[k,j,k-1])

// pull out the distances where all vertices on the path are <= n

// (i.e. with no restrictions)

return d' where d'[i,j] = d[i,j,k]The running time of this algorithm is easily seen to be Θ(V3). As with Bellman-Ford, its output is guaranteed to be correct only if the graph does not contain a negative cycle; if the graph does contain a negative cycle, it can be detected by looking for vertices with d'[i,i] < 0.

3. Implementations

Below are C implementations of Bellman-Ford, Floyd-Warshall, and Dijkstra's algorithm (in a separate file). The Dijkstra's algorithm implementation uses the generic priority queue from the CS223/2005/Assignments/HW08 sample solutions. Both files use an extended version of the Graph structure from C/Graphs that supports weights.

Here are the support files:

Here is some test code and a Makefile:

And here are the actual implementations:

1 /* various algorithms for shortest paths */

2

3 #define SHORTEST_PATH_NULL_PARENT (-1)

4

5 /* Computes distance of each node from starting node */

6 /* and stores results in dist (length n, allocated by the caller) */

7 /* unreachable nodes get distance MAXINT */

8 /* If parent argument is non-null, also stores parent pointers in parent */

9 /* Allows negative-weight edges and runs in O(nm) time. */

10 /* returns 1 if there is a negative cycle, 0 otherwise */

11 int bellman_ford(Graph g, int source, int *dist, int *parent);

12

13 /* computes all-pairs shortest paths using Floyd-Warshall given */

14 /* an adjacency matrix */

15 /* answer is returned in the provided matrix! */

16 /* assumes matrix is n pointers to rows of n ints each */

17 void floyd_warshall(int n, int **matrix);

1 #include <stdlib.h>

2 #include <assert.h>

3 #include <values.h>

4

5 #include "graph.h"

6 #include "shortest_path.h"

7

8 /* data field for relax helper */

9 struct relax_data {

10 int improved;

11 int *dist;

12 int *parent;

13 };

14

15 static void

16 relax(Graph g, int source, int sink, int weight, void *data)

17 {

18 int len;

19 struct relax_data *d;

20

21 d = data;

22

23 if(d->dist[source] < MAXINT && weight < MAXINT) {

24 len = d->dist[source] + weight;

25

26 if(len < d->dist[sink]) {

27 d->dist[sink] = len;

28 if(d->parent) d->parent[sink] = source;

29 d->improved = 1;

30 }

31 }

32 }

33

34 /* returns 1 if there is a negative cycle */

35 int

36 bellman_ford(Graph g, int source, int *dist, int *parent)

37 {

38 int round;

39 int n;

40 int i;

41 struct relax_data d;

42

43 assert(dist);

44

45 d.dist = dist;

46 d.parent = parent;

47 d.improved = 1;

48

49 n = graph_vertex_count(g);

50

51 for(i = 0; i < n; i++) {

52 d.dist[i] = MAXINT;

53 if(d.parent) d.parent[i] = SHORTEST_PATH_NULL_PARENT;

54 }

55

56 d.dist[source] = 0;

57 d.parent[source] = source;

58

59 for(round = 0; d.improved && round < n; round++) {

60 d.improved = 0;

61

62 /* relax all edges */

63 for(i = 0; i < n; i++) {

64 graph_foreach_weighted(g, i, relax, &d);

65 }

66 }

67

68 return d.improved;

69 }

70

71 void

72 floyd_warshall(int n, int **d)

73 {

74 int i;

75 int j;

76 int k;

77 int newdist;

78

79 /* The algorithm:

80 *

81 * d(i, j, k) = min distance from i to j with all intermediates <= k

82 *

83 * d(i, j, k) = min(d(i, j, k-1), d(i, k, k-1) + d(k, j, k-1)

84 *

85 * We will allow shorter paths to sneak in to d(i, j, k) so that

86 * we don't have to store anything extra.

87 */

88

89 /* initial matrix is essentially d(:,:,-1) */

90 /* within body of outermost loop we compute d(:,:,k) */

91 for(k = 0; k < n; k++) {

92 for(i = 0; i < n; i++) {

93 /* skip if we can't get to k */

94 if(d[i][k] == MAXINT) continue;

95 for(j = 0; j < n; j++) {

96 /* skip if we can't get from k */

97 if(d[k][j] == MAXINT) continue;

98

99 /* else */

100 newdist = d[i][k] + d[k][j];

101 if(newdist < d[i][j]) {

102 d[i][j] = newdist;

103 }

104 }

105 }

106 }

107 }

1 #define DIJKSTRA_NULL_PARENT (-1)

2

3 /* Computes distance of each node from starting node */

4 /* and stores results in dist (length n, allocated by the caller) */

5 /* unreachable nodes get distance MAXINT */

6 /* If parent argument is non-null, also stores parent pointers in parent */

7 /* Assumes no negative-weight edges */

8 /* Runs in O(n + m log m) time. */

9 /* Note: uses pq structure from pq.c */

10 void dijkstra(Graph g, int source, int *dist, int *parent);

1 #include <stdlib.h>

2 #include <assert.h>

3 #include <values.h>

4

5 #include "graph.h"

6 #include "pq.h"

7 #include "dijkstra.h"

8

9 /* internal edge representation for dijkstra */

10 struct pq_elt {

11 int d; /* distance to v */

12 int u; /* source */

13 int v; /* sink */

14 };

15

16 static int

17 pq_elt_cmp(const void *a, const void *b)

18 {

19 return ((const struct pq_elt *) a)->d - ((const struct pq_elt *) b)->d;

20 }

21

22 struct push_data {

23 PQ pq;

24 int *dist;

25 };

26

27 static void push(Graph g, int u, int v, int wt, void *data)

28 {

29 struct push_data *d;

30 struct pq_elt e;

31

32 d = data;

33

34 e.d = d->dist[u] + wt;

35 e.u = u;

36 e.v = v;

37

38 pq_insert(d->pq, &e);

39 }

40

41 void

42 dijkstra(Graph g, int source, int *dist, int *parent)

43 {

44 struct push_data data;

45 struct pq_elt e;

46 int n;

47 int i;

48

49 assert(dist);

50

51 data.dist = dist;

52 data.pq = pq_create(sizeof(struct pq_elt), pq_elt_cmp);

53 assert(data.pq);

54

55 n = graph_vertex_count(g);

56

57 /* set up dist and parent arrays */

58 for(i = 0; i < n; i++) {

59 dist[i] = MAXINT;

60 }

61

62 if(parent) {

63 for(i = 0; i < n; i++) {

64 parent[i] = DIJKSTRA_NULL_PARENT;

65 }

66 }

67

68 /* push (source, source, 0) */

69 /* this will get things started with parent[source] == source */

70 /* and dist[source] == 0 */

71 push(g, source, source, -MAXINT, &data);

72

73 while(!pq_is_empty(data.pq)) {

74 /* pull the min value out */

75 pq_delete_min(data.pq, &e);

76

77 /* did we reach the sink already? */

78 if(dist[e.v] == MAXINT) {

79 /* no, it's a new edge */

80 dist[e.v] = e.d;

81 if(parent) parent[e.v] = e.u;

82

83 /* throw in the outgoing edges */

84 graph_foreach_weighted(g, e.v, push, &data);

85 }

86 }

87

88 pq_destroy(data.pq);

89 }