This file was hand-built from CS202/Notes on 2005-11-17, and may since have become out of date.

Contents

- WhyYouShouldKnowAboutMath

- So why do I need to learn all this nasty mathematics?

- But isn't math hard?

- Thinking about math with your heart

- WhatYouShouldKnowAboutMath

- Foundations and logic

- Fundamental mathematical objects

- Modular arithmetic and polynomials

- Linear algebra

- Graphs

- Counting

- Probability

- Tools

- PropositionalLogic

- PredicateLogic

- InferenceRules

- ProofTechniques

- SetTheory

- Naive set theory

- Operations on sets

- Axiomatic set theory

- Cartesian products, relations, and functions

- Constructing the universe

- Sizes and arithmetic

- Further reading

- NaturalNumbers

- Axioms

- Order properties

- PeanoAxioms

- InductionProofs

- Simple induction

- Strong induction

- Recursion

- Structural induction

- SummationNotation

- Summations

- Computing sums

- Products

- Other big operators

- RelationsAndFunctions

- StructuralInduction

- SolvingRecurrences

- The problem

- Guess but verify

- Converting to a sum

- The Master Theorem

- PigeonholePrinciple

- HowToCount

- What counting is

- Basic counting techniques

- Applying the rules

- An elaborate counting problem

- Further reading

- BinomialCoefficients

- Recursive definition

- Vandermonde's identity

- Sums of binomial coefficients

- Application: the inclusion-exclusion formula

- Negative binomial coefficients

- Fractional binomial coefficients

- Further reading

- GeneratingFunctions

- Basics

- Some standard generating functions

- More operations on formal power series and generating functions

- Counting with generating functions

- Generating functions and recurrences

- Recovering coefficients from generating functions

- Asymptotic estimates

- Recovering the sum of all coefficients

- A recursive generating function

- Summary of operations on generating functions

- Variants

- Further reading

- ProbabilityTheory

- History and interpretation

- Probability axioms

- Probability as counting

- Independence and the intersection of two events

- Union of two events

- Conditional probability

- Random variables

- RandomVariables

- Random variables

- The distribution of a random variable

- The expectation of a random variable

- The variance of a random variable

- Probability generating functions

- Summary: effects of operations on expectation and variance of random variables

- The general case

- Relations

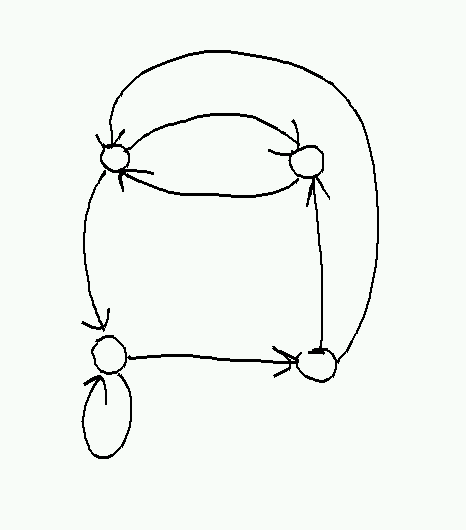

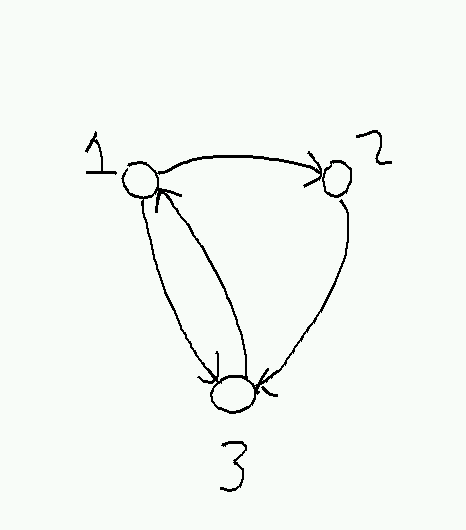

- Relations, digraphs, and matrices

- Operations on relations

- Classifying relations

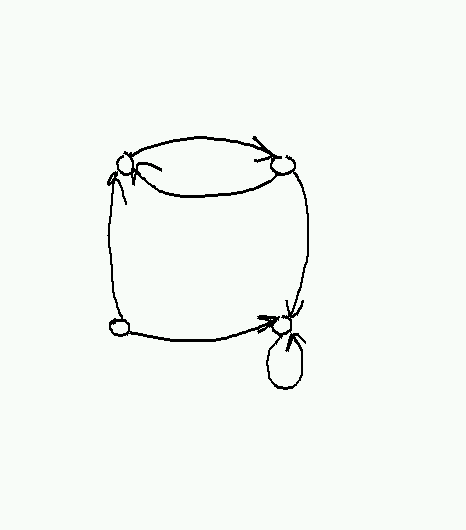

- Equivalence relations

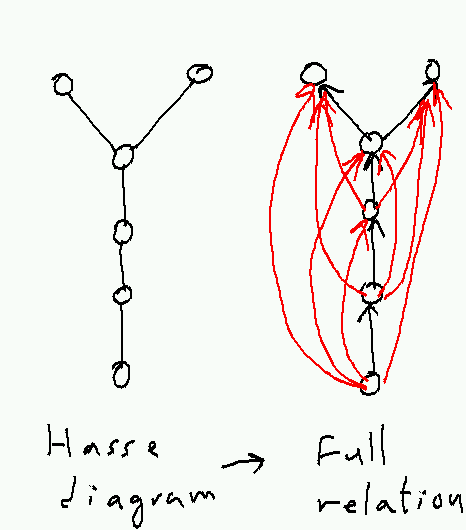

- Partial orders

- Closure

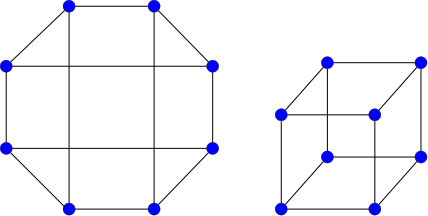

- GraphTheory

- Types of graphs

- Examples of graphs

- Graph terminology

- Some standard graphs

- Operations on graphs

- Paths and connectivity

- Cycles

- Proving things about graphs

- BipartiteGraphs

- Bipartite matching

- NumberTheory

- Divisibility and division

- Greatest common divisors

- The Fundamental Theorem of Arithmetic

- Modular arithmetic and residue classes

- DivisionAlgorithm

- ModularArithmetic

- ChineseRemainderTheorem

- GroupTheory

- Some common groups

- Arithmetic in groups

- Subgroups

- Homomorphisms and isomorphisms

- Cartesian products

- How to understand a group

- Subgroups, cosets, and quotients

- Homomorphisms, kernels, and the First Isomorphism Theorem

- Generators and relations

- Decomposition of abelian groups

- SymmetricGroup

- Why it's a group

- Cycle notation

- The role of the symmetric group

- Permutation types, conjugacy classes, and automorphisms

- Odd and even permutations

- AlgebraicStructures

- What algebras are

- Why we care

- Cheat sheet: axioms for algebras (and some not-quite algebras)

- Classification of algebras with a single binary operation (with perhaps some other operations sneaking in later)

- Operations on algebras

- Algebraic structures with more binary operations

- Polynomials

- Division of polynomials

- Divisors and greatest common divisors

- Factoring polynomials

- The ideal generated by an irreducible polynomial

- FiniteFields

- A magic trick

- Fields and rings

- Polynomials over a field

- Algebraic field extensions

- Applications

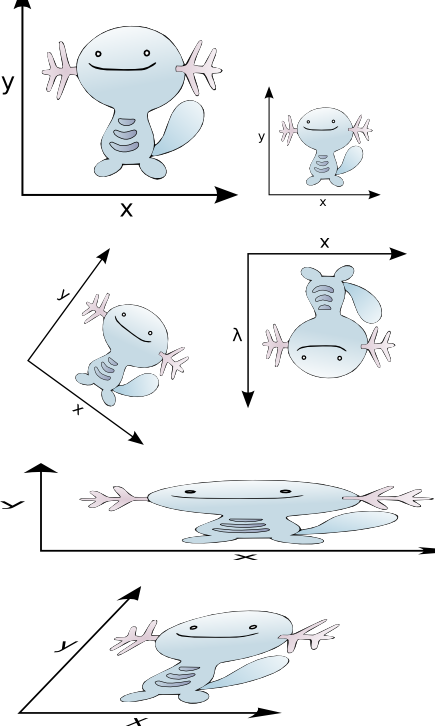

- LinearAlgebra

- Matrices

- Vectors

- Linear combinations and subspaces

- Linear transformations

- Further reading

1. WhyYouShouldKnowAboutMath

2. So why do I need to learn all this nasty mathematics?

Why you should know about mathematics, if you are interested in ComputerScience: or, more specifically, why you should take CS202 or a comparable course:

- Computation is something that you can't see and can't touch, and yet (thanks to the efforts of generations of hardware engineers) it obeys strict, well-defined rules with astonishing accuracy over long periods of time.

- Computations are too big for you to comprehend all at once. Imagine printing out an execution trace that showed every operation a typical $500 desktop computer executed in one (1) second. If you could read one operation per second, for eight hours every day, you would die of old age before you got halfway through. Now imagine letting the computer run overnight.

So in order to understand computations, we need a language that allows us to reason about things we can't see and can't touch, that are too big for us to understand, but that nonetheless follow strict, simple, well-defined rules. We'd like our reasoning to be consistent: any two people using the language should (barring errors) obtain the same conclusions from the same information. Computer scientists are good at inventing languages, so we could invent a new one for this particular purpose, but we don't have to: the exact same problem has been vexing philosophers, theologians, and mathematicians for much longer than computers have been around, and they've had a lot of time to think about how to make such a language work. Philosophers and theologians are still working on the consistency part, but mathematicians (mostly) got it in the early 20th century. Because the first virtue of a computer scientist is laziness, we are going to steal their code.

3. But isn't math hard?

Yes and no. The human brain is not really designed to do formal mathematical reasoning, which is why most mathematics was invented in the last few centuries and why even apparently simple things like learning how to count or add require years of training, usually done at an early age so the pain will be forgotten later. But mathematical reasoning is very close to legal reasoning, which we do seem to be unusually good at. There is very little structural difference between the sentences

- "If x is in S, then x+1 is in S." (1)

and

- "If x is of royal blood, then x's child is of royal blood." (2)

but because the first is about boring numbers and the second is about fascinating social relationships and rules, most people have a much easier time deducing that to show somebody is royal we need to start with some known royal and follow a chain of descendants than they have deducing that to show that some number is in the set S we need to start with some known element of S and show that repeatedly adding 1 gets us to the number we want. And yet to a logician these are the same processes of reasoning.

So why is statement (1) trickier to think about than statement (2)? Part of the difference is familiarity—we are all taught from an early age what it means to be somebody's child, to take on a particular social role, etc. For mathematical concepts, this familiarity comes with exposure and practice, just as with learning any other language. But part of the difference is that we humans are wired to understand and appreciate social and legal rules: we are very good at figuring out the implications of a (hypothetical) rule that says that any contract to sell a good to a consumer for $100 or more can be cancelled by the consumer within 72 hours of signing it provided the good has not yet been delivered, but we are not so good at figuring out the implications of a rule that says that a number is composite if and only if it is the product of two integer factors neither of which is 1. It's a lot easier to imagine having to cancel a contract to buy swampland in Florida that you signed last night while drunk than having to prove that 82 is composite. But again: there is nothing more natural about contracts than about numbers, and if anything the conditions for our contract to be breakable are more complicated than the conditions for a number to be composite.

4. Thinking about math with your heart

There are two things you need to be able to do to get good at mathematics (the creative kind that involves writing proofs, not the mechanical kind that involves grinding out answers according to formulas). One of them is to learn the language: to attain what mathematicians call mathematical maturity. You'll do that in CS202, if you pay attention. But the other is to learn how to activate the parts of your brain that are good at mathematical-style reasoning when you do math—the parts evolved to detect when the other primates in your primitive band of hunter-gatherers are cheating.

To do this it helps to get a little angry, and imagine that finishing a proof or unraveling a definition is the only thing that will stop your worst enemy from taking some valuable prize that you deserve. (If you don't have a worst enemy, there is always the UniversalQuantifier.) But whatever motivation you choose, you need to be fully engaged in what you are doing. Your brain is smart enough to know when you don't care about something, and if you don't believe that thinking about math is important, it will think about something else.

5. WhatYouShouldKnowAboutMath

List of things you should know about if you want to do ComputerScience.

6. Foundations and logic

Why: This is the assembly language of mathematics: the stuff at the bottom that everything else compiles to.

- Propositional logic.

- Predicate logic.

- Axioms, theories, and models.

- Proofs.

- Induction and recursion.

7. Fundamental mathematical objects

Why: These are the mathematical equivalent of data structures, the way that more complex objects are represented.

- Naive set theory.

- Predicates vs sets.

- Set operations.

- Set comprehension.

- Russell's paradox and axiomatic set theory.

- Functions.

- Functions as sets.

- Injections, surjections, and bijections.

- Cardinality.

- Finite vs infinite sets.

- Sequences.

- Relations.

- Equivalence relations, equivalence classes, and quotients.

- Orders.

- The basic number tower.

- Countable universes: ℕ, ℤ, ℚ. (Can be represented in a computer.)

- Uncountable universes: ℝ, ℂ. (Can only be approximated in a computer.)

- Other algebras.

- The string monoid.

- ℤ/m and ℤ/p.

- Polynomials over various rings and fields.

8. Modular arithmetic and polynomials

Why: Basis of modern cryptography.

- Arithmetic in ℤ/m.

- Primes and divisibility.

- Euclid's algorithm and inverses.

- The Chinese Remainder Theorem.

- Fermat's Little Theorem and Euler's Theorem.

- RSA encryption.

- Galois fields and applications.

9. Linear algebra

Why: Shows up everywhere.

- Vectors and matrices.

- Matrix operations and matrix algebra.

- Geometric interpretations.

- Inverse matrices and Gaussian elimination.

10. Graphs

Why: Good for modeling interactions. Basic tool for algorithm design.

- Definitions: graphs, digraphs, multigraphs, etc.

- Paths, connected components, and strongly-connected components.

- Special kinds of graphs: paths, cycles, trees, cliques, bipartite graphs.

- Subgraphs, induced subgraphs, minors.

11. Counting

Why: Basic tool for knowing how much resources your program is going to consume.

- Basic combinatorial counting: sums, products, exponents, differences, and quotients.

- Combinatorial functions.

- Factorials.

- Binomial coefficients.

- The 12-fold way.

- Advanced counting techniques.

- Inclusion-exclusion.

- Recurrences.

- Generating functions.

12. Probability

Why: Can't understand randomized algorithms or average-case analysis without it. Handy if you go to Vegas.

- Discrete probability spaces.

- Events.

- Independence.

- Random variables.

- Expectation and variance.

- Probabilistic inequalities.

- Markov's inequality.

- Chebyshev's inequality.

- Chernoff bounds.

- Stochastic processes.

- Markov chains.

- Martingales.

- Branching processes.

13. Tools

Why: Basic computational stuff that comes up, but doesn't fit in any of the broad categories above. These topics will probably end up being mixed in with the topics above.

These you will have seen before:

- How to differentiate and integrate simple functions.

- Things you may have forgotten about exponents and logarithms.

These may be somewhat new:

- Inequalities and approximations.

- ∑ and ∏ notation.

- Computing or approximating the value of a sum.

- Asymptotics.

14. PropositionalLogic

15. PredicateLogic

16. InferenceRules

17. ProofTechniques

18. SetTheory

Set theory is the dominant foundation for mathematics. The idea is that everything else in mathematics—numbers, functions, etc.—can be written in terms of sets, so that if you have a consistent description of how sets behave, then you have a consistent description of how everything built on top of them behaves. If predicate logic is the machine code of mathematics, set theory would be assembly language.

19. Naive set theory

Naive set theory is the informal version of set theory that corresponds to our intuitions about sets as unordered collections of objects (called elements) with no duplicates. A set can be written explicitly by listing its elements using curly braces:

- { } = the empty set ∅, which has no elements.

- { Moe, Curly, Larry } = the Three Stooges.

- { 0, 1, 2, ... } = ℕ, the natural numbers. Note that we are relying on the reader guessing correctly how to continue the sequence here.

- { { }, { 0 }, { 1 }, { 0, 1 }, { 0, 1, 2 }, 7 } = a set of sets of natural numbers, plus a stray natural number that is directly an element of the outer set.

Membership in a set is written using the ∈ symbol (pronounced "is an element of" or "is in"). So we can write Moe ∈ The Three Stooges or 4 ∈ ℕ. We can also write ∉ for "is not an element of", as in Moe ∉ ℕ.

A fundamental axiom in set theory is that the only distinguishing property of a set is its list of members: if two sets have the same members, they are the same set.

For nested sets like { { 1 } }, ∈ represents only direct membership: the set { { 1 } } only has one element, { 1 }, so 1 ∉ { { 1 } }. This can be confusing if you think of ∈ as representing the English "is in," because if I put my lunch in my lunchbox and put my lunchbox in my backpack, then my lunch is in my backpack. But my lunch is not an element of { { my lunch }, my textbook, my slingshot }. In general, ∈ is not transitive (see Relations)—it doesn't behave like < unless there is something very unusual about the set you are applying it to—and there is no particular notation for being a deeply-buried element of an element of an element (etc.) of some set.

In addition to listing the elements of a set explicitly, we can also define a set by set comprehension, where we give a rule for how to generate all of its elements. This is pretty much the only way to define an infinite set without relying on guessing, but can be used for sets of any size. Set comprehension is usually written using set-builder notation, as in the following examples:

- { x | x∈ℕ ∧ x > 1 ∧ ∀y∈ℕ ∀z∈ℕ yz = x ⇒ y = 1 ∨ z = 1 } = the prime numbers.

- { 2x | x∈ℕ } = the even numbers.

- { x | x∈ℕ ∧ x < 12 } = { 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11 }.
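Set-builder notation carries over almost directly to set comprehensions in a programming language. The following is a minimal Python sketch of the three examples above; since a program cannot enumerate all of ℕ, it assumes an arbitrary cutoff (the name `LIMIT` is invented for this illustration).

```python
# Finite stand-in for the natural numbers: the cutoff is an assumption
# of this sketch, not part of the mathematical definitions.
LIMIT = 50
N = range(LIMIT)

# { x | x∈ℕ ∧ x > 1 ∧ ∀y∈ℕ ∀z∈ℕ yz = x ⇒ y = 1 ∨ z = 1 }
primes = {
    x for x in N
    if x > 1 and all(y == 1 or z == 1 for y in N for z in N if y * z == x)
}

# { 2x | x∈ℕ }, keeping only the values below LIMIT
evens = {2 * x for x in N if 2 * x < LIMIT}

# { x | x∈ℕ ∧ x < 12 }
below_12 = {x for x in N if x < 12}

print(sorted(primes))
print(sorted(below_12))
```

The prime test is a literal transcription of the quantified formula, not an efficient algorithm.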

Sometimes the original set that an element has to be drawn from is put on the left-hand side of the pipe:

{ n∈ℕ | ∃x,y,z∈ℕ x > 0 ∧ y > 0 ∧ xⁿ + yⁿ = zⁿ }. (This is a fancy name for the two-element set { 1, 2 }; see Fermat's Last Theorem.)

Using set comprehension, we can see that every set in naive set theory is equivalent to some predicate. Given a set S, the corresponding predicate is x∈S, and given a predicate P, the corresponding set is { x | Px }. But beware of Russell's paradox: what is { S | S∉S }?

20. Operations on sets

If we think of sets as representing predicates, each logical connective gives rise to a corresponding operation on sets:

- A∪B = { x | x∈A ∨ x∈B }. The union of A and B.

- A∩B = { x | x∈A ∧ x∈B }. The intersection of A and B.

- A∖B = { x | x∈A ∧ x∉B }. The set difference of A and B.

- A∆B = { x | x∈A ⊕ x∈B }. The symmetric difference of A and B.

(Of these, union and intersection are the most important in practice.)
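As a quick illustration, Python's built-in `set` type provides all four operations as operators. The sketch below uses two small example sets (chosen arbitrarily) and checks each operator against the logical connective it corresponds to.

```python
A = {0, 1, 2, 3}
B = {2, 3, 4}

union = A | B                  # A∪B: x∈A ∨ x∈B
intersection = A & B           # A∩B: x∈A ∧ x∈B
difference = A - B             # A∖B: x∈A ∧ x∉B
symmetric_difference = A ^ B   # A∆B: x∈A ⊕ x∈B

# Each operator agrees with the comprehension built from its connective.
candidates = A | B
assert union == {x for x in candidates if x in A or x in B}
assert intersection == {x for x in candidates if x in A and x in B}
assert difference == {x for x in candidates if x in A and x not in B}
assert symmetric_difference == {x for x in candidates if (x in A) != (x in B)}

print(union, intersection, difference, symmetric_difference)
```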

Corresponding to implication is the notion of a subset:

- A⊆B ("A is a subset of B") if and only if ∀x x∈A ⇒ x∈B.

Sometimes one says A is contained in B if A⊆B. This is one of two senses in which A can be "in" B—it is also possible that A is in fact an element of B (A∈B). For example, the set A = { 12 } is an element of the set B = { Moe, Larry, Curly, { 12 } } but A is not a subset of B, because A's element 12 is not an element of B. Usually we will try to reserve "is in" for ∈ and "is contained in" for ⊆, but it's safest to use the symbols to avoid any possibility of ambiguity.
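The difference between ∈ and ⊆ can be checked mechanically. In this Python sketch, `frozenset` is used for the inner set only because Python requires set elements to be hashable; that is a detail of the language, not of set theory.

```python
A = frozenset({12})
B = {"Moe", "Larry", "Curly", A}

is_element = A in B   # A∈B: A itself is one of the members of B
is_subset = A <= B    # A⊆B: every element of A is also an element of B

# Membership is direct only: 1 is not an element of { { 1 } }.
nested = {frozenset({1})}
one_is_element = 1 in nested

print(is_element, is_subset, one_is_element)
```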

Finally we have the set-theoretic equivalent of negation:

Ā = { x | x∉A }. The set Ā is known as the complement of A.

If we allow complements, we are necessarily working inside some fixed universe: the complement of the empty set contains all possible objects. This raises the issue of where the universe comes from. One approach is to assume that we've already fixed some universe that we understand (e.g. ℕ), but then we run into trouble if we want to work with different classes of objects at the same time. Modern set theory is defined in terms of a collection of axioms that allow us to construct, essentially from scratch, a universe big enough to hold all of mathematics without apparent contradictions while avoiding the paradoxes that may arise in naive set theory.

21. Axiomatic set theory

The problem with naive set theory is that unrestricted set comprehension is too strong, leading to contradictions. Axiomatic set theory fixes this problem by being more restrictive about what sets one can form. The axioms most commonly used are known as Zermelo-Fraenkel set theory with choice or ZFC. The page AxiomaticSetTheory covers these axioms in painful detail, but in practice you mostly just need to know what constructions you can get away with.

The short version is that you can construct sets by (a) listing their members, (b) taking the union of other sets, or (c) using some predicate to pick out elements or subsets of some set. The starting points for this process are the empty set ∅ and the set ℕ of all natural numbers (suitably encoded as sets). If you can't construct a set in this way (like the Russell's Paradox set), odds are that it isn't a set.

These properties follow from the more useful axioms of ZFC:

- Extensionality

- Any two sets with the same members are equal.

- Existence

- ∅ is a set.

- Pairing

- For any given list of sets x, y, z, ..., { x, y, z, ... } is a set. (Strictly speaking, pairing only gives the existence of { x, y }, and the more general result requires the next axiom as well.)

- Union

- For any given set of sets { x, y, z, ... }, the set x ∪ y ∪ z ∪ ... exists.

- Power set

- For any set S, the power set ℘(S) = { A | A ⊆ S } exists.

- Specification

- For any set S and any predicate P, the set { x∈S | P(x) } exists. This is called restricted comprehension. Limiting ourselves to constructing subsets of existing sets avoids Russell's Paradox, because we can't construct S = { x | x ∉ x }. Instead, we can try to construct S = { x∈T | x∉x }, but we'll find that S isn't an element of T, so it doesn't contain itself without creating a contradiction.

- Infinity

- ℕ exists, where ℕ is defined as the set containing ∅ and containing x∪{x} whenever it contains x. Here ∅ represents 0 and x∪{x} represents the successor operation. This effectively defines each number as the set of all smaller numbers, e.g. 3 = { 0, 1, 2 } = { ∅, { ∅ }, { ∅, { ∅ } } }. Without this axiom, we only get finite sets.
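The encoding of numbers described under Infinity can be built explicitly for small cases. This is a minimal Python sketch using `frozenset`; the function names `successor` and `numeral` are invented for the illustration.

```python
def successor(x: frozenset) -> frozenset:
    """Return x ∪ {x}, the set-theoretic successor of x."""
    return x | frozenset({x})

def numeral(n: int) -> frozenset:
    """Build the set that represents the natural number n."""
    result = frozenset()          # ∅ represents 0
    for _ in range(n):
        result = successor(result)
    return result

three = numeral(3)
# 3 = { 0, 1, 2 }: the set of all smaller numbers.
print(three == frozenset({numeral(0), numeral(1), numeral(2)}))
print(len(three))
```

With this encoding the number of elements of `numeral(n)` is n, and m < n exactly when `numeral(m)` is an element of `numeral(n)`.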

There are three other axioms that don't come up much in computer science:

- Foundation

- Every nonempty set A contains a set B with A∩B=∅. This rather technical axiom prevents various weird sets, such as sets that contain themselves or infinite descending chains A₀ ∋ A₁ ∋ A₂ ∋ ... .

- Replacement

- If S is a set, and R(x,y) is a predicate with the property that ∀x ∃!y R(x,y), then { y | ∃x∈S R(x,y) } is a set. Mostly used to construct astonishingly huge infinite sets.

- Choice

- For any set of nonempty sets S there is a function f that assigns to each x in S some f(x) ∈ x.

22. Cartesian products, relations, and functions

Sets are unordered: the set { a, b } is the same as the set { b, a }. Sometimes it is useful to consider ordered pairs (a, b), where we can tell which element comes first and which comes second. These can be encoded as sets using the rule (a, b) = { {a}, {a, b} }.
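To see that this encoding really does remember the order, here is a small Python sketch. The helper name `kuratowski_pair` is invented for the illustration, and `frozenset` stands in for mathematical sets.

```python
def kuratowski_pair(a, b) -> frozenset:
    """Encode the ordered pair (a, b) as the set { {a}, {a, b} }."""
    return frozenset({frozenset({a}), frozenset({a, b})})

# Plain sets ignore order...
print({1, 2} == {2, 1})
# ...but the encoded pairs do not.
print(kuratowski_pair(1, 2) == kuratowski_pair(2, 1))
# When both coordinates agree, the encoding collapses to { {a} }.
print(kuratowski_pair(1, 1) == frozenset({frozenset({1})}))
```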

Given sets a and b, their Cartesian product a × b is the set { (x,y) | x ∈ a ∧ y ∈ b }, or in other words the set of all ordered pairs that can be constructed by taking the first element from a and the second from b. If a has n elements and b has m, then a × b has nm elements. For example, { 1, 2 } × { 3, 4 } = { (1,3), (1,4), (2,3), (2,4) }.

Because of the ordering, Cartesian product is not commutative in general. We usually have A×B ≠ B×A (exercise: when are they equal?).
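The example product above can be reproduced with a short Python sketch. It uses `itertools.product` from the standard library and Python tuples as ordered pairs; the helper name `cartesian` is invented for the illustration.

```python
from itertools import product

def cartesian(a, b):
    """Return a × b as a set of ordered pairs (Python tuples)."""
    return {(x, y) for x, y in product(a, b)}

a = {1, 2}
b = {3, 4}

print(sorted(cartesian(a, b)))
print(len(cartesian(a, b)) == len(a) * len(b))   # n·m pairs
print(cartesian(a, b) == cartesian(b, a))        # order matters here
```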

The existence of the Cartesian product of any two sets can be proved using the axioms we already have: if (x,y) is defined as { {x}, {x,y} }, then ℘(a ∪ b) contains all the necessary sets {x} and {x,y}, and ℘℘(a ∪ b) contains all the pairs { {x}, {x,y} }. It also contains a lot of other sets we don't want, but we can get rid of them using Specification.

A subset of the Cartesian product of two sets is called a relation. An example would be the < relation on the natural numbers: { (0, 1), (0, 2), ...; (1, 2), (1, 3), ...; (2, 3), ... }. Just as sets can act like predicates of one argument (where Px corresponds to x ∈ P), relations act like predicates of two arguments. Relations are often written between their arguments, so xRy is shorthand for (x,y) ∈ R.

A special class of relations are functions. A function from a domain A to a codomain (or range) B is a relation on A and B (i.e., a subset of A × B) such that every element of A appears on the left-hand side of exactly one ordered pair. We write f: A→B as a short way of saying that f is a function from A to B, and for each x ∈ A write f(x) for the unique y ∈ B with (x,y) ∈ f. (Technically, knowing f alone does not tell you what the codomain is, since some elements of B may not show up at all; this can be fixed by representing a function as a pair (f,B), but it's generally not useful unless you are doing CategoryTheory.) Most of the time a function is specified by giving a rule for computing f(x), e.g. f(x) = x².

Functions let us define sequences of arbitrary length: for example, the infinite sequence x₀, x₁, x₂, ... of elements of some set A is represented by a function x:ℕ→A, while a shorter sequence (a₀, a₁, a₂) would be represented by a function a:{0,1,2}→A. In both cases the subscript takes the place of a function argument: we treat xₙ as syntactic sugar for x(n). Finite sequences are often called tuples, and we think of the result of taking the Cartesian product of a finite number of sets A×B×C as a set of tuples (a,b,c) (even though the actual structure may be ((a,b),c) or (a,(b,c)) depending on which product operation we do first).

A function f:A→B that covers every element of B is called onto, surjective, or a surjection. If it maps distinct elements of A to distinct elements of B (i.e., if x≠y implies f(x)≠f(y)), it is called one-to-one, injective, or an injection. A function that is both surjective and injective is called a one-to-one correspondence, bijective, or a bijection. (The terms onto, one-to-one, and bijection are probably the most common, although injective and surjective are often used as well, as they avoid the confusion between one-to-one and one-to-one correspondence.) Any bijection f has an inverse f⁻¹; this is the function { (y,x) | (x,y) ∈ f }. Two functions f:A→B and g:B→C can be composed to give a composition g∘f; g∘f is a function from A to C defined by (g∘f)(x) = g(f(x)).
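These definitions can be tested directly when functions are stored the way set theory stores them, as sets of ordered pairs. The following Python sketch does this for small finite sets; all helper names and the example sets are invented for the illustration.

```python
def is_function(f, A, B):
    """True if f ⊆ A×B and each element of A is the left side of exactly one pair."""
    pairs_in_product = all(x in A and y in B for x, y in f)
    exactly_one = all(sum(1 for x, _ in f if x == a) == 1 for a in A)
    return pairs_in_product and exactly_one

def is_injective(f):
    """Distinct inputs give distinct outputs (assumes f is a function)."""
    outputs = [y for _, y in f]
    return len(set(outputs)) == len(outputs)

def is_surjective(f, B):
    """Every element of B appears as an output."""
    return {y for _, y in f} == set(B)

def inverse(f):
    """{ (y,x) | (x,y) ∈ f }"""
    return {(y, x) for x, y in f}

def compose(g, f):
    """g∘f: apply f first, then g."""
    return {(x, z) for x, y in f for y2, z in g if y == y2}

A = {0, 1, 2}
B = {"a", "b", "c"}
C = {10, 20}
f = {(0, "a"), (1, "b"), (2, "c")}      # a bijection from A to B
g = {("a", 10), ("b", 20), ("c", 10)}   # onto C, but not one-to-one

print(is_function(inverse(f), B, A))    # the inverse of a bijection is a function
print(sorted(compose(g, f)))
```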

Bijections let us define the size of arbitrary sets without having some special means to count elements. We say two sets A and B have the same size or cardinality if there exists a bijection f:A↔B. We can also define |A| formally as the (unique) smallest ordinal B such that there exists a bijection f:A↔B. This is exactly what we do when we do counting: to know that there are 3 stooges, we count them off 0 → Moe, 1 → Larry, 2 → Curly, giving a bijection between the set of stooges and 3 = { 0, 1, 2 }.

More on functions and relations can be found on the pages Functions and Relations.

23. Constructing the universe

With power set, Cartesian product, the notion of a sequence, etc., we can construct all of the standard objects of mathematics. For example:

- Integers

- The integers are the set ℤ = { ..., -2, -1, 0, 1, 2, ... }. We represent each integer z as an ordered pair (x,y), where x=0 ∨ y=0; formally, ℤ = { (x,y) ∈ ℕ×ℕ | x=0 ∨ y=0 }. The interpretation of (x,y) is x-y; so positive integers z are represented as (z,0) while negative integers are represented as (0,-z). It's not hard to define addition, subtraction, multiplication, etc. using this representation.

- Rationals

- The rational numbers ℚ are all fractions of the form p/q where p is an integer, q is a natural number not equal to 0, and p and q have no common factors.

- Reals

- The real numbers ℝ can be defined in a number of ways, all of which turn out to be equivalent. The simplest to describe is that a real number x is represented by the set { y∈ℚ | y≤x }. Formally, we consider any subset x of ℚ with the property y∈x ∧ z<y ⇒ z∈x to be a distinct real number (this is known as a Dedekind cut). Note that real numbers in this representation may be hard to write down.
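As one possible way to carry out the arithmetic promised for the integer representation above, here is a Python sketch that works only with pairs of natural numbers. The `normalize` step and all function names are assumptions of this sketch; `encode` and `decode` use Python's built-in integers purely to check the results.

```python
def normalize(x, y):
    """Subtract the smaller coordinate from both so that x=0 or y=0."""
    m = min(x, y)
    return (x - m, y - m)

def add(p, q):
    """(a-b) + (c-d) = (a+c) - (b+d)"""
    return normalize(p[0] + q[0], p[1] + q[1])

def negate(p):
    """-(a-b) = b-a"""
    return (p[1], p[0])

def multiply(p, q):
    """(a-b)(c-d) = (ac+bd) - (ad+bc)"""
    (a, b), (c, d) = p, q
    return normalize(a * c + b * d, a * d + b * c)

def encode(z):
    """Python int to pair: z ≥ 0 becomes (z,0), z < 0 becomes (0,-z)."""
    return (z, 0) if z >= 0 else (0, -z)

def decode(p):
    """Pair to Python int: (x,y) is interpreted as x-y."""
    return p[0] - p[1]

print(add(encode(3), encode(-5)))         # the pair representing -2
print(multiply(encode(-3), encode(-4)))   # the pair representing 12
```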

We can also represent standard objects of computer science:

- Deterministic finite state machines

- A deterministic finite state machine is a tuple (Σ,Q,q₀,δ,Q_accept) where Σ is an alphabet (some finite set), Q is a state space (another finite set), q₀∈Q is an initial state, δ:Q×Σ→Q is a transition function specifying which state to move to when processing some symbol in Σ, and Q_accept⊆Q is the set of accepting states. If we represent symbols and states as natural numbers, the set of all deterministic finite state machines is then just a subset of ℘ℕ×℘ℕ×ℕ×ℕ^(ℕ×ℕ)×℘ℕ satisfying some consistency constraints, where ℕ^(ℕ×ℕ) is the set of functions from ℕ×ℕ to ℕ.
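The tuple definition translates almost word for word into a data structure. The sketch below represents a machine as a Python 5-tuple with the transition function stored as a dictionary; the example machine, which accepts binary strings containing an even number of 1s, is invented for this illustration.

```python
def accepts(machine, word):
    """Run a machine (Σ, Q, q0, δ, Q_accept) on word; report whether it ends in an accepting state."""
    sigma, states, q0, delta, accepting = machine
    state = q0
    for symbol in word:
        if symbol not in sigma:
            raise ValueError(f"symbol {symbol!r} is not in the alphabet")
        state = delta[(state, symbol)]   # δ: Q×Σ→Q
    return state in accepting

# Hypothetical example: state 0 = even number of 1s so far, state 1 = odd.
even_ones = (
    {"0", "1"},                          # Σ
    {0, 1},                              # Q
    0,                                   # q0
    {(0, "0"): 0, (0, "1"): 1,
     (1, "0"): 1, (1, "1"): 0},          # δ
    {0},                                 # Q_accept
)

print(accepts(even_ones, "1001"))
print(accepts(even_ones, "1011"))
```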

24. Sizes and arithmetic

We can compute the size of a set by explicitly counting its elements; for example, |∅| = 0, | { Larry, Moe, Curly } | = 3, and | { x∈ℕ | x < 100 ∧ x is prime } | = 25. But sometimes it is easier to compute sizes by doing arithmetic. We can do this because many operations on sets correspond in a natural way to arithmetic operations on their sizes. (For much more on this, see HowToCount.)

Two sets A and B that have no elements in common are said to be disjoint; in set-theoretic notation, this means A∩B = ∅. In this case we have |A∪B| = |A|+|B|. The operation of disjoint union acts like addition for sets. For example, the disjoint union of the 2-element set { 0, 1 } and the 3-element set { Wakko, Yakko, Dot } is the 5-element set { 0, 1, Wakko, Yakko, Dot }.

The size of a Cartesian product is obtained by multiplication: |A×B| = |A|⋅|B|. An example would be the product of the 2-element set { a, b } with the 3-element set { 0, 1, 2 }: this gives the 6-element set { (a,0), (a,1), (a,2), (b,0), (b,1), (b,2) }. Even though Cartesian product is not generally commutative, since ordinary natural number multiplication is, we always have |A×B| = |B×A|.

For power set, it is not hard to show that |℘(S)| = 2^|S|. This is a special case of the size of A^B, the set of all functions from B to A, which is |A|^|B|; for the power set we can encode ℘(S) using 2^S, where 2 is the special set {0,1}.
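For small finite sets these size rules can be confirmed by brute-force enumeration. The Python sketch below uses `itertools` from the standard library; the helper names `power_set` and `functions` are invented for the illustration, and functions are represented as dictionaries.

```python
from itertools import combinations, product

def power_set(s):
    """All subsets of s, as a set of frozensets."""
    items = list(s)
    return {frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)}

def functions(B, A):
    """All functions from B to A, each represented as a dict."""
    B_items, A_items = list(B), list(A)
    return [dict(zip(B_items, outputs))
            for outputs in product(A_items, repeat=len(B_items))]

A = {"a", "b"}
B = {0, 1, 2}
assert A.isdisjoint(B)

disjoint_union_size = len(A | B)         # |A∪B| = |A|+|B|
product_size = len(set(product(A, B)))   # |A×B| = |A|⋅|B|
power_set_size = len(power_set(B))       # |℘(B)| = 2^|B|
function_count = len(functions(B, A))    # |A^B| = |A|^|B|

# Encoding ℘(B) as 2^B: each g: B→{0,1} picks out the subset { x | g(x) = 1 }.
decoded = {frozenset(x for x in B if g[x] == 1) for g in functions(B, {0, 1})}

print(disjoint_union_size, product_size, power_set_size, function_count)
print(decoded == power_set(B))
```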

24.1. Infinite sets

For infinite sets, we take the above properties as definitions of addition, multiplication, and exponentiation of their sizes. The resulting system is known as cardinal arithmetic, and the sizes that sets (finite or infinite) might have are known as cardinal numbers.

The finite cardinal numbers are just the natural numbers: 0, 1, 2, 3, ... . The first infinite cardinal number is the size of the set of natural numbers, and is written as ℵ₀ ("aleph-zero," "aleph-null," or "aleph-nought"). The next infinite cardinal number is ℵ₁ ("aleph-one"): it might or might not be the size of the set of real numbers, depending on whether you include the Continuum Hypothesis in your axiom system or not.

Infinite cardinals can behave very strangely. For example:

- ℵ₀+ℵ₀=ℵ₀. In other words, it is possible to have two sets A and B that both have the same size as ℕ, take their disjoint union, and get another set A+B that has the same size as ℕ. To give a specific example, let A = { 2x | x∈ℕ } and B = { 2x+1 | x∈ℕ }. These have |A|=|B|=|ℕ| because there is a bijection between each of them and ℕ built directly into their definitions. It's also not hard to see that A and B are disjoint, and A∪B = ℕ. So |A|=|B|=|A|+|B| in this case.

- ℵ₀⋅ℵ₀=ℵ₀. Example: A bijection between ℕ×ℕ and ℕ using the Cantor pairing function <x,y> = (x+y+1)(x+y)/2 + y. The first few values of this are <0,0> = 0, <1,0> = 2⋅1/2+0 = 1, <0,1> = 2⋅1/2+1 = 2, <2,0> = 3⋅2/2 + 0 = 3, <1,1> = 3⋅2/2 + 1 = 4, <0,2> = 3⋅2/2 + 2 = 5, etc. The basic idea is to order all the pairs by increasing x+y, and then order pairs with the same value of x+y by increasing y; eventually every pair is reached.

- ℕ* = { all finite sequences of elements of ℕ } has size ℵ₀. One way to do this is to define a function recursively by setting f([]) = 0 and f([first, rest]) = 1+<first,f(rest)>, where first is the first element of the sequence and rest is all the other elements. In class, we did the example f(0,1,2) = 1+<0,f(1,2)> = 1+<0,1+<1,f(2)>> = 1+<0,1+<1,1+<2,0>>> = 1+<0,1+<1,1+3>> = 1+<0,1+<1,4>> = 1+<0,1+19> = 1+<0,20> = 1+230 = 231. This assigns a unique element of ℕ to each finite sequence, which is enough to show |ℕ*| ≤ |ℕ|; in fact, with some effort one can show that f is a bijection.
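Both the pairing function and the recursive sequence encoding are easy to compute, which makes the worked values above simple to double-check. This is a minimal Python sketch; the names `pair` and `encode` are invented for the illustration.

```python
def pair(x, y):
    """Cantor pairing function <x,y> = (x+y+1)(x+y)/2 + y."""
    return (x + y + 1) * (x + y) // 2 + y

def encode(seq):
    """f([]) = 0 and f([first, rest]) = 1 + <first, f(rest)>."""
    if not seq:
        return 0
    first, *rest = seq
    return 1 + pair(first, encode(rest))

# Pairs listed by increasing x+y, then by increasing y.
print([pair(x, y) for x, y in [(0, 0), (1, 0), (0, 1), (2, 0), (1, 1), (0, 2)]])
print(encode([0, 1, 2]))
```

Checking that `pair` takes distinct values on a finite grid is evidence for, but of course not a proof of, the claim that it is a bijection.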

24.2. Countable sets

All of these sets have the property of being countable, which means that they can be put into a bijection with ℕ or one of its subsets. The general principle is that any sum or product of infinite cardinal numbers turns into taking the maximum of its arguments. The last case implies that anything you can write down using finitely many symbols (even if they are drawn from an infinite but countable alphabet) is countable. This has a lot of applications in computer science: one of them is that the set of all computer programs in any particular programming language is countable.

24.3. Uncountable sets

Exponentiation is different. We can easily show that 2^ℵ₀ ≠ ℵ₀, or equivalently that there is no bijection between ℘ℕ and ℕ. This is done using Cantor's diagonalization argument.

- Theorem

- Let S be any set. Then there is no surjection f:S→℘S.

- Proof

- Let f:S→℘S be some function from S to subsets of S. We'll construct a subset of S that f misses. Let A = { x∈S | x∉f(x) }. Suppose A = f(y). Then y∈A ↔ y∉A, a contradiction. (Exercise: Why does A exist even though the Russell's Paradox set doesn't?)
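The theorem holds for finite sets too, where it can be checked exhaustively. The Python sketch below takes a three-element S, enumerates every function f:S→℘S, and confirms that the diagonal set A from the proof is never in the image of f. All names are invented for the illustration, and the exhaustive check is a sanity test of the construction, not a substitute for the proof.

```python
from itertools import combinations, product

def power_set(s):
    """All subsets of s, as a list of frozensets."""
    items = list(s)
    return [frozenset(c) for r in range(len(items) + 1)
            for c in combinations(items, r)]

def diagonal_set(S, f):
    """A = { x∈S | x∉f(x) }, where f is a dict from S to subsets of S."""
    return frozenset(x for x in S if x not in f[x])

S = [0, 1, 2]
subsets = power_set(S)

checked = 0
missed_every_time = True
for images in product(subsets, repeat=len(S)):   # one choice of f(x) for each x
    f = dict(zip(S, images))
    if diagonal_set(S, f) in images:             # would mean A = f(y) for some y
        missed_every_time = False
    checked += 1

print(checked, missed_every_time)
```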

Since any bijection is also a surjection, this means that there's no bijection between S and ℘S either, implying, for example, that |ℕ| is strictly less than |℘ℕ|.

(On the other hand, it is the case that |ℕ^ℕ| = |2^ℕ|, so things are still weird up here.)

Sets that are larger than ℕ are called uncountable. A quick way to show that there is no surjection from A to B is to show that A is countable but B is uncountable. For example:

- Corollary

- There are functions f:ℕ→{0,1} that are not computed by any computer program.

- Proof

Let P be the set of all computer programs that take a natural number as input and always produce 0 or 1 as output (assume some fixed language), and for each program p ∈ P, let f_p be the function that p computes. We've already argued that P is countable (each program is a finite sequence drawn from a countable alphabet), and since the set of all functions f:ℕ→{0,1} = 2^ℕ has the same size as ℘ℕ, it's uncountable. So some f gets missed: there is at least one function from ℕ to {0,1} that is not equal to f_p for any program p.

The fact that there are more functions from ℕ to ℕ than there are elements of ℕ is one of the reasons why set theory (slogan: "everything is a set") beat out lambda calculus (slogan: "everything is a function from functions to functions") in the battle over the foundations of mathematics. And this is why we do set theory in CS202 and lambda calculus gets put in CS201.

25. Further reading

See RosenBook §§2.1–2.2, BiggsBook Chapter 2, or Naive set theory.

26. NaturalNumbers

The natural numbers are the set ℕ = { 0, 1, 2, 3, .... }. These correspond to all possible sizes of finite sets; in a sense, the natural numbers are precisely those numbers that occur in nature: one can have a field with 0, 1, 2, 3, etc. sheep in it, but it's hard to have a field with -12 or 22/7 sheep in it.

Warning: While this definition of ℕ is almost universally used in computer science, and is used in RosenBook, some mathematicians (including the author of BiggsBook) leave zero out of the natural numbers. There are several possible reasons why you might do this:

- You were born well before the invention of zero approximately two millennia back by the ancient Indians, Babylonians, and/or Mayans.

- You were taught by extremely conservative schoolmasters who still hadn't got a handle on this newfangled zero thing.

- You live in a country that is so rich in sheep that the thought of a field with no sheep in it seems unnatural.

- You are a number theorist, and you don't want to have to follow "Let n be a natural number..." with "(except zero)" in every theorem you write.

My suspicion is that Biggs falls into the last category (and might fall into some of the earlier ones). For the purposes of CS202 we will adopt the usual convention in ComputerScience and start the naturals at zero. However, you should keep an eye out for assumptions that the natural numbers don't include zero. The terms positive integers (for {1, 2, 3, ...}) and non-negative integers (for {0, 1, 2, 3, ...}) can also be helpful for avoiding confusion.

27. Axioms

There are several different ways to define the naturals. That these definitions all yield the same object is one of the reasons why they are so natural.

27.1. Peano axioms

The PeanoAxioms define the natural numbers directly from logic. It is not hard to show that the usual natural numbers satisfy these axioms (whether or not you throw out zero). Unfortunately, the Peano axioms by themselves don't give us many of the usual operations (like addition and multiplication) that we expect to be able to do to numbers.

27.2. Set-theoretic definition

In SetTheory, a natural number is defined as a finite1 set x such that (a) every element y of x is also a subset of x, and (b) every element y of x is also a natural number. The existence of the set of natural numbers is asserted by the Axiom of Infinity. The smallest natural number is the empty set, which we take as representing 0. Next is 1 = { 0 }, 2 = { 0, 1 }, 3 = { 0, 1, 2 }, etc. It can easily be verified that each natural number defined in this way is indeed a subset of the next. It's not hard to show that these set-theoretic natural numbers satisfy the Peano axioms, and it's possible to define addition and multiplication operations on them that satisfy the arithmetic and order axioms below (we'll see more of this in HowToCount). Ordering is by inclusion: for the set-theoretic definition, n < m just in case n is an element of m.

27.3. Arithmetic and order axioms

Axioms for arithmetic in ℕ-{0} are given in Section 4.1 of BiggsBook. RosenBook doesn't spend much time on axiomatizing the naturals, relying instead on the deep intuition about natural numbers that most of us had trained into us from early childhood.

You've probably already seen all of the usual axioms early in your mathematical education, with the possible exception of mz = nz implies m = n. Note that unlike the other axioms, this one does not extend to ℕ, since m⋅0 = n⋅0 = 0 for any m and n. An extended list of axioms for ℕ including zero might look like

1. a+b is in ℕ. [Closure under addition]

2. a⋅b is in ℕ. [Closure under multiplication]

3. a+b = b+a. [Commutativity of addition]

4. (a+b)+c = a+(b+c). [Associativity of addition]

5. ab = ba. [Commutativity of multiplication]

6. (ab)c = a(bc). [Associativity of multiplication]

7. There is an element 1 of ℕ such that n1 = n for all n. [Multiplicative identity]

8. mz = nz implies m = n when z ≠ 0 (see below for 0). [Multiplicative cancellation]

9. a(b+c) = ab+ac. [Distributivity of multiplication over addition]

10. For any m and n, exactly one of n < m, n = m, or n > m is true. [Trichotomy]

11. There is an element 0 of ℕ such that n+0 = n for all n. [Additive identity]

12. 0a = 0. [Multiplicative annihilator]

The numbering follows BiggsBook, except for the last two axioms, which don't appear in BiggsBook outside of Exercise 4.1.3.

Note that < is defined in terms of +. In our terms, n < m means that there exists some x such that x is not equal to 0 and n+x = m.

One problem with these axioms (as compared to the Peano axioms) is that they are not very restrictive: they work equally well for e.g. the non-negative reals or the non-negative rationals, and extend to all of the reals or the rationals if we adjust the definition of <. So the arithmetic axioms can't be used as a definition of the naturals, even though they are much more convenient for doing actual arithmetic than the more basic definitions.

Much of the work in logic in the early 20th century involved showing that addition, multiplication, etc. could be defined in terms of much more primitive operations like successor, and that the operations so defined behaved the way we'd expect. This program was not always popular with non-logicians: as Henri Poincaré put it (quoted in Bell and Machover's A Course in Mathematical Logic, North-Holland, 1977): "On the contrary, I find nothing in logistic for the discoverer but shackles. It does not help us at all in the direction of conciseness, far from it: and if it requires 27 equations to establish that 1 is a number, how many will it require to demonstrate a real theorem?" Thankfully, we get to build on these efforts, and can treat the arithmetic axioms as our own convenient library of lemmas rather than having to reach down into the raw logical swamps.

27.3.1. Formal definition of arithmetic operations in terms of successor

Here's a formal definition of + in terms of successor:

- ∀x 0+x = x.

- ∀x ∀y Sx+y = x+Sy.

This defines the sum of two natural numbers uniquely because we can use the second rule to move all the S's off of the first addend onto the second one, until we get down to zero (recall that under the PeanoAxioms a natural number like 37 is really just a convenient shorthand for SSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSSS0).

Here's multiplication:

- ∀x 0x = 0.

- ∀x ∀y (Sx)y = xy + y.

With the above rules for addition, this lets us multiply any two numbers very slowly: SS0⋅SS0 = (S0⋅SS0) + SS0 = ((0⋅SS0) + SS0) + SS0 = (0 + SS0) + SS0 = SS0 + SS0 = S0 + SSS0 = 0 + SSSS0 = SSSS0. Normal people write this as 2⋅2 = 4 and skip all the S's.

Here's <:

x < y ⇔ ∃z (z ≠ 0 ∧ x+z = y).

Note that this fails badly if z ranges over a larger set, like the integers.

With enough time on your hands, it is in principle possible to prove all of the arithmetic axioms given earlier hold for these definitions of +, ⋅, and <.
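To see the definitions of +, ⋅, and < run, here is a small Python sketch. The representation is our own assumption: 0 is the empty tuple and Sx is the one-element tuple containing x, so SS0 is `(((),),)`. The helpers `numeral` and `value` exist only to translate to and from ordinary integers.

```python
ZERO = ()


def S(x):
    """Successor: wrap x in one more layer."""
    return (x,)


def add(x, y):
    """0 + y = y; Sx + y = x + Sy (move S's from the first addend to the second)."""
    while x != ZERO:
        x, y = x[0], S(y)
    return y


def mul(x, y):
    """0*y = 0; (Sx)*y = x*y + y."""
    if x == ZERO:
        return ZERO
    return add(mul(x[0], y), y)


def less(x, y):
    """x < y iff there is some z != 0 with x + z = y."""
    z = S(ZERO)
    remaining = y  # no witness z can be bigger than y, so try at most |y| of them
    while remaining != ZERO:
        if add(x, z) == y:
            return True
        z, remaining = S(z), remaining[0]
    return False


def numeral(n):
    """Build S...S0 with n S's (hypothetical helper)."""
    x = ZERO
    for _ in range(n):
        x = S(x)
    return x


def value(x):
    """Count the S's in a numeral (hypothetical helper)."""
    n = 0
    while x != ZERO:
        x, n = x[0], n + 1
    return n


four = value(mul(numeral(2), numeral(2)))  # SS0 * SS0, very slowly
```

As in the text, multiplication really is very slow here: keep the inputs small.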

28. Order properties

The < relation is a total order (see Relations). This means that in addition to trichotomy, transitivity holds: a < b and b < c implies a < c. Transitivity can be proved from the definition of < and axioms 1-12; see Exercise 4.2.1 in BiggsBook.

However, < is even stronger than this: it is also a well order. This means that any nonempty subset of the natural numbers has a least element, which is the key to carrying out InductionProofs.

29. PeanoAxioms

30. InductionProofs

31. Simple induction

Most of the ProofTechniques we've talked about so far are only really useful for proving a property of a single object (although we can sometimes use generalization to show that the same property is true of all objects in some set if we weren't too picky about which single object we started with). Mathematical induction (which mathematicians just call induction) is a powerful technique for showing that some property is true for many objects, where you can use the fact that it is true for small objects as part of the proof that it is true for large objects.

The basic framework for induction is as follows: given a sequence of statements P(0), P(1), P(2), ..., we'll prove that P(0) is true (the base case), and then prove that for all k, P(k) ⇒ P(k+1) (the induction step). We then conclude that P(n) is in fact true for all n.

31.1. Why induction works

There are three ways to show that induction works, depending on where you got your natural numbers from.

- Peano axioms

If you start with the PeanoAxioms, induction is one of them. Nothing more needs to be said.

- Well-ordering of the naturals

A set is well-ordered if every nonempty subset has a smallest element. (An example of a set that is not well-ordered is the integers ℤ.) If you build the natural numbers using 0 = { } and x+1 = x ∪ {x}, it is possible to prove that the resulting set is well-ordered. Because it is well-ordered, if P(n) does not hold for all n, there is a smallest n for which P(n) is false. But then either this n = 0, contradicting the base case, or P(n-1) is true (because otherwise n would not be the smallest) and P(n) is false, contradicting the induction step.

- Method of infinite descent

The original version, due to Fermat, goes like this: Suppose P(n) is false for some n > 0. Since P(n-1) ⇒ P(n) is logically equivalent to ¬P(n) ⇒ ¬P(n-1), we can conclude (using the induction step) ¬P(n-1). Repeat until you reach 0. The problem with this version is that the "repeat" step is in effect using an induction argument. The modern solution to this problem is to recast the argument to look like the well-ordering argument above, by assuming that n is the smallest n for which P(n) is false and asserting a contradiction once you prove ¬P(n-1). Historical note: Fermat may have used this technique to construct a plausible but invalid proof of his famous "Last Theorem" that a^n+b^n=c^n has no non-trivial integer solutions for n > 2.

31.2. Examples

The PigeonholePrinciple.

The number of subsets of an n-element set is 2^n.

1+3+5+7+...+(2n+1) = (n+1)^2.

2^n > n^2 for n ≥ 5.
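As a worked instance of the framework, here is one way the induction proof for the sum of odd numbers might be written out. The statement P(n) is the identity 1+3+5+...+(2n+1) = (n+1)^2; the layout is ours.

```latex
\textbf{Claim.} For all $n \in \mathbb{N}$, $P(n)$: $\sum_{i=0}^{n} (2i+1) = (n+1)^2$.

\textbf{Base case.} For $n = 0$ the sum is $2 \cdot 0 + 1 = 1 = (0+1)^2$.

\textbf{Induction step.} Assume $P(k)$. Then
\begin{align*}
\sum_{i=0}^{k+1} (2i+1)
  &= \sum_{i=0}^{k} (2i+1) + \bigl(2(k+1)+1\bigr) \\
  &= (k+1)^2 + (2k+3)   && \text{(induction hypothesis)} \\
  &= k^2 + 4k + 4 \\
  &= \bigl((k+1)+1\bigr)^2,
\end{align*}
which is $P(k+1)$.
```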

32. Strong induction

Sometimes when proving that the induction hypothesis holds for n+1, it helps to use the fact that it holds for all n' < n+1, not just for n. This sort of argument is called strong induction. Formally, it's equivalent to simple induction: the only difference is that instead of proving ∀k P(k) ⇒ P(k+1), we prove ∀k (∀m≤k Q(m)) ⇒ Q(k+1). But this is exactly the same thing if we let P(k) ≡ ∀m≤k Q(m), since if ∀m≤k Q(m) implies Q(k+1), it also implies ∀m≤k+1 Q(m), giving us the original induction formula ∀k P(k) ⇒ P(k+1).

32.1. Examples

Every n > 1 can be factored into a product of one or more prime numbers. Proof: By induction on n. The base case is n = 2, which factors as 2 = 2 (one prime factor). For n > 2, either (a) n is prime itself, in which case n = n is a prime factorization; or (b) n is not prime, in which case n = ab for some a and b, both greater than 1. Since a and b are both less than n, by the induction hypothesis we have a = p_1 p_2 ... p_k for some sequence of one or more primes and similarly b = p'_1 p'_2 ... p'_k'. Then n = p_1 p_2 ... p_k p'_1 p'_2 ... p'_k' is a prime factorization of n.
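The proof translates almost line for line into a recursive procedure: either n has no divisor between 2 and √n, in which case it is prime, or we split n = ab and recurse on both parts. The sketch below assumes trial division to find a; the name `prime_factors` is ours.

```python
def prime_factors(n):
    """Return a list of primes whose product is n, for n > 1."""
    if n < 2:
        raise ValueError("n must be greater than 1")
    a = 2
    while a * a <= n:
        if n % a == 0:
            # Case (b): n = a*b with both factors greater than 1 and less than n,
            # so the recursive calls play the role of the induction hypothesis.
            return prime_factors(a) + prime_factors(n // a)
        a += 1
    # Case (a): no divisor found, so n itself is prime.
    return [n]


factors_of_60 = prime_factors(60)
```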

Every deterministic bounded two-player perfect-information game that can't end in a draw has a winning strategy for one of the players. A perfect-information game is one in which both players know the entire state of the game at each decision point (like Chess or Go, but unlike Poker or Bridge); it is deterministic if there is no randomness that affects the outcome (this excludes Backgammon and Monopoly, some variants of Poker, and multiple hands of Bridge), and it's bounded if the game is guaranteed to end in at most a fixed number of moves starting from any reachable position (this also excludes Backgammon and Monopoly). Proof: For each position x, let b(x) be the bound on the number of moves made starting from x. Then if y is some position reached from x in one move, we have b(y) < b(x) (because we just used up a move). Let f(x) = 1 if the first player wins starting from position x and f(x) = 0 otherwise. We claim that f is well-defined. Proof: If b(x) = 0, the game is over, and so f(x) is either 0 or 1, depending on who just won. If b(x) > 0, then f(x) = max { f(y) | y is a successor to x } if it's the first player's turn to move and f(x) = min { f(y) | y is a successor to x } if it's the second player's turn to move. In either case each f(y) is well-defined (by the induction hypothesis) and so f(x) is also well-defined.

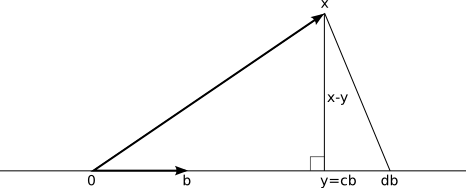
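The recursive definition of f is exactly what a game-tree search computes. As an illustration we pick a toy game that is not from the notes: a pile of stones, each player removes 1 or 2 stones on their turn, and whoever takes the last stone wins. The bound b(x) is just the number of stones left.

```python
from functools import lru_cache

MOVES = (1, 2)  # assumed rules of the toy game: remove 1 or 2 stones


@lru_cache(maxsize=None)
def f(stones, first_to_move):
    """Return 1 if the first player wins from this position, 0 otherwise."""
    if stones == 0:
        # b(x) = 0: the game is over, and whoever just moved took the last stone.
        return 0 if first_to_move else 1
    outcomes = [f(stones - m, not first_to_move) for m in MOVES if m <= stones]
    # The first player picks the best successor for themselves, the second the worst.
    return max(outcomes) if first_to_move else min(outcomes)


winners = [f(n, True) for n in range(1, 7)]
```

In this toy game the first player loses exactly when the starting pile is a multiple of 3, which matches the values computed above.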

The DivisionAlgorithm: for each n,m ∈ ℕ with m > 0 there is a unique q∈ℕ and a unique r∈ℕ such that n = qm+r and 0≤r<m. Proof: Fix m then proceed by induction on n. If n < m, then if q > 0 we have n = qm+r ≥ 1⋅m ≥ m, a contradiction. So in this case q = 0 is the only solution, and since n = qm + r = r we have a unique choice of r = n. If n ≥ m, by the induction hypothesis there is a unique q' and r' such that n-m = q'm+r' where 0≤r'<m. But then q = q'+1 and r = r' satisfies qm+r = (q'+1)m+r' = (q'm+r') + m = (n-m) + m = n. To show that this solution is unique, if there is some other q'' and r'' such that q''m+r'' = n with 0≤r''<m, then q'' ≥ 1 (otherwise n = r'' < m), so (q''-1)m + r'' = n-m = q'm+r', and by the uniqueness of q' and r' (ind. hyp. again), we have q''-1 = q' = q-1 and r'' = r' = r, giving that q'' = q and r'' = r. So q and r are unique.
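The existence half of the proof is itself an algorithm: if n < m we are done, and otherwise we solve the problem for n-m and add one to the quotient. A minimal sketch (the function name `divide` is ours, and we assume m > 0):

```python
def divide(n, m):
    """Return (q, r) with n = q*m + r and 0 <= r < m, for n >= 0 and m > 0."""
    if m <= 0:
        raise ValueError("m must be positive")
    if n < m:
        # Base case of the proof: q = 0 and r = n.
        return 0, n
    # Induction step: solve for n - m, then bump the quotient.
    q, r = divide(n - m, m)
    return q + 1, r


q, r = divide(17, 5)
```

This takes about n/m recursive calls, so it is a demonstration of the proof rather than a sensible way to divide large numbers.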

33. Recursion

A recursive definition is a definition with the structure of an inductive proof: give a base case and a rule for building bigger structures from smaller ones. Structures defined in this way are recursively-defined.

Examples of recursively-defined structures:

- Finite Von Neumann ordinals

- A finite von Neumann ordinal is either (a) the empty set ∅, or (b) x ∪ { x }, where x is a finite von Neumann ordinal.

- Complete binary trees

A complete binary tree consists of either (a) a leaf node, or (b) an internal node (the root) with two complete binary trees as children (or subtrees).

- Boolean formulas

- A boolean formula consists of either (a) a variable, (b) the negation operator applied to a Boolean formula, (c) the AND of two Boolean formulas, or (d) the OR of two Boolean formulas. A monotone Boolean formula is defined similarly, except that negations are forbidden.

- Finite sequences, recursive version

Before we defined a finite sequence as a function from some natural number (in its set form: n = { 0, 1, 2, ..., n-1 }) to some range. We could also define a finite sequence over some set S recursively, by the rule: [ ] (the empty sequence) is a finite sequence, and if a is a finite sequence and x∈S, then (x,a) is a finite sequence. (Fans of LISP will recognize this method immediately.)

The key point is that in each case the definition of an object is recursive: the object itself may appear as part of a larger object. Usually we assume that this recursion eventually bottoms out: there are some base cases (e.g. leaves of complete binary trees or variables in Boolean formulas) that do not lead to further recursion. If a definition doesn't bottom out in this way, the class of structures it describes might not be well-defined (i.e., we can't tell if some structure is an element of the class or not).

33.1. Recursively-defined functions

We can also define functions on recursive structures recursively:

- The depth of a binary tree

For a leaf, 0. For a tree consisting of a root with two subtrees, 1+max(d1, d2), where d1 and d2 are the depths of the two subtrees.

- The value of a Boolean formula given a particular variable assignment

- For a variable, the value (true or false) assigned to that variable. For a negation, the negation of the value of its argument. For an AND or OR, the AND or OR of the values of its arguments. (This definition is not quite as trivial as it looks, but it's still pretty trivial.)
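Both definitions can be written down as recursive functions once we fix a representation. The encoding below is our own assumption: a tree is either the string `"leaf"` or a pair `(left, right)`, and a formula is either a variable name or a tuple `("not", f)`, `("and", f, g)`, or `("or", f, g)`.

```python
LEAF = "leaf"


def depth(tree):
    """0 for a leaf; 1 + max of the subtree depths for an internal node."""
    if tree == LEAF:
        return 0
    left, right = tree
    return 1 + max(depth(left), depth(right))


def evaluate(formula, assignment):
    """Value of a Boolean formula under a dict mapping variable names to bools."""
    if isinstance(formula, str):
        return assignment[formula]          # base case: a variable
    op, *args = formula
    if op == "not":
        return not evaluate(args[0], assignment)
    if op == "and":
        return evaluate(args[0], assignment) and evaluate(args[1], assignment)
    if op == "or":
        return evaluate(args[0], assignment) or evaluate(args[1], assignment)
    raise ValueError("unknown operator: " + repr(op))


tree_depth = depth((LEAF, (LEAF, LEAF)))
formula_value = evaluate(("or", "x", ("and", "y", ("not", "z"))),
                         {"x": False, "y": True, "z": False})
```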

Or we can define ordinary functions recursively:

- The Fibonacci series

Let F(0) = F(1) = 1. For n > 1, let F(n) = F(n-1) + F(n-2).

- Factorial

Let 0! = 1. For n > 0, let n! = n × (n-1)!.
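These two definitions are already programs in all but syntax. A direct Python transcription (a sketch; the naive Fibonacci recursion takes exponential time and is only meant to mirror the definition):

```python
def fib(n):
    """F(0) = F(1) = 1; F(n) = F(n-1) + F(n-2) for n > 1."""
    if n <= 1:
        return 1
    return fib(n - 1) + fib(n - 2)


def factorial(n):
    """0! = 1; n! = n * (n-1)! for n > 0."""
    if n == 0:
        return 1
    return n * factorial(n - 1)


first_fibs = [fib(n) for n in range(7)]
```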

33.2. Recursive definitions and induction

Recursive definitions have the same form as an induction proof. There are one or more base cases, and one or more recursion steps that correspond to the induction step in an induction proof. The connection is not surprising if you think of a definition of some class of objects as a predicate that identifies members of the class: a recursive definition is just a formula for writing induction proofs that say that certain objects are members.

Recursively-defined objects and functions also lend themselves easily to induction proofs about their properties; on general structures, such induction arguments go by the name of structural induction.

34. Structural induction

For finite structures, we can do induction over the structure. Formally we can think of this as doing induction on the size of the structure or part of the structure we are looking at.

Examples:

- Every complete binary tree with n leaves has n-1 internal nodes

Base case is a tree consisting of just a leaf; here n = 1 and there are n - 1 = 0 internal nodes. The induction step considers a tree consisting of a root and two subtrees. Let n1 and n2 be the number of leaves in the two subtrees; we have n1+n2 = n; and the number of internal nodes, counting the nodes in the two subtrees plus one more for the root, is (n1-1)+(n2-1)+1 = n1+n2 - 1 = n-1.

- Monotone Boolean formulas generate monotone functions

- What this means is that changing a variable from false to true can never change the value of the formula from true to false. Proof is by induction on the structure of the formula: for a naked variable, it's immediate. For an AND or OR, observe that changing a variable from false to true can only leave the values of the arguments unchanged, or change one or both from false to true (induction hypothesis); the rest follows by staring carefully at the truth table for AND or OR.

- Bounding the size of a binary tree with depth d

We'll show that it has at most 2^(d+1)-1 nodes. Base case: the tree consists of one leaf, d = 0, and there are 2^(0+1)-1 = 2-1 = 1 nodes. Induction step: Given a tree of depth d > 0, it consists of a root (1 node), plus two subtrees of depth at most d-1. The two subtrees each have at most 2^((d-1)+1)-1 = 2^d-1 nodes (induction hypothesis), so the total number of nodes is at most 2(2^d-1)+1 = 2^(d+1)-2+1 = 2^(d+1)-1.
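Claims proved by structural induction are easy to spot-check by generating small structures exhaustively. The sketch below uses our own encoding (a tree is `"leaf"` or a pair of subtrees), builds every complete binary tree with a given number of leaves, and lets us test both the leaf count and the size bound on all of them.

```python
LEAF = "leaf"


def trees(n):
    """All complete binary trees with exactly n leaves (n >= 1)."""
    if n == 1:
        return [LEAF]
    return [(left, right)
            for k in range(1, n)
            for left in trees(k)
            for right in trees(n - k)]


def leaves(t):
    return 1 if t == LEAF else leaves(t[0]) + leaves(t[1])


def internal(t):
    return 0 if t == LEAF else 1 + internal(t[0]) + internal(t[1])


def depth(t):
    return 0 if t == LEAF else 1 + max(depth(t[0]), depth(t[1]))


def claims_hold(t):
    """n leaves => n-1 internal nodes, and at most 2^(d+1)-1 nodes at depth d."""
    n, d = leaves(t), depth(t)
    return internal(t) == n - 1 and n + internal(t) <= 2 ** (d + 1) - 1


all_ok = all(claims_hold(t) for n in range(1, 7) for t in trees(n))
```

A check like this is no proof, but it catches off-by-one errors in the statement before you spend time on the induction.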

35. SummationNotation

36. Summations

Summations are the discrete versions of integrals; given a sequence x_a, x_{a+1}, ..., x_b, its sum x_a + x_{a+1} + ... + x_b is written as

\[\sum_{i=a}^{b} x_i.\]

The large jagged symbol is a stretched-out version of a capital Greek letter sigma. The variable i is called the index of summation, a is the lower bound or lower limit, and b is the upper bound or upper limit. Mathematicians invented this notation centuries ago because they didn't have for loops; the intent is that you loop through all values of i from a to b (including both endpoints), summing up the argument of the ∑ for each i.

If b < a, then the sum is zero. For example,

\[\sum_{i=0}^{-5} \frac{2^i \sin i}{i^3} = 0.\]

This rule mostly shows up as an extreme case of a more general formula, e.g.

\[\sum_{i=1}^{n} i = \frac{n(n+1)}{2},\]

which still works even when n=0 or n=-1 (but not for n=-2).

Summation notation is used both for laziness (it's more compact to write

\[\sum_{i=0}^{n} (2i+1)\]

than 1 + 3 + 5 + 7 + ... + (2n+1)) and precision (it's also more clear exactly what you mean).

36.1. Formal definition

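For finite sums, we can formally define the value by either of two recurrences:

\[\sum_{i=a}^{b} f(i) = \begin{cases} 0 & \mbox{if $b < a$,} \\ f(a) + \sum_{i=a+1}^{b} f(i) & \mbox{otherwise;} \end{cases}\]

\[\sum_{i=a}^{b} f(i) = \begin{cases} 0 & \mbox{if $b < a$,} \\ f(b) + \sum_{i=a}^{b-1} f(i) & \mbox{otherwise.} \end{cases}\]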

In English, we can compute a sum recursively by computing either the sum of the last n-1 values or the first n-1 values, and then adding in the value we left out. (For infinite sums we need a different definition; see below.)
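The English description above can be turned into two short recursive functions, one peeling off the first term and one peeling off the last. The function names are ours, and f stands for whatever expression sits under the ∑.

```python
def sum_peel_first(f, a, b):
    """Add f(a) to the sum of the remaining terms a+1..b; empty sums are 0."""
    if b < a:
        return 0
    return f(a) + sum_peel_first(f, a + 1, b)


def sum_peel_last(f, a, b):
    """Add f(b) to the sum of the earlier terms a..b-1; empty sums are 0."""
    if b < a:
        return 0
    return sum_peel_last(f, a, b - 1) + f(b)


def odd(i):
    return 2 * i + 1


odd_sum = sum_peel_first(odd, 0, 4)  # 1 + 3 + 5 + 7 + 9
```

Both versions agree with each other and with an ordinary for loop; which one you use in a proof usually depends on which end of the sum the induction hypothesis applies to.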

36.2. Choosing and replacing index variables

When writing a summation, you can generally pick any index variable you like, although i, j, k, etc. are popular choices. Usually it's a good idea to pick an index that isn't used outside the sum. Though

\[\sum_{n=0}^{n} n = \sum_{i=0}^{n} i\]

has a well-defined meaning, the version on the right-hand side is a lot less confusing.

In addition to renaming indices, you can also shift them, provided you shift the bounds to match. For example, rewriting

\[\sum_{i=1}^{n} (i-1)\]

as

\[\sum_{j=0}^{n-1} j\]

(by substituting j for i-1) makes the sum more convenient to work with.

36.3. Scope

The scope of a summation extends to the first addition or subtraction symbol that is not enclosed in parentheses or part of some larger term (e.g., in the numerator of a fraction). So

\[\sum_{i=1}^{n} i^2 + 1 = \left(\sum_{i=1}^{n} i^2\right) + 1 = 1 + \sum_{i=1}^{n} i^2 \ne \sum_{i=1}^{n} (i^2+1).\]

Since this can be confusing, it is generally safest to wrap the sum in parentheses (as in the second form) or move any trailing terms to the beginning. An exception is when adding together two sums, as in

\[\sum_{i=1}^{n} i^2 + \sum_{i=1}^{n^2} i = \left(\sum_{i=1}^{n} i^2\right) + \left(\sum_{i=1}^{n^2} i\right).\]

Here the looming bulk of the second Sigma warns the reader that the first sum is ending; it is much harder to miss than the relatively tiny plus symbol in the first example.

36.4. Sums over given index sets

Sometimes we'd like to sum an expression over values that aren't consecutive integers, or may not even be integers at all. This can be done using a sum over all indices that are members of a given index set, or in the most general form satisfy some given predicate (with the usual set-theoretic caveat that the objects that satisfy the predicate must form a set). Such a sum is written by replacing the lower and upper limits with a single subscript that gives the predicate that the indices must obey.

For example, we could sum i^2 for i in the set {3,5,7}:

\[\sum_{i \in \{3,5,7\}} i^2 = 3^2 + 5^2 + 7^2 = 83.\]

Or we could sum the sizes of all subsets of a given set S:

\[\sum_{A \subseteq S} |A|.\]

Or we could sum the inverses of all prime numbers less than 1000:

\[\sum_{\mbox{\scriptsize $p < 1000$, $p$ is prime}} 1/p.\]

Sometimes when writing a sum in this form it can be confusing exactly which variable or variables are the indices. The usual convention is that a variable is always an index if it doesn't have any meaning outside the sum, and if possible the index variable is put first in the expression under the Sigma. If it is not obvious what a complicated sum means, it is generally best to try to rewrite it to make it more clear; still, you may see sums that look like

\[\sum_{1 \le i < j \le n} \frac{i}{j}\]

or

\[\sum_{x \in A \subseteq S} |A|\]

where the first sum sums over all pairs of values (i,j) that satisfy the predicate, with each pair appearing exactly once, and the second sums over all sets A that are subsets of S and contain x (assuming x and S are defined outside the summation). Hopefully, you will not run into too many sums that look like this, but it's worth being able to decode them if you do.
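One way to decode such sums is to write them as loops with an explicit filter. The sketch below (function names are ours) spells out the two examples; exact fractions are used so that the first result is not blurred by floating-point rounding.

```python
from fractions import Fraction
from itertools import combinations


def sum_over_pairs(n):
    """Sum of i/j over all pairs (i, j) with 1 <= i < j <= n, each pair once."""
    return sum(Fraction(i, j)
               for j in range(1, n + 1)
               for i in range(1, j))


def sum_sizes_of_subsets_containing(x, s):
    """Sum of |A| over all A that are subsets of s and contain x."""
    items = sorted(s)
    return sum(len(a)
               for r in range(len(items) + 1)
               for a in combinations(items, r)
               if x in a)


pairs_total = sum_over_pairs(3)                               # 1/2 + 1/3 + 2/3
sizes_total = sum_sizes_of_subsets_containing(1, {1, 2, 3})   # 1 + 2 + 2 + 3
```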

Sums over a given set are guaranteed to be well-defined only if the set is finite. In this case we can use the fact that there is a bijection between any finite set S and the ordinal |S| to rewrite the sum as a sum over indices in |S|. For example, if |S| = n, then there exists a bijection f:{0..n-1}↔S, so we can define

\[\sum_{i \in S} x_i = \sum_{i=0}^{n-1} x_{f(i)}.\]

If S is infinite, this is trickier. For countable S, where there is a bijection f:ℕ↔S, we can sometimes rewrite

\[\sum_{i \in S} x_i = \sum_{i=0}^{\infty} x_{f(i)}.\]

and use the definition of an infinite sum (given below). Note that if the x_i have different signs the result we get may depend on which bijection we choose. For this reason such infinite sums are probably best avoided unless you can explicitly use ℕ as the index set.

36.5. Sums without explicit bounds

When the index set is understood from context, it is often dropped, leaving only the index, as in ∑_i i^2. This will generally happen only if the index spans all possible values in some obvious range, and can be a mark of sloppiness in formal mathematical writing. Theoretical physicists adopt a still more lazy approach, and leave out the ∑_i part entirely in certain special types of sums: this is known as the Einstein summation convention after the notoriously lazy physicist who proposed it.

36.6. Infinite sums

Sometimes you may see an expression where the upper limit is infinite, as in

\[\sum_{i=1}^{\infty} \frac{1}{i^2}.\]

The meaning of this expression is the limit of the sequence s of partial sums obtained by taking the sum of the first term, the sum of the first two terms, the sum of the first three terms, etc. The sequence converges to a particular value x if for any ε>0, there exists an N such that for all n > N, the value of s_n is within ε of x (formally, |s_n-x| < ε). We will see some examples of infinite sums when we look at GeneratingFunctions.

36.7. Double sums

Nothing says that the expression inside a summation can't be another summation. This gives double sums, such as in this rather painful definition of multiplication for non-negative integers:

\[a \times b \stackrel{\mbox{\scriptsize def}}{=} \sum_{i=1}^{a} \sum_{j=1}^{b} 1.\]

If you think of a sum as a for loop, a double sum is two nested for loops. The effect is to sum the innermost expression over all pairs of values of the two indices.

Here's a more complicated double sum where the limits on the inner sum depend on the index of the outer sum:

\[\sum_{i=0}^{n} \sum_{j=0}^{i} (i+1)(j+1).\]

When n=1, this will compute (0+1)(0+1) + (1+1)(0+1) + (1+1)(1+1) = 7. For larger n the number of terms grows quickly.
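Taking the for-loop reading literally gives the following sketch. The function names are ours; `times` is the painful definition of multiplication, and `triangular_double_sum` is the sum whose inner limit depends on the outer index.

```python
def times(a, b):
    """a * b as a double sum of 1 over i = 1..a and j = 1..b."""
    total = 0
    for i in range(1, a + 1):
        for j in range(1, b + 1):
            total += 1
    return total


def triangular_double_sum(n):
    """Sum of (i+1)(j+1) over i = 0..n and j = 0..i."""
    total = 0
    for i in range(n + 1):
        for j in range(i + 1):   # the inner bound depends on the outer index
            total += (i + 1) * (j + 1)
    return total


value_at_1 = triangular_double_sum(1)  # (0+1)(0+1) + (1+1)(0+1) + (1+1)(1+1)
```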

There are also triple sums, quadruple sums, etc.

37. Computing sums

When confronted with some nasty sum, it is nice to be able to convert it into a simpler expression that doesn't contain any Sigmas. It is not always possible to do this, and the problem of finding a simpler expression for a sum is very similar to the problem of computing an integral (see HowToIntegrate): in both cases the techniques available are mostly limited to massaging the summation until it turns into something whose simpler expression you remember. To do this, it helps to both (a) have a big toolbox of sums with known values, and (b) have some rules for manipulating summations to get them into a more convenient form. We'll start with the toolbox.

37.1. Some standard sums

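Here are the three formulas you should either memorize or remember how to derive:

\[\sum_{i=1}^{n} 1 = n\]

\[\sum_{i=1}^{n} i = \frac{n(n+1)}{2}\]

\[\sum_{i=0}^{n} r^i = \frac{1-r^{n+1}}{1-r}\]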

Rigorous proofs of these can be obtained by induction on n.

For not so rigorous proofs, the second identity can be shown (using a trick alleged to have been invented by the legendary 18th-century mathematician Carl Friedrich Gauss at a frighteningly early age; see here for more details on this legend) by adding up two copies of the sequence running in opposite directions, one term at a time:

     S =   1   +   2   +   3   + .... +   n
     S =   n   +  n-1  +  n-2  + .... +   1
    ------------------------------------------------------
    2S = (n+1) + (n+1) + (n+1) + .... + (n+1) = n(n+1),

and from 2S=n(n+1) we get S = n(n+1)/2.

For the last identity, start with

![\[\sum_{i=0}^{\infty} r^i = \frac{1}{1-r},\] \[\sum_{i=0}^{\infty} r^i = \frac{1}{1-r},\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_57b487bbab22927814b613c2e588aaaf626bd560_p1.png)

which holds when |r| < 1. The proof is that if

![\[S = \sum_{i=0}^{\infty} r^i\] \[S = \sum_{i=0}^{\infty} r^i\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_89eb60890414027cf75c0717e8cff17499c72afd_p1.png)

then

![\[rS = \sum_{i=0}^{\infty} r^{i+1} = \sum_{i=1}^{\infty} r^i\] \[rS = \sum_{i=0}^{\infty} r^{i+1} = \sum_{i=1}^{\infty} r^i\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_b4dd58b434d59f2febc967aea39406f66438da56_p1.png)

and so

![\[S-rS = r^0 = 1.\] \[S-rS = r^0 = 1.\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_db76d66d4ad405c6dcaff85c9705d6c89a7589c0_p1.png)

Solving for S then gives S = 1/(1-r).

We can now get the sum up to n by subtracting off the extra terms starting with r^{n+1}:

![\[\sum_{i=0}^{n} r^i = \sum_{i=0}^{\infty} r^i - r^{n+1} \sum_{i=0}^{\infty} r^i = \frac{1}{1-r} - \frac{r^{n+1}}{1-r} = \frac{1-r^{n+1}}{1-r}.\] \[\sum_{i=0}^{n} r^i = \sum_{i=0}^{\infty} r^i - r^{n+1} \sum_{i=0}^{\infty} r^i = \frac{1}{1-r} - \frac{r^{n+1}}{1-r} = \frac{1-r^{n+1}}{1-r}.\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_c320cba1f5204b5396b2eba8deee6bc8a6b17985_p1.png)

Amazingly enough, this formula works even when r is greater than 1. If r is equal to 1, then the formula doesn't work (it requires dividing zero by zero), but there is an easier way to get the solution.
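A quick way to convince yourself that the closed form really does work outside the range |r| < 1 is to compare it against a term-by-term sum. The sketch below does this with exact rational arithmetic; the function names are made up for this illustration, and a finite check like this is evidence rather than a proof.

```python
from fractions import Fraction

def geometric_sum_direct(r, n):
    """Add up r^0 + r^1 + ... + r^n term by term."""
    return sum(r**i for i in range(n + 1))

def geometric_sum_closed(r, n):
    """Closed form (1 - r^(n+1)) / (1 - r); undefined when r == 1."""
    if r == 1:
        raise ValueError("closed form divides zero by zero when r == 1")
    return (1 - r**(n + 1)) / (1 - r)

# The two agree for |r| < 1, for r > 1, and for negative r.
for r in (Fraction(1, 2), Fraction(3), Fraction(-2, 3)):
    for n in range(6):
        assert geometric_sum_direct(r, n) == geometric_sum_closed(r, n)

# When r == 1 every term is 1, so the sum is just the number of terms, n + 1.
assert geometric_sum_direct(Fraction(1), 5) == 6
```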

Other useful sums can be found in various places. RosenBook and ConcreteMathematics both provide tables of sums in their chapters on GeneratingFunctions. But it is usually better to be able to reconstruct the solution of a sum rather than trying to memorize such tables.

37.2. Summation identities

The summation operator is linear. This means that constant factors can be pulled out of sums:

![\[\sum_{i \in S} a x_i = a \sum_{i \in S} x_i\] \[\sum_{i \in S} a x_i = a \sum_{i \in S} x_i\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_085bada7dc9472d29e1c2056dc9992e45d4f65cb_p1.png)

and sums inside sums can be split:

![\[\sum_{i \in S} (x_i + y_i) = \sum_{i \in S} x_i + \sum_{i \in S} y_i.\] \[\sum_{i \in S} (x_i + y_i) = \sum_{i \in S} x_i + \sum_{i \in S} y_i.\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_e462b830280a2e74c41a463ce9866919f2a9775d_p1.png)

With multiple sums, the order of summation is not important, provided the bounds on the inner sum don't depend on the index of the outer sum:

![\[\sum_{i \in S} \sum_{j \in T} x_{ij} = \sum_{j \in T} \sum_{i \in S} x_{ij}.\] \[\sum_{i \in S} \sum_{j \in T} x_{ij} = \sum_{j \in T} \sum_{i \in S} x_{ij}.\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_fbf0625abef756a58c3bf4c585753c3a96bcf543_p1.png)

Products of sums can be turned into double sums of products and vice versa:

![\[\left(\sum_{i \in S} x_i\right)\left(\sum_{j \in T} y_j\right) = \sum_{i \in S} \sum_{j \in T} x_i y_j.\] \[\left(\sum_{i \in S} x_i\right)\left(\sum_{j \in T} y_j\right) = \sum_{i \in S} \sum_{j \in T} x_i y_j.\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_996ead404602e11795dcc1ed817e0298e5d51303_p1.png)

These identities can often be used to transform a sum you can't solve into something simpler.
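If you want to see the identities in action, the following sketch checks each of them on small, arbitrarily chosen index sets. The particular values of `S`, `T`, `a`, `x`, `y`, `z`, and `w` are assumptions made up for the example.

```python
from fractions import Fraction

S = range(1, 5)          # index set for i
T = range(1, 4)          # index set for j
a = Fraction(7, 2)       # a constant factor
x = {i: Fraction(1, i) for i in S}
y = {i: i * i for i in S}
z = {j: j + 1 for j in T}
w = {(i, j): 10 * i + j for i in S for j in T}

def check_summation_identities():
    # Constant factors can be pulled out of a sum.
    assert sum(a * x[i] for i in S) == a * sum(x[i] for i in S)
    # A sum of sums can be split.
    assert (sum(x[i] + y[i] for i in S)
            == sum(x[i] for i in S) + sum(y[i] for i in S))
    # The order of summation can be swapped when the bounds are independent.
    assert (sum(sum(w[i, j] for j in T) for i in S)
            == sum(sum(w[i, j] for i in S) for j in T))
    # A product of sums is a double sum of products.
    assert (sum(x[i] for i in S) * sum(z[j] for j in T)
            == sum(x[i] * z[j] for i in S for j in T))
    return True

check_summation_identities()
```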

37.3. What to do if nothing else works

If nothing else works, you can try using the guess but verify method, which is a variant on the same method for identifying sequences. Here we write out the values of the sum for the first few values of the upper limit (for example), and hope that we recognize the sequence. If we do, we can then try to prove that a formula for the sequence of sums is correct by induction.

Example: Suppose we want to compute

![\[S(n) = \sum_{k=1}^{n} (2k-1)\] \[S(n) = \sum_{k=1}^{n} (2k-1)\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_ee8c69f7ecb0c67ac3fb18f1c3c74476b0b9e8f2_p1.png)

but that it doesn't occur to us to split it up and use the ∑k and ∑1 formulas. Instead, we can write down a table of values:

| n | S(n) |
|---|------|
| 0 | 0 |
| 1 | 1 |
| 2 | 1+3=4 |
| 3 | 1+3+5=9 |
| 4 | 1+3+5+7=16 |
| 5 | 1+3+5+7+9=25 |

At this point we might guess that S(n) = n^2. To verify this, observe that it holds for n=0, and for larger n we have S(n) = S(n-1) + (2n-1) = (n-1)^2 + 2n - 1 = n^2 - 2n + 1 + 2n - 1 = n^2. So we can conclude that our guess was correct.
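The "guess" half of the method is easy to mechanize. The sketch below (with a made-up helper name, `odd_sum`) rebuilds the table of values and then compares the guess against many more values of n. Passing such a check is only evidence for the guess; the induction argument is still what proves it.

```python
def odd_sum(n):
    """S(n) = sum of (2k - 1) for k = 1..n; the empty sum S(0) is 0."""
    return sum(2 * k - 1 for k in range(1, n + 1))

# Tabulate the first few values, as in the table of values for S(n).
table = [(n, odd_sum(n)) for n in range(6)]
print(table)  # [(0, 0), (1, 1), (2, 4), (3, 9), (4, 16), (5, 25)]

# Compare the guess S(n) = n^2 against a larger range of n.
assert all(odd_sum(n) == n * n for n in range(200))
```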

If this doesn't work, you could always try using GeneratingFunctions.

37.4. Strategies for asymptotic estimates

Mostly in AlgorithmAnalysis we do not need to compute sums exactly, because we are just going to wrap the result up in some asymptotic expression anyway (see AsymptoticNotation). This makes our life much easier, because we only need an approximate solution.

Here's my general strategy for computing sums:

37.4.1. Pull out constant factors

Pull as many constant factors out as you can (where constant in this case means anything that does not involve the summation index). Example:
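As a small illustration (the particular sum is an assumption chosen for this sketch), the factor n below does not involve the summation index i, so it can be moved outside the sum, leaving a harmonic number:

```latex
\[\sum_{i=1}^{n} \frac{n}{i} = n \sum_{i=1}^{n} \frac{1}{i} = n H_n.\]
```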

37.4.2. Bound using a known sum

See if it's bounded above or below by some other sum whose solution you already know. Good sums to try (you should memorize all of these):

37.4.2.1. Geometric series

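\[\sum_{i=0}^{n} x^i = \frac{1-x^{n+1}}{1-x} \qquad\mbox{and}\qquad \sum_{i=0}^{\infty} x^i = \frac{1}{1-x}.\]

The way to recognize a geometric series is that the ratio between adjacent terms is constant. The second formula requires |x| < 1. If you memorize the second formula, you can rederive the first one. If you're Carl_Friedrich_Gauss, you can skip memorizing the second formula.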

A useful trick to remember for geometric series is that if x is a positive constant that is not exactly 1, the sum is always big-Theta of its largest term. So for example

\[\sum_{i=1}^{n} 2^i = \Theta(2^n)\]

(the exact value is 2^{n+1}-2), and

\[\sum_{i=1}^{n} 2^{-i} = \Theta(1)\]

(the exact value is 1-2^{-n}). This fact is the basis of the Master Theorem, described in SolvingRecurrences. If the ratio between terms equals 1, the formula doesn't work; instead, we have a constant series (see below).
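To see the "largest term dominates" rule numerically, the sketch below divides a geometric sum by its largest term and checks that the ratio stays between fixed constants as n grows. The helper name and the choice of x = 3 and x = 1/3 are assumptions for illustration; the constant 3/2 comes from x/(x-1) for x = 3 and from 1/(1-x) for x = 1/3.

```python
from fractions import Fraction

def ratio_to_largest_term(x, n):
    """Return (x^0 + x^1 + ... + x^n) divided by the largest single term."""
    terms = [x**i for i in range(n + 1)]
    return sum(terms) / max(terms)

for n in range(1, 30):
    # Growing series: the last term x^n is the largest.
    assert 1 <= ratio_to_largest_term(Fraction(3), n) < Fraction(3, 2)
    # Shrinking series: the first term x^0 = 1 is the largest.
    assert 1 <= ratio_to_largest_term(Fraction(1, 3), n) < Fraction(3, 2)
```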

37.4.2.2. Constant series

\[\sum_{i=1}^{n} 1 = n.\]

37.4.2.3. Arithmetic series

The simplest arithmetic series is

\[\sum_{i=1}^{n} i = \frac{n(n+1)}{2}.\]

The way to remember this formula is that it's just n times the average value (n+1)/2. The way to recognize an arithmetic series is that the difference between adjacent terms is constant. The general arithmetic series is of the form

\[\sum_{i=1}^{n} (ai+b) = a \sum_{i=1}^{n} i + \sum_{i=1}^{n} b = \frac{an(n+1)}{2} + bn.\]

Because the general series expands so easily to the simplest series, it's usually not worth memorizing the general formula.

37.4.2.4. Harmonic series

\[\sum_{i=1}^{n} \frac{1}{i} = H_n = \Theta(\log n).\]

This can be rederived using the integral technique given below or by summing the last half of the series, so it is mostly useful to remember in case you run across H_n (the n-th harmonic number).

37.4.3. Bound part of the sum

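See if there's some part of the sum that you can bound. For example,

\[\sum_{i=1}^{n} i^3\]

has a (painful) exact solution, or can be approximated by the integral trick described below, but it can very quickly be solved to within a constant factor by observing that

\[\sum_{i=1}^{n} i^3 \le \sum_{i=1}^{n} n^3 = n^4 = O(n^4)\]

and

\[\sum_{i=1}^{n} i^3 \ge \sum_{i=\lceil n/2 \rceil}^{n} i^3 \ge \sum_{i=\lceil n/2 \rceil}^{n} \left(\frac{n}{2}\right)^3 \ge \frac{n}{2} \cdot \frac{n^3}{8} = \Omega(n^4).\]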

37.4.4. Integrate

Integrate. If f(n) is non-decreasing and you know how to integrate it, then

\[\int_{a-1}^{b} f(x)\,dx \le \sum_{i=a}^{b} f(i) \le \int_{a}^{b+1} f(x)\,dx,\]

which is enough to get a big-Theta bound for almost all functions you are likely to encounter in algorithm analysis. If you don't remember how to integrate, see HowToIntegrate.
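For a non-decreasing f, the standard form of this bound sandwiches the sum of f(i) for i from a to b between the integral of f from a-1 to b and the integral of f from a to b+1. Here is a minimal check for the assumed example f(x) = x^2 with a = 1 and b = n, where both integrals are easy to do by hand. Everything is multiplied by 3 so that the arithmetic stays in exact integers.

```python
def sum_of_squares(n):
    """1^2 + 2^2 + ... + n^2, computed term by term."""
    return sum(i * i for i in range(1, n + 1))

def lower_integral_times_3(n):
    """3 * (integral of x^2 from 0 to n) = n^3."""
    return n**3

def upper_integral_times_3(n):
    """3 * (integral of x^2 from 1 to n+1) = (n+1)^3 - 1."""
    return (n + 1)**3 - 1

for n in range(1, 200):
    s3 = 3 * sum_of_squares(n)
    assert lower_integral_times_3(n) <= s3 <= upper_integral_times_3(n)
```

Both bounds are Θ(n^3), so the sum is Θ(n^3) as well.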

37.4.5. Grouping terms

Try grouping terms together. For example, the standard trick for showing that the harmonic series is unbounded in the limit is to argue that 1 + 1/2 + 1/3 + 1/4 + 1/5 + 1/6 + 1/7 + 1/8 + ... ≥ 1 + 1/2 + (1/4 + 1/4) + (1/8 + 1/8 + 1/8 + 1/8) + ... ≥ 1 + 1/2 + 1/2 + 1/2 + ... . I usually try everything else first, but sometimes this works if you get stuck.
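The grouping argument says that the first 2^k terms of the harmonic series add up to at least 1 + k/2, since each group after the first two terms contributes at least 1/2. The sketch below checks that consequence with exact fractions; `harmonic_exact` is a made-up helper name.

```python
from fractions import Fraction

def harmonic_exact(n):
    """H_n = 1 + 1/2 + ... + 1/n as an exact fraction."""
    return sum(Fraction(1, i) for i in range(1, n + 1))

# Each doubling of n adds at least 1/2, so H_(2^k) >= 1 + k/2.
for k in range(0, 11):
    assert harmonic_exact(2**k) >= 1 + Fraction(k, 2)
```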

37.4.6. Oddities

One oddball sum that shows up occasionally but is hard to solve using any of the above techniques is

\[\sum_{i=1}^{n} a^i i.\]

If a < 1, this is Θ(1) (the exact formula for

\[\sum_{i=1}^{\infty} a^i i\]

when a < 1 is a/(1-a)^2, which gives a constant upper bound for the sum stopping at n); if a = 1, it's just an arithmetic series; if a > 1, the largest term dominates and the sum is Θ(a^n n). There is an exact formula for the last case, but it's ugly; if you just want to show it's O(a^n n), the simplest approach is to bound the series

\[\sum_{i=1}^{n} a^i i\]

by the geometric series

\[\sum_{i=1}^{n} a^i n \le \frac{a^{n+1} n}{a-1} = O(a^n n).\]

I wouldn't bother memorizing this one provided you bookmark this page.
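For the skeptical, here is a small check of both regimes, assuming the oddball sum in question is the sum of i·a^i for i from 1 to n (consistent with the a/(1-a)^2 limit quoted above). The choices a = 1/2 and a = 2 and the helper name are made up for the example.

```python
from fractions import Fraction

def weighted_geometric_sum(a, n):
    """Sum of i * a^i for i = 1..n."""
    return sum(i * a**i for i in range(1, n + 1))

# a < 1: partial sums stay below the infinite-sum value a / (1 - a)^2.
half = Fraction(1, 2)
limit = half / (1 - half)**2          # equals 2
for n in range(1, 40):
    assert weighted_geometric_sum(half, n) < limit

# a > 1: the sum is within a constant factor of its largest term n * a^n.
for n in range(1, 40):
    s = weighted_geometric_sum(2, n)
    assert n * 2**n <= s <= 2 * n * 2**n
```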

37.4.7. Final notes

In practice, almost every sum you are likely to encounter in AlgorithmAnalysis will be of the form

\[\sum_{i=1}^{n} f(i),\]

where f(n) is exponential (so that it's bounded by a geometric series and the largest term dominates) or polynomial (so that f(n/2) = Θ(f(n)) and the sum is Θ(n f(n)) using the

\[\sum_{i=n/2}^{n} f(i) \ge \frac{n}{2} f(n/2) = \Omega(n f(n))\]

lower bound).

ConcreteMathematics spends a lot of time on computing sums exactly. The most useful technique for doing this is to use GeneratingFunctions.

38. Products

What if you want to multiply a series of values instead of adding them? The notation is the same as for a sum, except that you replace the Sigma with a Pi, as in this definition of the factorial function for non-negative n:

![\[n! \stackrel{\mbox{\scriptsize def}}{=} \prod_{i=1}^{n} i = 1 \cdot 2 \cdot \cdots \cdot n.\] \[n! \stackrel{\mbox{\scriptsize def}}{=} \prod_{i=1}^{n} i = 1 \cdot 2 \cdot \cdots \cdot n.\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_1d385826ca3bffff43fbe9476e1ca312ba42dc05_p1.png)

The other difference is that while an empty sum is defined to have the value 0, an empty product is defined to have the value 1. The reason for this rule (in both cases) is that an empty sum or product should return the identity element for the corresponding operation: the value that when added to or multiplied by some other value x doesn't change x. This allows writing general rules like:

\[\sum_{i \in A \cup B} f(i) = \sum_{i \in A} f(i) + \sum_{i \in B} f(i)\]

\[\prod_{i \in A \cup B} f(i) = \left(\prod_{i \in A} f(i)\right)\left(\prod_{i \in B} f(i)\right)\]

which hold as long as A∩B=Ø. Without the rule that the sum over an empty set is 0 and the product 1, we'd have to put in a special case for when one or both of A and B were empty.

Note that a consequence of this definition is that 0! = 1.
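Python's built-in `sum` and the standard library's `math.prod` (available from Python 3.8) follow the same conventions for empty inputs, which makes them a convenient way to see why the identity-element rule is the right one. The `factorial` helper and the sets `A` and `B` below are assumptions for illustration.

```python
import math

def factorial(n):
    """n! as the product of 1..n; the empty product gives 0! = 1."""
    return math.prod(range(1, n + 1))

assert sum([]) == 0            # empty sum is the additive identity
assert math.prod([]) == 1      # empty product is the multiplicative identity
assert factorial(0) == 1 and factorial(5) == 120

# Splitting over a disjoint union works with no special case for empty sets.
A, B = {1, 2, 3}, {10, 20}
assert sum(A | B) == sum(A) + sum(B)
assert math.prod(A | B) == math.prod(A) * math.prod(B)
assert sum(A | set()) == sum(A) + sum(set())
assert math.prod(A | set()) == math.prod(A) * math.prod(set())
```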

39. Other big operators

Some more obscure operators also allow you to compute some aggregate over a series, with the same rules for indices, lower and upper limits, etc., as ∑ and ∏. These include:

- Big AND:

![\[\bigwedge_{x \in S} P(x) \equiv P(x_1) \wedge P(x_2) \wedge \ldots \equiv \forall x \in S: P(x).\] \[\bigwedge_{x \in S} P(x) \equiv P(x_1) \wedge P(x_2) \wedge \ldots \equiv \forall x \in S: P(x).\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_e6651e2ab4896d7727685173d160c98d9fdb8ca2_p1.png)

- Big OR:

![\[\bigvee_{x \in S} P(x) \equiv P(x_1) \vee P(x_2) \vee \ldots \equiv \exists x \in S: P(x).\] \[\bigvee_{x \in S} P(x) \equiv P(x_1) \vee P(x_2) \vee \ldots \equiv \exists x \in S: P(x).\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_cf524b0eb075cd6d661f198cb1c393af94568acc_p1.png)

- Big Intersection:

![\[\bigcap_{i=1}^{n} A_i = A_1 \cap A_2 \cap \ldots \cap A_n.\] \[\bigcap_{i=1}^{n} A_i = A_1 \cap A_2 \cap \ldots \cap A_n.\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_fdc7e227760302127f8ff48e2903b948500d5088_p1.png)

- Big Union:

![\[\bigcup_{i=1}^{n} A_i = A_1 \cup A_2 \cup \ldots \cup A_n.\] \[\bigcup_{i=1}^{n} A_i = A_1 \cup A_2 \cup \ldots \cup A_n.\]](/pinewiki/SummationNotation?action=AttachFile&do=get&target=latex_82e2381b46e0d4b2569faa80498d874949e01c4e_p1.png)

These all behave pretty much the way one would expect. One issue that is not obvious from the definition is what happens with an empty index set. Here the rule, as with sums and products, is to return the identity element for the operation. This will be True for AND, False for OR, and the empty set for union; for intersection, there is no identity element in general, so the intersection over an empty collection of sets is undefined.
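The same empty-index-set conventions show up in Python's built-ins, which gives a quick way to remember them. In the sketch below, `big_intersection` is a hypothetical helper written for this example; it refuses an empty collection because there is no universal set to return.

```python
from functools import reduce

# Big AND and big OR over an empty index set return the identity elements.
assert all([]) is True
assert any([]) is False

# Big union over an empty collection of sets is the empty set.
assert set().union(*[]) == set()

def big_intersection(sets):
    """Intersect a non-empty collection of sets; empty input is undefined."""
    sets = list(sets)
    if not sets:
        raise ValueError("intersection over an empty collection is undefined")
    return reduce(lambda acc, s: acc & s, sets)

assert big_intersection([{1, 2, 3}, {2, 3}, {3, 4}]) == {3}
```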

40. RelationsAndFunctions

41. StructuralInduction

42. SolvingRecurrences

Notes on solving recurrences. These are originally from CS365, and emphasize asymptotic solutions; for CS202 we recommend also looking at GeneratingFunctions.

43. The problem

A recurrence or recurrence relation defines an infinite sequence by describing how to calculate the n-th element of the sequence given the values of smaller elements, as in:

- T(n) = T(n/2) + n, T(0) = T(1) = 1.

In principle such a relation allows us to calculate T(n) for any n by applying the first equation until we reach the base case. To solve a recurrence, we will find a formula that calculates T(n) directly from n, without this recursive computation.

Not all recurrences are solvable exactly, but in most of the cases that arise in analyzing recursive algorithms, we can get at least an asymptotic (i.e. big-Theta) solution (see AsymptoticNotation).

By convention we only define T by a recurrence like this for integer arguments, so the T(n/2) represents either T(floor(n/2)) or T(ceiling(n/2)). If we want an exact solution for values of n that are not powers of 2, then we have to be precise about this, but if we only care about a big-Theta solution we will usually get the same answer no matter how we do the rounding.2 From this point on we will assume that we only consider values of n for which the recurrence relation does not produce any non-integer intermediate values.
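To see how little the rounding matters for the example recurrence T(n) = T(n/2) + n with T(0) = T(1) = 1, the sketch below evaluates it both ways. The function names are made up for this illustration. On powers of 2 the two versions agree exactly (the value works out to 2n - 1), and for every n tried both stay between n and 3n, which is consistent with a Θ(n) solution.

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def t_floor(n):
    """T(n) = T(floor(n/2)) + n with T(0) = T(1) = 1."""
    if n <= 1:
        return 1
    return t_floor(n // 2) + n

@lru_cache(maxsize=None)
def t_ceil(n):
    """The same recurrence, rounding n/2 up instead of down."""
    if n <= 1:
        return 1
    return t_ceil((n + 1) // 2) + n

# On powers of 2 no rounding happens, so the two versions agree.
for k in range(1, 20):
    n = 2**k
    assert t_floor(n) == t_ceil(n) == 2 * n - 1

# Elsewhere they differ, but only by a constant factor.
for n in range(1, 2000):
    assert n <= t_floor(n) <= 3 * n
    assert n <= t_ceil(n) <= 3 * n
```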

44. Guess but verify